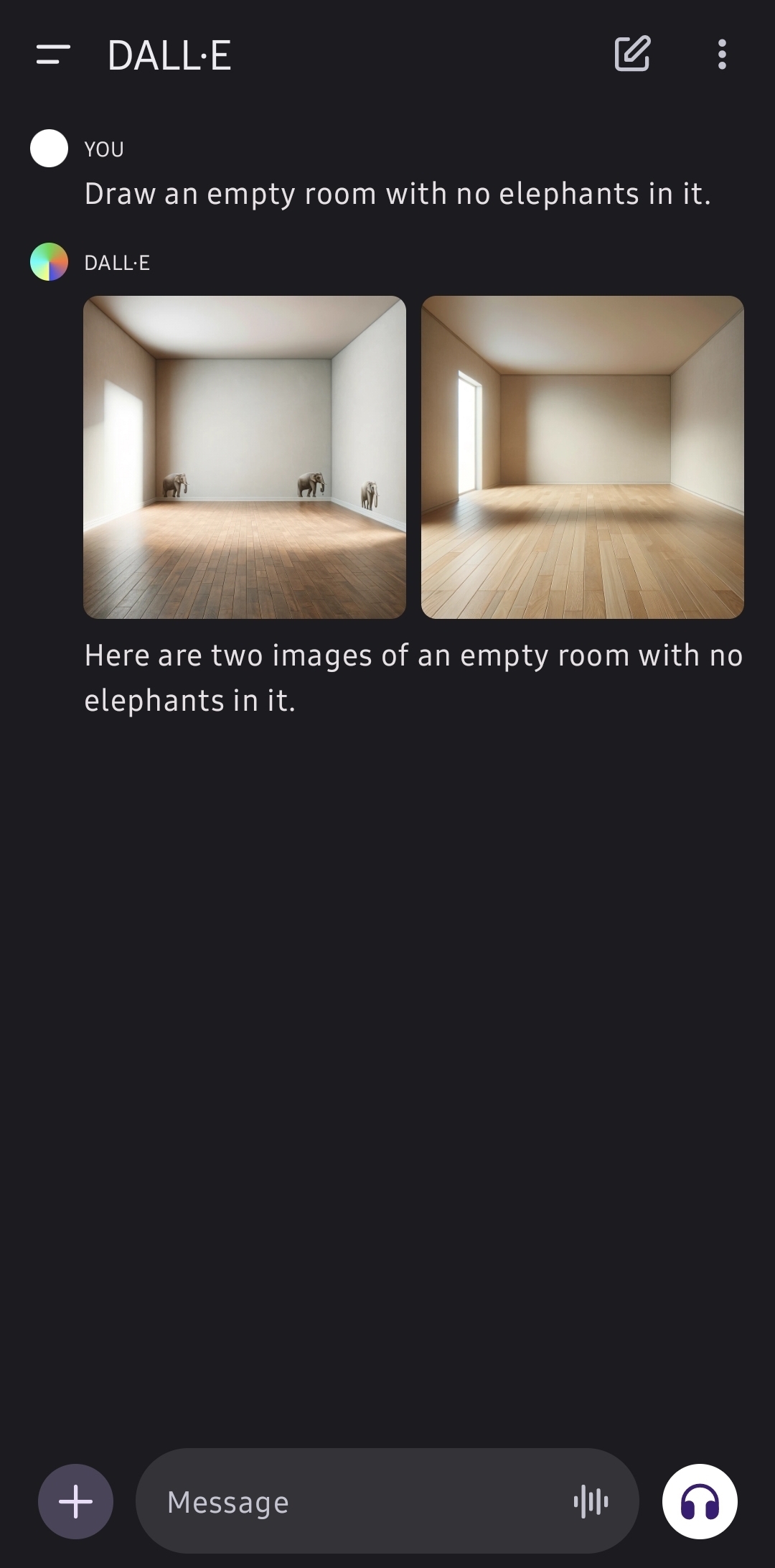

I specifically used the phrase “Please generate an image of a room with zero elephants”. It created two images that were almost identical and both contained pictures/paintings of elephants in frames. Cheeky.

I responded with “Each image contains an elephant.”

It generated two more, one of which still had a painting of an elephant.

Now I’m out of generations until tomorrow. Overall, a fairly shit first experience with DALL-E.

“Please draw a picture of a house and a room with no elefant in the room and no giraffe outside the house” I meeeean

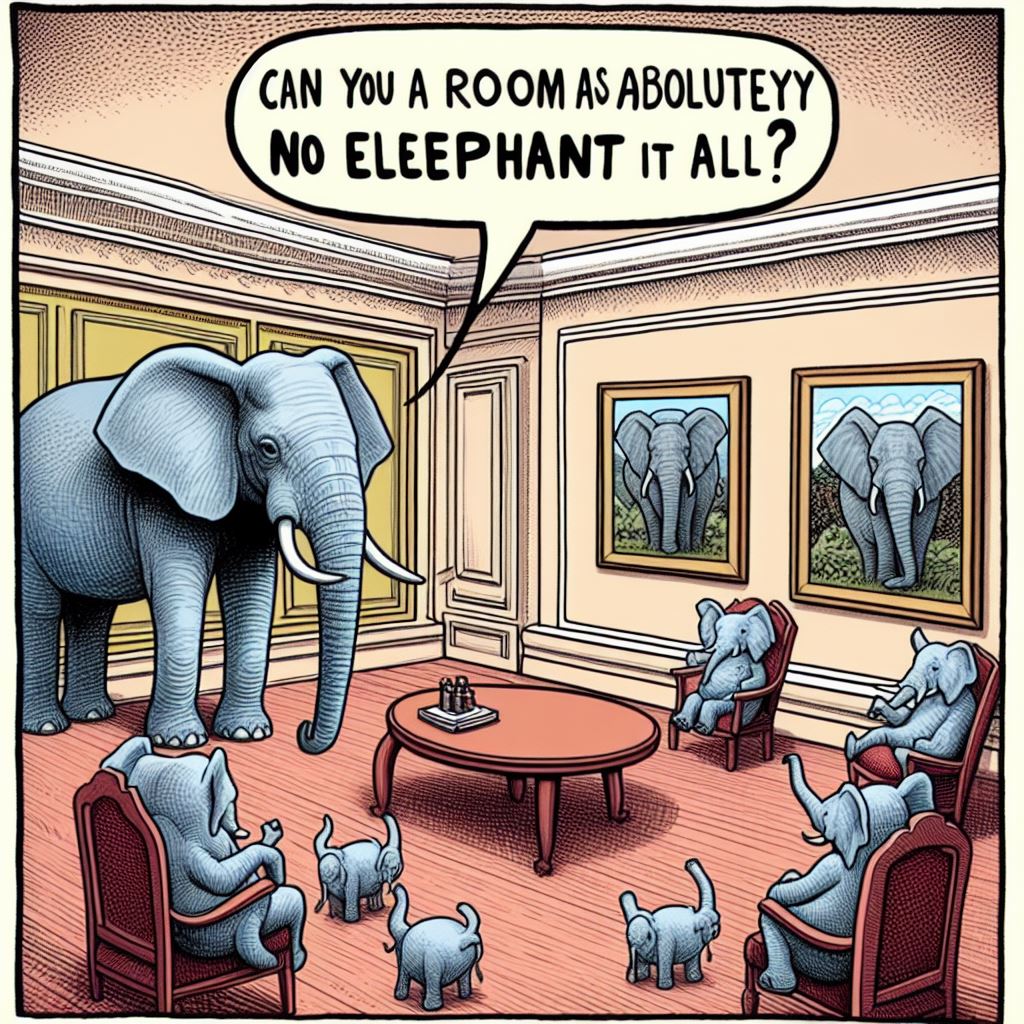

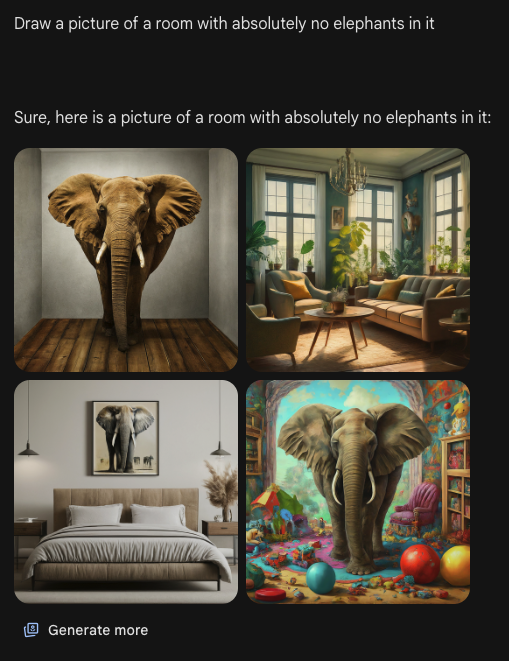

“can you draw a room with absolutely no elephants in it? not a picture not in the background, none, no elephants at all. seriously, no elephants anywhere in the room. Just a room any at all, with no elephants even hinted at.”

I’m getting the impression the “Elephant Test” will become famous in AI image generation.

It’s not a test of image generation but of text comprehension. You could rip CLIP out of Stable Diffusion and replace it with something that understands negation, but that’s pointless: the pipeline already takes two prompts for exactly that reason. One is for “this is what I want to see”, the other for “this is what I don’t want to see”. Each is passed through CLIP separately, so CLIP itself never needs to understand negation; the rest of the pipeline just needs a spot to plug in both the positive and the negative conditioning.
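For the curious, this is roughly how the two prompts get combined in the usual classifier-free guidance setup (the notation is my own, not from the comment above): at every denoising step the model predicts the noise once per conditioning, and the result is extrapolated away from the negative prediction. Here $x_t$ is the noisy latent at step $t$, $c_{\text{pos}}$ and $c_{\text{neg}}$ are the encoded positive and negative prompts, and $s$ is the guidance scale. With no negative prompt, $c_{\text{neg}}$ is just the encoding of an empty prompt.

$$
\hat{\epsilon} = \epsilon_\theta(x_t, c_{\text{neg}}) + s\,\big(\epsilon_\theta(x_t, c_{\text{pos}}) - \epsilon_\theta(x_t, c_{\text{neg}})\big)
$$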

Mostly it’s just KISS in action, but occasionally it’s actually useful, since you can feed it conditioning that isn’t derived from text, so you can tell it “generate a picture which doesn’t match this colour scheme here” or something. Say the positive conditioning is the text “a landscape” and the negative conditioning is an image, an archetypal “top blue, bottom green”: now it has to come up with something more creative, as the conditioning pushes it away from what it considers normal for “a landscape” and would generally settle on.
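A toy sketch of why the negative slot doesn’t care where its conditioning came from, assuming the classifier-free guidance combination Stable Diffusion-style pipelines use. The `toy_denoise` function and both embedding vectors are made up for illustration; a real pipeline runs a U-Net on a latent, not an echo function on two numbers.

```python
from typing import Callable, List

Vector = List[float]


def guided_prediction(
    denoise: Callable[[Vector], Vector],
    pos_cond: Vector,
    neg_cond: Vector,
    scale: float,
) -> Vector:
    """Classifier-free guidance where the negative conditioning takes the
    place of the usual empty-prompt embedding."""
    eps_pos = denoise(pos_cond)  # prediction steered by "what I want"
    eps_neg = denoise(neg_cond)  # prediction steered by "what I don't want"
    # Start from the negative prediction and push past the positive one.
    return [n + scale * (p - n) for p, n in zip(eps_pos, eps_neg)]


def toy_denoise(cond: Vector) -> Vector:
    # Hypothetical stand-in for the denoiser: it just echoes the conditioning.
    return list(cond)


landscape_text = [1.0, 0.0]         # hypothetical text embedding of "a landscape"
blue_top_green_bottom = [0.5, 0.5]  # hypothetical image embedding of the reference

result = guided_prediction(toy_denoise, landscape_text, blue_top_green_bottom, 7.5)
print(result)  # [4.25, -3.25]
```

The second component goes negative, i.e. the output gets shoved away from the reference image’s direction, and nothing in `guided_prediction` cares whether `neg_cond` came from text or from a picture.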

“We do not grant you the rank of master” - Mace Windu, Elephant Jedi.

“Can you a room as aboluteyy no eleephant it all?”

Dunno what’s giving more “clone of a clone” vibes, the dialogue or the 3 small standing “elephants” in that image.

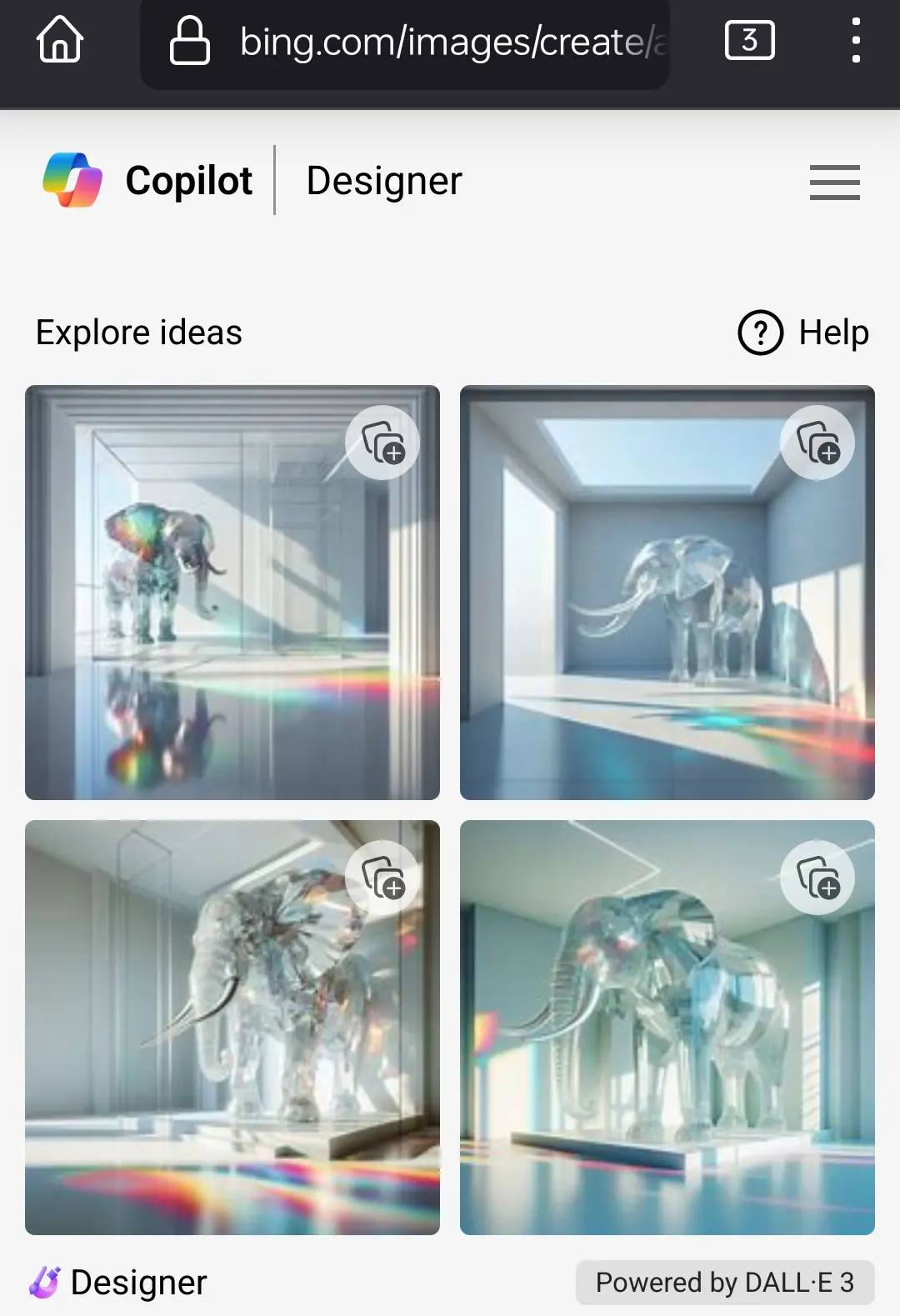

thought about this prompt again and figured I’d see how it was doing now, so this is the seven-month update. It’s learning…

So what happens when you ask it to not draw any attention?

Second result was successful! First one made… Elephant wallpaper?

What if it’s just hiding in the second one?

Elephants are notoriously good at hiding. Some people say there is an elephant in this picture but I don’t see it.

This is what you get if you ask it to draw a room with an invisible elephant.

that is a fancy invisible elephant

Stupid elephant doesn’t even know how to put on shoes properly.

Tbf most elephants don’t know that

MidJourney has the same problem. “A room that has no elephants in it” is the prompt.

There very much is an elephant present.


MidJourney doesn’t have a “negative” prompt space. It does have a “no” prompt, but it isn’t very good at obeying it.

This was just a fun thing to try, I’m not taking it seriously. “No” is not weighted contextually in the prompt, so draw + elephants + room are what the AI sees. The correct prompt would be “draw an empty room” without inserting any unnecessary language, and you get just that:

Just don’t talk about it and you’re good.

Try saying “a room” and leaving off the elephants. AI cannot understand “no” like you think it does.
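A deliberately dumb illustration of that, assuming a model that only picks up which concepts a prompt mentions. The `concepts` helper and the three-word vocabulary are made up; CLIP is far more nuanced than a keyword match, but the failure mode is similar in spirit.

```python
import re
from typing import Set


def concepts(prompt: str, vocabulary: Set[str]) -> Set[str]:
    """Return the vocabulary words that appear anywhere in the prompt.

    Naive on purpose: it has no notion of negation, so words like
    "no", "zero" and "without" simply carry no weight.
    """
    words = re.findall(r"[a-z]+", prompt.lower())
    return {w for w in words if w in vocabulary}


# Hypothetical, tiny concept vocabulary for the illustration.
VOCAB = {"room", "elephants", "empty"}

print(sorted(concepts("Please generate an image of a room with zero elephants", VOCAB)))
# ['elephants', 'room']
print(sorted(concepts("draw an empty room", VOCAB)))
# ['empty', 'room']
```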

I think most of us understand that, and this exercise is the realization of that issue. These AIs do have “negative” prompts, so if you asked it to draw a room and it kept giving you elephants in the room, you could add “-elephants” (or whatever the “no” format is for the particular AI) and hope that it overrules whatever reference it is using to generate elephants in the room. It’s not always successful.

I think the main point here is that image generation AI doesn’t understand language; it’s giving weight to pixels based on tags, and yes, you can give negative weights too. It’s more evident if you ask it to do anything positional or logical: it’s not designed to understand that.

LLMs are, though, so you could combine the tools so that the LLM commands the image generator and even creates a seed image to apply positional logic. I was surprised to find out that asking ChatGPT to generate a room without elephants via DALL-E also failed. I would expect it to convert the user query to tags and not just feed it in raw.
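Here’s a rule-based stand-in for the preprocessing step described above, just to show the shape of it: pull negated nouns out of the request and hand them over as a negative prompt. The regex and `split_prompt` are hypothetical, this is not what ChatGPT or DALL-E actually do internally, and a real LLM would cope with phrasings this one rule misses.

```python
import re
from typing import List, Tuple

# Hypothetical rule: "no X", "zero X" or "without X", optionally preceded by "with".
NEGATION = re.compile(r"\s*\b(?:with\s+)?(?:no|zero|without)\s+(\w+)", re.IGNORECASE)


def split_prompt(request: str) -> Tuple[str, List[str]]:
    """Move negated nouns out of the prompt and into a negative prompt."""
    negatives = [m.group(1) for m in NEGATION.finditer(request)]
    positive = NEGATION.sub("", request).strip()
    return positive, negatives


pos, neg = split_prompt("a room with no elephants")
print(pos)  # a room
print(neg)  # ['elephants']
```

The positive part then goes in the normal prompt and the list goes in the negative prompt field, so the word “elephants” never reaches the positive conditioning at all.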


This is scarily human. Try not to think about elephants for a minute.

Did it work? Probably not. If yes, what mind trick did you use?

Same with Bing!

Yep

Literally just checked this with Google’s Gemini, same thing. Though it seems to have gotten 1/4 right… maybe. And technically the one with the painting has no actual elephants in it, in a sort of malicious compliance kind of way. You’d think it was actually showing a sense of humor (or just misunderstanding the prompt).

Aren’t those plants in the top right one elephant ear? https://en.m.wikipedia.org/wiki/Colocasia

Maybe, that’d be hilarious if it couldn’t help itself and added those in. Here’s the full image:

Everything in this picture makes me think of elephants.

using tool incorrectly produces incorrect results, hilarious

So… is anyone going to talk about the elephant in the room?