As the AI market continues to balloon, experts are warning that its VC-driven rise is eerily similar to that of the dot com bubble.

In a few months there will be a new buzzword everyone will be jizzing themselves over.

No! Really, what a shock!!

interestingly it’s more like investing in Apple stocks in the 90’s.

How much VC is really being invested at the moment? I know a variety of people at start ups and the money is very tight at the moment given the current interest rate environment.

Did you mean the crypto/NFT bubble?

Yeah I was going to say VC throwing money at the newest fad isn’t anything new, in fact startups strive to exploit the fuck out of it. No need to actually implement the fad tech, you just need to technobabble the magic words and a VC is like “here have 2 million dollars”.

In our own company we half joked about calling a relatively simple decision flow in our back end an “AI system”.

crypto and nft have nothing to do with AI, though. Investing in AI properly is like investing in Apple in the early 90’s.

ultimately it won’t matter because if we get AGI, which is the whole point of investing in AI, stocks will become worthless.

NFTs yes but crypto is absolutely not a bubble. People have been saying that for decades now and it hasn’t been true. Yes there are shitcoins, just like shitstocks. But in general, it’s definitely not a bubble but an alternative investing method beside stocks, gold etc.

Crypto is 100% a bubble. It’s not an investment so much as a ponzi, sure you can dump money into it and maybe even make money, doesn’t mean it doesn’t collapse on a whim when someone else decides to dip out or the government shuts it down. Its value is exactly that of NFT’s because it’s basically identical, just a string of characters showing “ownership” of something intangible

Whatever man, used electricity will become valuable someday, I just know it.

Being able to pay for things anonymously is very valuable to me and to many other people. That’s just one example of what this technology can be used for.

That’s called ‘cash.’

Bitcoin isn’t anonymous yet it’s the most valuable crypto. Monero is barely used. Most crypto people just want to get rich, they don’t actually care about using it.

100% a bubble

NFTs yes but crypto is absolutely not a bubble. People have been saying that for decades now and it hasn’t been true. Yes there are shitcoins, just like shitstocks. But in general, it’s definitely not a bubble but an alternative investing method beside stocks, gold etc.

What is the underlying mechanism that increases its value, like company earnings are to stocks? Otherwise, it’s just a reverse funnel scheme.

What’s the underlying mechanism that gives money a value? We the humans give money and gold a value because we believe they’re valuable. Same with crypto. Bitcoin is literally like gold but digital. Stop saying what everyone without knowledge says and inform yourself. Not only about crypto, also about money etc.

If you don’t understand the fundamentals of money, how can you judge something to be a scam? There are a lot of people here who don’t even understand how money and gold work.

What’s the underlying mechanism that gives money a value?

A) the government backing it up along with its advanced military

B) the fact that you have to pay taxes in it

Yep, which is why Bitcoin can’t last forever without turning into some sort of GovCoin for it to truly replace money

Money has value insofar as governments use it to collect tax - so long as there’s a tax obligation, there’s a mandated demand for that currency and it has some value. Between different currencies, the value is determined based upon the demand for that currency, which is essentially tied to how much business is done in that currency (eg if a country sells goods in its own currency, demand for that currency goes up and so does its value).

This is not the same for crypto, there are no governments collecting tax with it so it does not have induced demand. The value of crypto is 100% speculative, which is fine for something that is used as currency, but imo a terrible vehicle for investment.

You are right in that it increases people’s belief in money because it is the primary source of revenue for states. But if the majority of people did not believe in the piece of paper, it would be worth nothing. That is the fundamental value of money as we know it.

There have been states where stones were the currency simply because the inhabitants believed in them.

Money is a physical representation of the concept of value. Saying “what gives money value” is like asking “why does rain make clouds.”

This is why printing money decreases the value of the currency - the value it represents has not changed so the value is diluted across the currency as the amount of currency expands.
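As a rough illustration of the dilution argument above, here is a minimal Python sketch. It assumes a toy model in which the total value the currency represents stays fixed while the number of units grows. The function name and all figures are made up for illustration, and real-world price levels depend on far more than the money supply.

```python
def value_per_unit(total_value: float, units_in_circulation: float) -> float:
    """Toy model: a fixed pool of value spread evenly across all currency units."""
    if units_in_circulation <= 0:
        raise ValueError("units_in_circulation must be positive")
    return total_value / units_in_circulation


# Hypothetical figures: the represented value stays the same,
# but the number of units in circulation grows by 25%.
before = value_per_unit(total_value=1_000_000, units_in_circulation=1_000_000)
after = value_per_unit(total_value=1_000_000, units_in_circulation=1_250_000)

print(f"value per unit before printing: {before:.2f}")
print(f"value per unit after printing:  {after:.2f}")
```

Under these assumptions each unit ends up representing less value than before, which is the dilution the comment describes.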

Gold Standard

Linking money to a material with intrinsic value for its value

Gold has intrinsic value

US Dollar moved to be a Fiat Currency

US Dollar is backed by a Government

Crypto has zero intrinsic value, not linked to anything with intrinsic value, and not backed by a Government

Crypto is an imaginary “item” some people want to have value. Value is created because of this want.

US Dollar is legal tinder for all US debts, Crypto is not

Crypto is not a currency but a digital commodity

legal tinder

I recall hearing it was illegal to burn money 😉

Money and gold have value because they can be exchanged easily for goods and services that are really wanted. As long as crypto currencies make it hard to trade for goods and services, they will be, at best, a fad, at worst, a scam. There needs to be a market for crypto to work. All I see is promises, no real commitments to it.

I don’t know if it’s good investment or not, but cryptocurrency has uses that are valuable to a lot of people. You can send money to other people without using a bank or PayPal and you can pay for things online anonymously. Some cryptocurrencies might have additional properties like Monero, which also gives you privacy. NFT might also have practical uses some day - for example it could be used for concert tickets.

Did ChatGPT write your comment?

Do you not have anything constructive to say?

The only way for someone to make money in crypto is for someone else to lose it.

Crypto is a scam.

Sooooo, the exact same premise with ehmmmm… Stocks.

You don’t understand how stocks work.

Reminds me of money.

What is that even supposed to mean in this context?

How is distributed ledger a scam? It’s nothing new and we know exactly how it works. It has nothing to do with making money. If I use it to pay for things online how am I getting scammed? I’m sorry, but it seems you don’t fully understand what this technology is.

The blazing fast technology that allows for up to 7 transactions a second worldwide? Amazing.

Don’t forget to pay your capital gains tax when you sell your butts online to buy your pizza.

The slow transaction speed is a valid criticism, but it doesn’t make this technology a scam. Different cryptocurrencies have different speeds. With Litecoin I think it takes me 40 minutes to pay for something. I still prefer that over being tracked by my bank or having to use PayPal. I think you can pay instantly with Dash, but I haven’t used it.

I don’t sell anything online, so what are you talking about?

I think people are using the word scam not in its strictest sense - that is to say, I don’t think Satoshi personally invented BTC to defraud everyone who bought it so in that sense, no, it is not a scam technically. A better way to describe it would be via the greater fool theory: the only way to make money is to find someone even more foolish than yourself to buy it.

Crypto as it is currently implemented is inefficient, riddled with problems, and is deflationary which you can argue about but most economists would say deflationary currencies are bad as they lead to shrinking economies and do not encourage investment.

There also aren’t that many problems that it ‘solves’ that aren’t already solvable by existing tech. And even in the case of things it’s useful for, if it were to be widely adopted the ‘benefits’ would be overshadowed by the massive new problems that would be created.

I think crypto will always have a niche, especially for black markets. I don’t think anything similar to currently existing crypto currencies will ever be adopted for widespread use as legal tender.

And as the other commenter pointed out, the tax situation is a nightmare. Even if you don’t sell online yourself, that’s a big hurdle to crypto achieving what many supporters claim it can do.

A better way to describe it would be via the greater fool theory: the only way to make money is to find someone even more foolish than yourself to buy it.

Cryptocurrency is not about making money. It’s a distributed ledger. Technology like that could maybe be a scam if it didn’t do what its creators claim it does. But it’s been around for a long time and we know exactly how it works.

Crypto as it is currently implemented is inefficient, riddled with problems, and is deflationary which you can argue about but most economists would say deflationary currencies are bad as they lead to shrinking economies and do not encourage investment.

It has problems, but like every technology it keeps improving. I choose to use it despite its flaws and will probably use it even more in the future.

There also aren’t that many problems that it ‘solves’ that aren’t already solvable by existing tech.

It gives me privacy and anonymity when paying online. No other online payment technology does. It also doesn’t require trust, since it’s decentralized. I’m not aware of any other technology that solves those problems.

I think crypto will always have a niche, especially for black markets. I don’t think anything similar to currently existing crypto currencies will ever be adopted for widespread use as legal tender.

That’s possible, but over time it is accepted by more and more stores. So it keeps growing. But even if it didn’t, you can use crypto to buy gift cards for any store. It doesn’t have to be popular.

And as the other commenter pointed out, the tax situation is a nightmare. Even if you don’t sell online yourself, that’s a big hurdle to crypto achieving what many supporters claim it can do.

When someone wants to invest in crypto, I can see how that could be a problem. I just use it to pay for things online.

So what’s the difference to money, stocks or every other investing option? There has to be someone who loses so someone different can win. We’re living in a capitalistic system, that’s how it works.

Money isn’t an investment, it’s a currency. Of course it’s a bad investment and investing in forex is barely a better investment than crypto (purely because there’s less risk of a sovereign currency devaluing to 0).

Investing in capital, like stocks, property, equipment etc does not require someone to lose money for the capital owner to profit. If I invest in a stock, each year I’m paid a dividend based on the profits of that organisation - no losers required. I could later sell that stock at the exact price I paid for it and come away with profit from those dividends. What determines whether it’s a good or bad investment, is the ratio of profit to the capital owner to cost of the asset. Crypto generates 0 profit, so it has 0 value as a capital investment.
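The dividend reasoning above can be sketched with a few lines of arithmetic. This is only an illustration with made-up numbers; the function name, the flat yearly dividend, and the prices are all assumptions, and it ignores taxes, fees, and inflation.

```python
def total_return(purchase_price: float, sale_price: float,
                 annual_dividend: float, years: int) -> float:
    """Profit from holding one share: dividends collected plus any price change."""
    dividends_collected = annual_dividend * years
    price_change = sale_price - purchase_price
    return dividends_collected + price_change


# Hypothetical share bought and later sold at the same price,
# paying a flat dividend every year in between.
profit = total_return(purchase_price=100.0, sale_price=100.0,
                      annual_dividend=4.0, years=5)

print(f"profit with no price change: {profit:.2f}")
```

In this sketch the holder still comes out ahead even though the buyer pays exactly what the seller originally paid, so nobody on either side of the trade has to take a loss.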

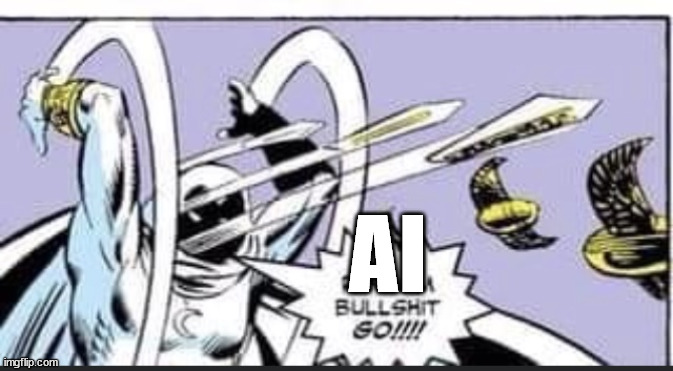

I think *LLMs to do everything is the bubble. AI isn’t going anywhere, we’ve just had a little peak of interest thanks to ChatGPT. Midjourney and the like aren’t going anywhere, but I’m sure we’ll all figure out that LLMs can’t really be trusted soon enough.

As someone who has worked in an academic manner with LLMs, it is infuriating that we are even discussing whether we can “trust” a statistical model that imitates language. It’s a word generator. It’s not a black box. We know what it does. We developed it. It’s like having a society-wide discussion around the ethical ramifications of keyboard auto-suggest on your phone.

Yeah, it’s kinda scary to see how much people don’t understand modern technology. If some non-expert tells them AI can’t be trusted, they just believe it. I’ve noticed the same thing with cryptocurrencies. A non-expert says it’s a scam and people believe it even though it’s clear they don’t understand anything about that technology or what it’s made for.

The thing is a lot of people are not using it for that. They think it is a living omniscient sci-fi computer who is capable of answering everything, just like they saw in the movies. No one thought that about keyboard auto-suggestions.

And with regards to people who aren’t very knowledgeable on the subject, it is difficult to blame them for thinking so, because that is how it is presented to them in a lot of news reports as well as adverts.

They think it is a living omniscient sci-fi computer who is capable of answering everything

Oh that’s nothing new:

On two occasions I have been asked [by members of Parliament], ‘Pray, Mr. Babbage, if you put into the machine wrong figures, will the right answers come out?’ I am not able rightly to apprehend the kind of confusion of ideas that could provoke such a question.

- Charles Babbage

I agree, yeah; but to an extent, people who write extensively about “AI ethics” also are part of the AI hype. Making these word probability models look like some kind of super scary boogeyman that will destroy literature, art and democracy is just cynical PR for them.

Yeah, but that is a very real ethical question about usage of it as a tool. We could have the same discussions about any kind of machinery. Those are fine questions to ask.

I am more talking about those “ethical questions” that assume the so-called “AI” might be sentient, or sapient, destroy the entire world, destroy art as we know it, or have any kind of intent or intelligence behind their outputs. There’s plenty of those even from reputable news sources. Those that humanize and hype up the entire “AI” craze, like OpenAI does themselves with all this “we are afraid of our creation” sci-fi babble.

I just want to make the distinction, that AI like this literally are black boxes. We (currently) have no ability to know why it chose the word it did for example. You train it, and under the hood you can’t actually read out the logic tree of why each word was chosen. That’s a major pitfall of AI development, it’s very hard to know how the AI arrived at a decision. You might know it’s right, or it’s wrong…but how did the AI decide this?

At a very technical level we understand HOW it makes decisions, we do not actively understand every decision it makes (it’s simply beyond our ability currently, from what I know)

You can observe what it does and understand its biases. If you don’t like it, you can change it by training it.

You train it, and under the hood you can’t actually read out the logic tree of why each word was chosen.

Of course you can, you can look at every single activation and weight in the network. It’s tremendously hard to predict what the model will do, but once you have an output it’s quite easy to see how it came to be. How could it bloody be otherwise? You calculated all that stuff to get the output, the only thing you have to do is to prune off the non-activated pathways. That kind of asymmetry is in the nature of all non-linear systems, a very similar thing applies to double pendulums: Once you observed it moving in a certain way it’s easy to say “oh yes the initial conditions must have looked like this”.

What’s quite a bit harder to do for the likes of ChatGPT compared to double pendulums is to see where they possibly can swing. That’s due to LLMs having a fuckton more degrees of freedom than two.
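To make the "look at every activation and prune the non-activated pathways" idea concrete, here is a minimal Python sketch on a toy network. Everything in it is an assumption for illustration: the weights are hand-picked, the network has one hidden ReLU layer with three units, and the helper name `forward_with_trace` is hypothetical. It says nothing about how feasible this is at the scale of an actual LLM, which is exactly what the two sides of this exchange disagree on.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def forward_with_trace(inputs, hidden_weights, output_weights):
    """Run a one-hidden-layer ReLU network and record what produced the output.

    Returns the output, every hidden activation, and the contribution of each
    hidden unit that actually fired (inactive units are pruned from the trace).
    """
    hidden = []
    for unit_weights in hidden_weights:
        pre_activation = sum(w * x for w, x in zip(unit_weights, inputs))
        hidden.append(relu(pre_activation))

    output = sum(w * h for w, h in zip(output_weights, hidden))

    # Keep only the pathways that were active for this particular input.
    contributions = {
        i: output_weights[i] * h for i, h in enumerate(hidden) if h > 0.0
    }
    return output, hidden, contributions


# Hand-picked toy weights (hypothetical, not from any real model).
inputs = [1.0, 2.0]
hidden_weights = [[1.0, 1.0], [-1.0, 0.0], [0.5, 0.25]]
output_weights = [2.0, 5.0, -3.0]

output, hidden, contributions = forward_with_trace(
    inputs, hidden_weights, output_weights
)
print("output:", output)
print("hidden activations:", hidden)
print("active pathways:", contributions)
```

For this input the second hidden unit never fires, so it drops out of the trace and the output is fully accounted for by the other two. Reading off a trace like this after the fact is cheap; predicting it for every possible input is the hard part.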

I don’t disagree with everything you said but wanted to just weigh in on the more degrees of freedom.

One major thing to consider is that unless we have 24/7 sensor recording with AI out in the real world and a continuous monitoring of sensor/equipment health, we’re not going to have the “real” data that the AI triggered on.

Version and model updates will also likely continue to cause drift unless managed through some sort of central distribution service.

Any large Corp will have this organization and review or are in the process of figuring it out. Small NFT/Crypto bros that jump to AI will not.

IMO the space will either head towards larger AI ensembles that try to understand where an exact rubric is applied vs more AGI human reasoning. Or we’ll have to rethink the nuances of our train test and how humans use language to interact with others vs understand the world (we all speak the same language as someone else but there’s still a ton of inefficiency)

Dot-com was a bubble because some companies just hosted a website and got massive funding. Same with crypto: some just launched a random coin similar to ETH and got massive funding. But AI can now replace jobs and needs massive funding to train, so there aren’t many copycat startups and the barrier to entry is high. There is also less free money going around right now due to interest rates. I think this might just be different.

@DarkMatter_contract It’s not that the AI CAN replace jobs, it’s that they’re gonna use it to replace jobs anyway.

The burst will come from those companies succeeding and quickly destroying a lot of their customers’ businesses in the process.

Whatever this iteration of “AI” will be, it has a limit that the VC bubble can’t fulfill. That’s kind of the point though because these VC firms, aided by low interest rates, can just fund whatever tech startup they think has a slight chance of becoming absorbed into a tech giant. Most of the AI companies right now are going to fail; as long as they do it as cheaply as possible, the VC firms basically skim the shit that floats to the top.

Same thing happened with crypto and blockchain. The whole “move fast and break things” in reality means, “we made up words for something that isn’t special to create value out of nothing and cash out before it returns to nothing.”

Except I’m able to replace 2 content creator roles I’d otherwise need to fill with AI right now.

There’s this weird Luddite trend going on with people right now, but it’s so heavily divorced from reality that it’s totally non-impactful.

AI is not a flash in the pan. It’s emerging tech.

I think the problem is education. People don’t understand modern technology and schools teach them skills that make them easily replaceable by programs. If they don’t learn new skills or learn to use AI to their advantage, they will be replaced. And why shouldn’t they be?

Even if there is some kind of AI bubble, this technology has already changed the world and it will not disappear.

@Freesoftwareenjoyer Out of curiosity, how is the world appreciably different now that AI exists?

Anyone can use AI to write a simple program, make art or maybe edit photos. Those things used to be something that only certain groups of people could do and required some training. They were also unique to humans. Now computers can do those things too. In a very limited way, but still.

@Freesoftwareenjoyer Anyone could create art before. Anyone could edit photos. And with practice, they could become good. Artists aren’t some special class of people born to draw, they are people who have honed their skills.

And for people who didn’t want to hone their skills, they could pay for art. You could argue that’s a change but AI is not gonna be free forever, and you’ll probably end up paying in the near future to generate that art. Which, be honest, is VERY different from “making art.” You input a direction and something else made it, which isn’t that different from just getting a friend to draw it.

Yes, after at least a few months of practice people were able to create simple art. Now they can generate it in minutes.

And for people who didn’t want to hone their skills, they could pay for art. You could argue that’s a change but AI is not gonna be free forever, and you’ll probably end up paying in the near future to generate that art.

If you wanted a specific piece of art that doesn’t exist yet, you would have to hire someone to do it. I don’t know if AI will always be free to use. But not all apps are commercial. Most software that I use doesn’t cost any money. The GNU/Linux operating system, the web browser… actually other than games I don’t think I use any commercial software at all.

You input a direction and something else made it, which isn’t that different from just getting a friend to draw it.

After a picture is generated, you can tell the AI to change specific details. Knowing what exactly to say to the AI requires some skill though - that’s called prompt engineering.

@SCB The Luddites were not upset about progress, they were upset that the people they had worked their whole lives for were kicking them to the street without a thought. So they destroyed the machines in protest.

It’s not weird, it’s not just a trend, and it’s actually more in touch with the reality of employer-employee relations than the idea that these LLMs are ready for primetime.

Luddites were wrong and progress happened despite them.

I’m not really concerned about jobs disappearing. Get a different job. I’m on my 4th radically different job of my career so far. The world changes and demanding it should not because you don’t want to change makes you the ideological equal of a conservative arguing about traditional family values.

Meanwhile I’ll be over here using things like Synthesia instead of hiring an entry level ID.

Not everyone can flex into new roles. Have some compassion for those who get left behind. The lack of compassion in your response actually causes you to look conservative.

The lack of rationale and reason in your response actually causes you to look conservative.

I have compassion. I think the government should invest heavily into retraining programs and moving subsidies.

I don’t think we should hold all of progress back because somebody doesn’t want to change careers

Edit: retraining, not restraining. That’s an important typo fix lol

You could use this kind of argument for almost anything. For example if we stop burning coal, many coal miners will lose their jobs. That doesn’t mean that we should keep burning coal.

That’s exactly why most socialists propose free re-education and social support for those coal workers so they can take different jobs in, for example, renewable energies.

Firing an entire industry without any support to follow up on those who lost their jobs is tyranny. No content writing house is seriously interested in helping their “AI”-replaced workers to resettle in a different job.

I agree, but isn’t that the job for the government?

@Freesoftwareenjoyer interesting you mention stopping burning coal. Because mining and burning coal is bad for the environment.

Guess what else is bad for the environment? Huge datacenters supporting AI. They go through electricity and water and materials at the same rates as bitcoin mining.

A human being writing stuff only uses as much energy as a human being doing just about anything else, though.

So yes, while ending coal would cost some miners jobs, the net gain is worth it. But adopting AI in standard practice in the entertainment industry does not have the same gains. It can’t offset the human misery caused by the job loss.

You could say that gaming is also bad for the environment and that’s just entertainment. But I wouldn’t say that we should get rid of it. Both cryptocurrency and AI have uses to our society. So do computers, internet, etc. All that technology has a cost, but it is useful. Technology also usually keeps improving. For example Ethereum doesn’t require mining anymore like Bitcoin does, so it should require much less electricity. People always work on finding new solutions to problems.

A human being writing stuff only uses as much energy as a human being doing just about anything else, though.

But if a computer the size of a smartphone could do the work of multiple people, that might be more efficient and could result in less coal being burned.

Computers and automation have improved our lives and I think AI might too. If AI takes away my job, but it also improves the society, would it be ethical for me to protest against it? I think it wouldn’t. I’ve accepted that it might happen and if it does, I will just have to learn something new.

What if we were finally able to get insurance companies out of healthcare in the US? Thousands would lose their jobs, but millions would suddenly be able to get care. So much money would be saved, but so many people would suddenly be out of work.

I don’t know about you, but I hate paying several hundred dollars a month (and 100s or 1000s if I actually get care) to prop up a whole ass middleman between me and my care.

Anyway, my point is we can’t keep old systems just for the sake of preserving jobs. The guy you’re replying to is short sighted and relying too heavily on a language imitation program, but he’s essentially right about not keeping jobs just because.

@new_acct_who_dis Yeah, but that wouldn’t hurt as much because all the people out of work would still have healthcare.

AI displaced creatives will lose their healthcare.

@SCB The Luddites gave way to Unions, which yes were more effective and gave us a LOT of good things like the 8-hour workday, weekends, and vacations. Technology alone did not give us that. Technology applied as bosses and barons wanted did not give us that. Collective action did that. And collective action has evolved along a timeline that INCLUDES sabotaging technology.

Things like the SAG-AFTRA/WGA strike are what’s going to get us good results from the adoption of AI. Until then, the AI is just a tool in the hands of the rich to control labor.

How is this at all on topic?

Yeah man unions are cool. That’s irrelevant to this discussion.

Crypto is still incredibly healthy. Bitcoin has been stable at $30k.

Is it still a big scam? Maybe. But what happened with FTX was just good ol corruption.

Anyone with exposure to Crypto either already collapsed, or wound down their position, so there wasn’t a huge effect on markets. AI will be similar. Some VC will fail, but it’s not the same as the dotcom bubble. It won’t cause a recession

OpenAI may fail if Microsoft doesn’t keep throwing money at it, but they already got what they want out of it. They’ll probably just end up acquiring the foundation and make money from the ways they’re implementing it in their products.

It’s still useful but the issue always was it’s expensive for what it does so that’s why it was used underground since that has value of its own. There are arguments that it’s actually more efficient than current systems, so the obvious question is: why don’t we have money that doesn’t cost a lot to maintain? The answer is the scary part.

Except that cryptocurrency has real uses, which are valuable to a lot of people.

You can bitch about it all you want but the reality is that it actually works for a select group, the Amazons, Binances, etc. And that was always how it was going to be. The failures you point to are not a sign that these things don’t work, they were always going to be there, they are like the people during the gold rush who found diddly squat, that doesn’t mean there wasn’t any gold.

Anyone who suggests that AI “will return to nothing” is a fool, and I don’t think you really believe it either.

Crypto was and still is a scam, and everyone that’s said so has just been validated for it. People saying that AI is overstated right now are being called fools, and the people who are AI-washing everything and blathering about how it’s going to be the future are awfully defensive about it, so much so that they resort to name-calling instead of substantiating it. As a consumer I hate ads anyway, so I’m indifferent to AI generated artwork for advertising, it’s all shit to me anyway.

If my TV shows and movies are made formulaic by AI even more so than they already are, I’ll just patronize the ones that are more entertaining and less formulaic. I fail to see how AI revolutionizes the world by automating things we could already live without, though. The only argument for the AI-washing we have is to push toward AGI, which we’re clearly still a very long way off from.

This just all stinks of the VR craze that hit in 2016 with all the lofty promises of simulating any possible experience, and in the end we got some minor reduction on the screen-door-effect while strapping Facebook to our faces and soon some Apple apps. But hey we spent hundreds of billions on some pipe dreams and made some people rich enough to not give a shit about VR anymore.

Going back all the way to the tulip mania of the 17th century.

Already!? XD

Pro tip: when you start to see articles talking about how something looks like a bubble, it means it’s already popped and anybody who hasn’t already cashed in their investment is a bag-holder.

https://en.wikipedia.org/wiki/Dot-com_bubble

Between 1990 and 1997, the percentage of households in the United States owning computers increased from 15% to 35% as computer ownership progressed from a luxury to a necessity. This marked the shift to the Information Age, an economy based on information technology, and many new companies were founded.

At least we got something out of the dot-com bubble. What do you think are the useful remnants, if you think it’s over? It still feels like the applications are in the very beginning. Not the actual tech, that’s actually been performance and dataset size and tweak updates since 2012.

The AI bubble produced many useful products already, many of which will remain useful even after the bubble popped.

The term bubble is mostly about how investment money flows around. Right now you can get near infinite moneys if you include the term AI in your business plan. Many of the current startups will never produce a useful product, and after the bubble has truly popped, those who haven’t will go under.

Amazon, eBay, Booking and Cisco survived the dotcom bubble, as they attracted paying users before the bubble ended. Things like GitHub Copilot, DALL-E, chat bots etc are genuinely useful products which have already attracted paying customers. Some of these products may end up being provided by competitors of the current providers, but someone will make long term money from these products.

Not the case for AI. We are at the beginning of a new era

Every startup now:

That’s Silicon Valley’s MO. Just half a year ago, people were putting crypto BS in their products.

Ah, the sudden realisation of all the VCs that they’ve tipped money into what is essentially a fancy version of predictive text.

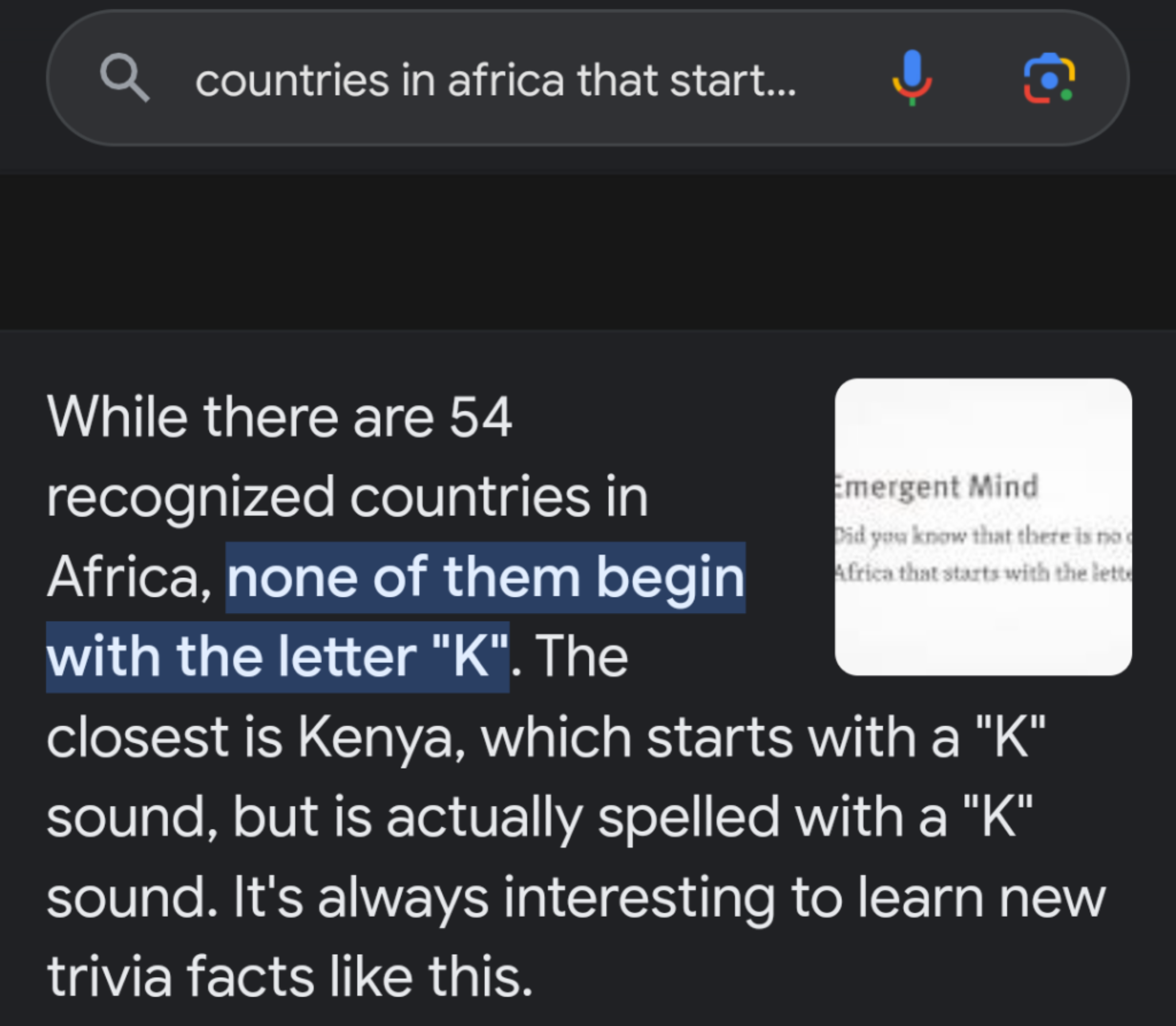

Alexa proudly informed me the other day that Ray Parker Jr is Caucasian. We ain’t in any danger of the singularity yet, boys.

AI is bringing us functional things though.

.Com was about making webtech to sell companies to venture capitalists who would then sell the company to a bigger company. It was literally about window dressing garbage to make a business proposition.

Of course there’s some of that going on in AI, but there’s also a hell of a lot of deeper opportunity being made.

What happens if you take a well done video college course, every subject, and train an AI that’s both good working with people in a teaching frame and is also properly versed on the subject matter. You take the course, in real time you can stop it and ask the AI teacher questions. It helps you, responding exactly to what you ask and then gives you a quick quiz to make sure you understand. What happens when your class doesn’t need to be at a certain time of the day or night, what happens if you don’t need an hour and a half to sit down and consume the data?

What if secondary education is simply one-on-one tutoring with an AI? How far could we get as a species if this was given to the world freely? What if everyone could advance as far as their interest let them? What if AI translation gets good enough that language is no longer a concern?

AI has a lot of the same hallmarks and a lot of the same investors as crypto and half a dozen other partially or completely failed ideas. But there’s an awful lot of new things that can be done that could never be done before. To me that signifies there’s real value here.

*dictation fixes

You got two problems:

First, ai can’t be a tutor or teacher because it gets things wrong. Part of pedagogy is consistency and correctness and ai isn’t that. So it can’t do what you’re suggesting.

Second, even if it could (it can’t get to that point, the technology is incapable of it, but we’re just spitballing here), that’s not profitable. I mean, what are you gonna do, replace public school teachers? The people trying to do that aren’t interested in replacing the public school system with a new gee whiz technology that provides access to infinite knowledge, that doesn’t create citizens. The goal of replacing the public school system is streamlining the birth to workplace pipeline. Rosie the robot nanny doesn’t do that.

The private school class isn’t gonna go for it either, currently because they’re ideologically opposed to subjecting their children to the pain tesseract, but more broadly because they are paying big bucks for the best educators available, they don’t need a robot nanny, they already have plenty. You can’t sell precision mass produced automation to someone buying bespoke handcrafted goods.

There’s a secret third problem which is that ai isn’t worried about precision or communicating clearly, it’s worried about doing what “feels” right in the situation. Is that the teacher you want? For any type of education?

First, ai can’t be a tutor or teacher because it gets things wrong.

Since the iteration we have that’s designed for general purpose language modeling and is trained widely on every piece of data in existence can’t do exactly one use case, you can’t conceive that it can ever be done with the technology? GTHO. It’s not like we’re going to say “ChatGPT, teach kids how LLMs work,” but rather some more structured program that uses something like ChatGPT for communication. This is completely reasonable.

that’s not profitable.

A. It’s my opinion, but I think you’re dead wrong and it’s easily profitable. If not to Ivy League standards, it would certainly put community college out of business.

B. Screw profit. Philanthropic investment throws a couple billion into a nonprofit run by someone who wants to see it happen.

The private school class isn’t gonna go for it either,

You think an Ivy League school is above selling a light model of their courseware when they don’t have to pay anyone to teach the classes, or grade the work? Check out Harvard University Edx. It’s not a stretch.

secret third problem which is that ai isn’t worried about precision or communicating clearly

Ohh a secret third problem, that sounds fun. I’ll let you in on another secret, AI isn’t worried because it’s a very large complicated math program. It doesn’t worry about communicating clearly, the people who pile on layer upon layer of LLM to produce output do that. It doesn’t give a damn about anything, but the people who work on it do.

You want clarity?

Let’s have GPT-4, as it sits here, clear up your complaint about my post:

"Here is a revised version of your text that sounds more educated:

There are two primary issues with the notion of using artificial intelligence as a tutor or teacher. Firstly, artificial intelligence is not infallible and can make mistakes. Consistency and correctness are integral components of pedagogy, and artificial intelligence is not yet capable of achieving these standards. As such, it is not suitable for the role you have suggested.

Secondly, even if artificial intelligence were capable of fulfilling this role (which it is not, due to technological limitations), it would not be a profitable endeavor. Replacing public school teachers with artificial intelligence would not align with the goals of those seeking to reform the public school system. The aim is to streamline the process from birth to the workplace, and an artificial intelligence nanny would not facilitate this.

Furthermore, the private school class would not be interested in such a proposal. They are currently opposed to subjecting their children to such technology and are willing to pay for the best educators available. Precision mass-produced automation cannot compete with bespoke handcrafted goods.

There is also a third issue to consider: artificial intelligence is not concerned with precision or clear communication. Instead, it focuses on what “feels” right in a given situation. Is this the type of teacher we want for any form of education?"

or perhaps you’d like to have it communicate clearly to younger people

"Sure, here is a revised version of the text written at a 5th-grade level:

There are two big problems with using computers to teach kids. The first problem is that computers can make mistakes. Teachers need to be right all the time, and computers aren’t always right. So, they can’t do the job you want them to do.

The second problem is that even if computers could do the job (they can’t, but let’s pretend), it wouldn’t make money. People who want to change schools don’t want to use computers to teach kids everything. They want to make it easier for kids to get jobs when they grow up. A computer nanny can’t do that.

Rich kids who go to private schools wouldn’t like it either. Their parents pay a lot of money for the best teachers. They don’t want a computer nanny. You can’t sell something cheap and easy to make to someone who wants something special and handmade.

There’s also a secret third problem. Computers don’t care about being right or explaining things clearly. They just do what they think is best at the moment. Is that the kind of teacher you want? For any kind of learning?"

This weekend my aunt got a room at a very expensive motel, and was delighted by the fact that a robot delivered amenities to her room. And at breakfast we had an argument about whether or not it saved the hotel money to use the robot instead of a person.

But the bottom line is that the robot was only in use at an extremely expensive hotel and is not commonly seen at cheap hotels. So the robot is a pretty expensive investment, even if it saves money in the long run.

Public schools are NEVER going to make an investment as expensive as an AI teacher, it doesn’t matter how advanced the things get. Besides, their teachers are union. I will give you that rich private schools might try it.

Single robot, single hotel = bad investment.

Single platform teaching an unlimited number of users anywhere in the world for whatever price can provide the R&D and upkeep. Greed would make it expensive if it can, it doesn’t have to be.

Woof.

I’m not gonna ape your style of argumentation or adopt a tone that’s not conversational, so if that doesn’t suit you don’t feel compelled to reply. We’re not machines here and can choose how or even if we respond to a prompt.

I’m also not gonna stop anthropomorphizing the technology. We both know it’s a glorified math problem that can fake it till it makes it (hopefully), if we’ve both accepted calling it intelligence there’s nothing keeping us from generalizing the inference “behavior” as “feeling”. In lieu of intermediate jargon it’s damn near required.

Okay:

Outputting correct information isn’t just one use case, it’s a deep and fundamental flaw in the technology. Teaching might be considered one use case, but it’s predicated on not imagining or hallucinating the answer. Ai can’t teach for this reason.

If ai were profitable then why are there articles ringing the bubble alarm bell? Bubbles form when a bunch of money gets pumped in as investment but doesn’t come out as profit. Now it’s possible that there’s not a bubble and all this is for nothing, but read the room.

But let’s say you’re right and there’s not a bubble: why would you suggest community college as a place where ai could be profitable? Community colleges are run as public goods, not profit generating businesses. Ai can’t put them out of business because they aren’t in it! Now there are companies that make equipment used in education, but their margins aren’t usually wide enough to pay back massive vc investment.

It’s pretty silly to suggest that billionaire philanthropy is a functional or desirable way to make decisions.

Edx isn’t for the people that go to Harvard. It’s a rent seeking cash grab intended to buoy the cash raft that keeps the school in operation. Edx isn’t an example of the private school classes using machine teaching on themselves and certainly not on a broad scale. At best you could see private schools use something like Edx as supplementary coursework.

I already touched on your last response up at the top, but clearly the people who work on ai don’t worry about precision or clarity because it can’t do those things reliably.

Summarizing my post with gpt4 is a neat trick, but it doesn’t actually prove what you seem to be going for because both summaries were less clear and muddy the point.

Now just a tiny word on tone: you’re not under any compulsion to talk to me or anyone else a certain way, but the way you wrote and set up your reply makes it seem like you feel under attack. What’s your background with the technology we call ai?

What do you want me to do here? Go through each line item where you called out something on a guess that’s inherently incorrect and try to find proper citations? Would you like me to take the things where you twisted what I said, and point out why it’s silly to do that?

I could sit here for hours and disprove and anti-fallacy you, but in the end, you don’t really care, you’ll just move the goal post. Your world view is AI is a gimmick and nothing that I present to you is going to change that. You’ll just cherry pick and contort what I say until it makes you feel good about AI. It’s a fool’s errand to try.

Things are nowhere near as bad as you say they are. What I’m calling for is well within the possible realm of the tech with natural iteration. I’m not giving you any more of my time. Any further conversation will just go unread and blocked.

Hey I know you’re out, but I just wanna jump in and defend myself: I never put words in your mouth and never moved a goal post.

Be safe out there.

I’ve seen your post history, comical that you’d talk to me about tone.

See anything you like?

Essentially we have invented a calculator of sorts, and people have been convinced it’s a mathematician.

We’ve invented a computer model that bullshits its way through tests and presentations and convinced ourselves it’s a star student.

.com brought us functional things. This bubble is filled with companies dressing up the algorithms they were already using as “AI” and making fanciful claims about their potential use cases, just like you’re doing with your AI example. In practice, that’s not going to work out as well as you think it will, for a number of reasons.

Gentleman’s bet: there will be AI teaching college level courses, augmenting video classes, within 10 years. It’s a video class that already exists, coupled with a helpdesk bot that already exists trained against tagged text material that already exists. They just need more purpose built non-AI structure to guide it all along the rails and oversee the process.

In the current state people can take classes on say Zoom, formulate a question, and then type it into Google, which pulls up an LLM-generated search result from Bard.

Is there profit in generating an LLM application on a much narrower set of training data to sell it as a pay-service competitor to an ostensibly free alternative? It would need to be pretty significantly more efficient or effective than the free alternative. I don’t question the usefulness of the technology since it’s already in use, just the business case feasibility amidst the competitive environment.

Yeah, current LLMs aren’t tuned for it. Not to say there’s no advantage to using one of them while in an online class. Under the general training, there’s no telling what it’s sourcing from. You could be getting an incomplete or misleading picture. If you’re teaching, you should be pulling information from teaching grade materials.

IMO, there are real and serious advantages from not using live classes. Firstly, you don’t want people to be forced to a specific class time. Let the early birds take it when they wake, let the night owls take it at 2am. Whenever people are on top of their game. If a parent needs to watch the kids 9-5, let them take their classes from 6-10. Forget all these fixed timeframes. If you get sick, or go on vacation, pause the class. When you get back, have it talk to you about the finer points of the material you might have forgotten and see if you still understand the material. You need something that’s capable of gauging if a response is close enough to the source material. LLMs can already do this to an extent, but their source material can be unreliable and if they screw with the general training it could have adverse effects on your system. You want something bottled, pinned at your version so you can provide consistent results and properly QA changes.

I tested GPT the other week by making up some open questions about IT support, then I wrote it answers of varying quality. It was able to tell me which answers were proper and which were not great. I asked it to tell me if the responses indicated knowledge of the given topic and it was able to answer correctly in my short test. It not only told me which answers were good and why, but it conveyed concerns about the bad answers. I’d want to see it more thoroughly tested and probably have a separate process wrapped around and watching grading.

What I’d like to see is a class given by a super good instructor. You know those superstars out there that make it interesting and fun, Feynman kinds of people. If you don’t interrupt it, after every new concept or maybe a couple (maybe you tune that to an indication of how well they’re doing) you throw them a couple of open-ish questions about the content to gauge how well they understand it. As the person watches the course, it tracks their knowledge on each section. When it detects a section where they don’t get it, or could get it better, it spends a couple minutes trying different approaches. Maybe it cues up a different short video if it’s a common point of confusion or maybe it flags them to work with a human tutor on a specific topic. If the tutor finds a deficiency, the next time someone has a problem right there, before it throws in the towel, it makes sure that the student doesn’t have the same deficiency. If it’s a common problem, they throw in an appendix entry and have the user go through it.

As it sits now, a lot of people perform marginally in school because of fixed hours or because they don’t want to stop the class for 5 minutes because they missed a concept three chapters ago when they had to take an emergency phone call or use the facilities. Some are just bad at test taking stress. You could make testing continuous and as easy as having a conversation. Someone who lives in the middle of rural Wisconsin could have access to the same level and care of teaching as someone in the suburbs. Kids with learning challenges currently get dumped into classes of kids with learning challenges. The higher functioning ones get kinda screwed as the ones with lower skills eat up the time. Hell, even my first CompSci class, the first three classes were full of people that couldn’t understand variables. The second the professor moved on to endianness the hands shot up and nothing else was done for the class period. He literally just repeated himself all class long and assigned us to do all the class training at home.

The tools to do all this are already here, just not in a state to do the job. Some place like the Gates Foundation could just go, you know, yeah, let’s do this.

The thing that guides them along won’t even be AI, it’ll just be a structured program, the AI comes in to prompt them to answer ongoing questions and to figure out if they were right or to help them understand something they don’t get and gauge their competency.
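A rough sketch of what I mean, in Python. Everything here is made up for illustration: `Section`, `CourseRunner` and `keyword_overlap_grader` are hypothetical names, and the grader is a dumb word-overlap stand-in for wherever the AI would actually plug in. The point is only that the loop, the pass mark and the hand-off to a human tutor are plain structured code, and the AI is one swappable function.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical types for illustration; none of these names come from a real product.
@dataclass
class Section:
    title: str
    question: str
    reference_answer: str


# A grader takes (reference_answer, student_answer) and returns a score in [0, 1].
Grader = Callable[[str, str], float]


def keyword_overlap_grader(reference: str, answer: str) -> float:
    """Stand-in for the AI grader: fraction of reference words found in the answer."""
    ref_words = set(reference.lower().split())
    ans_words = set(answer.lower().split())
    if not ref_words:
        return 0.0
    return len(ref_words & ans_words) / len(ref_words)


@dataclass
class CourseRunner:
    sections: List[Section]
    grader: Grader
    pass_mark: float = 0.6      # assumed threshold, would need tuning
    max_attempts: int = 2       # tries per section before handing off
    scores: Dict[str, float] = field(default_factory=dict)
    flagged_for_tutor: List[str] = field(default_factory=list)

    def run(self, ask: Callable[[str], str]) -> None:
        """Walk the sections in order; `ask` poses a question and returns the student's answer."""
        for section in self.sections:
            best = 0.0
            for _ in range(self.max_attempts):
                answer = ask(section.question)
                best = max(best, self.grader(section.reference_answer, answer))
                if best >= self.pass_mark:
                    break
            self.scores[section.title] = best
            if best < self.pass_mark:
                # Plain rule, no AI involved: hand this section off to a human tutor.
                self.flagged_for_tutor.append(section.title)
```

Swap `keyword_overlap_grader` for a call to whatever pinned model you trust and the rest of the program doesn’t change, which is the whole reason to keep the rails outside the AI.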

I think the platform is sellable. I think if anyone had access to something that did this (perhaps without accreditation) it would be a boon to humanity.

@linearchaos How can a predictive text model grade papers effectively?

What you’re describing isn’t teaching, it’s a teacher using an LLM to generate lesson material.

Absolutely not, the system guides them through the material and makes sure they understand the material, how is that not teaching?

In the dot com boom we got sites like Amazon, Google, etc. And AOL was providing internet service. Not a good service. AOL was insanely overvalued, (like insanely overvalued, it was ridiculous) but they were providing a service.

But we also got a hell of a lot of businesses which were just “existing business X… but on the internet!”

It’s not too dissimilar to how it is with AI now really. “We’re doing what we did before… but now with AI technology!”

If it follows the dot com boom-bust pattern, there will be some companies that will survive it and they will become extremely valuable in the future. But most will go under. This will result in an AI oligopoly among the companies that survive.

AOL was NOT a dotcom company, it was already far past its prime when the bubble was in full swing, still attaching CD-ROMs to blocks of Kraft cheese.

The dotcom boom generated an unimaginable number of absolute trash companies. The company I worked for back then had its entire schtick based on taking a lump sum of money from a given company, giving them a sexy Flash website and connecting them with angel investors for a cut of their ownership.

Photoshop currently using AI to get the job done is more of an advantage than 99% of the garbage that was wrought forth and died on the vine in the early 00’s. Topaz Labs can currently take a poor copy of VHS video uploaded to YouTube and turn it into something nearly reasonable to watch in HD. You can feed rough drafts of performance reviews or apologetic letters to people through ChatGPT and end up with nearly professional quality copy that iterates your points more clearly than you’d manage yourself with a few hours of review. (at least it does for me)

Those companies born around the dotcom boom that persist didn’t need the dotcom boom to persist, they were born from good ideas and had a good foundation.

There’s still a lot to come out of the AI craze. Even if we stopped where we are now, upcoming advances in the medical field alone will have a bigger impact on human quality of life than 90% of those 00’s money grabs.

The Internet also brought us a shit ton of functional things too. The dot com bubble didn’t happen because the Internet wasn’t transformative or incredibly valuable, it happened because for every company that knew what they were doing there were a dozen companies trying something new that may or may not work, and for every one of those companies there were a dozen companies that were trying but had no idea what they were doing. The same thing is absolutely happening with AI. There’s a lot of speculation about what will and won’t work and many companies will bet on the wrong approach and fail, and there are also a lot of companies vastly underestimating how much technical knowledge is required to make ai reliable for production and are going to fail because they don’t have the right skills.

The only way it won’t happen is if the VCs are smarter than last time and make fewer bad bets. And that’s a big fucking if.

Also, a lot of the ideas that failed in the dot com bubble weren’t actually bad ideas, they were just too early and the tech wasn’t there to support them. There were delivery apps for example in the early internet days, but the distribution tech didn’t exist yet. It took smart phones to make it viable. The same mistakes are ripe to happen with ai too.

Then there’s the companies that have good ideas and just underestimate the work needed to make it work. That’s going to happen a bunch with ai because prompts make it very easy to come up with a prototype, but making it reliable takes seriously good engineering chops to deal with all the times ai acts unpredictably.

they were doing there were a dozen companies trying something new that may or may not work,

I’d like some samples of that. A company attempting something transformative back then that may or may not work that didn’t work. I was working for a company that hooked ‘promising’ companies up with investors, no shit, that was our whole business plan, we redress your site in Flash, put some video/sound effects in, and help sell you to someone with money looking to buy into the next Google. Everything that was ‘throwing things at the wall to see what sticks’ was a thinly veiled grift for VC. Almost no one was doing anything transformative. The few things that made it (eBay, Google, Amazon) were using engineers to solve actual problems. Online shopping, online auctions, natural language search. These are the same kinds of companies that continue to spring into existence after the crash.

It’s the whole point of the bubble. It was a bubble because most of the money was going into pockets, not making anything. People were investing in companies that didn’t have a viable product and had no intention south of getting bought by a big dog and making a quick buck. There wasn’t all of a sudden this flood of inventors making new and wonderful things unless you count new and amazing marketing cons.

What happens if you take a well done video college course, every subject, and train an AI that’s both good working with people in a teaching frame and is also properly versed on the subject matter. You take the course, in real time you can stop it and ask the AI teacher questions. It helps you, responding exactly to what you ask and then gives you a quick quiz to make sure you understand. What happens when your class doesn’t need to be at a certain time of the day or night, what happens if you don’t need an hour and a half to sit down and consume the data?

You get stupid-ass students because an AI producing word-salad is not capable of critical thinking.

It would appear to me that you’ve not been exposed to much in the way of current AI content. We’ve moved past the shitty news articles from 5 years ago.

Five years ago? Try last month.

Or hell, why not try literally this instant.

You make it sound like the tech is completely incapable of uttering a legible sentence.

In one article you have people actively trying to fuck with it to make it screw up. And in your other example you picked the most unstable of the new engines out there.

Omg, it answered a question wrong once! The tech is completely unusable for anything, throw it away, throw it away.

I hate to say it, but the sky’s not falling. The tech is still usable, and it’s actually the reason why I said we need to have a specialized model to provide the raw data and grade the responses, using the general model only for conversation and gathering bullet points for the questions and responses. It’s close enough to flawless at that that it’ll be fine with some guardrails.

Oh, please. AI does shit like this all the time. Ask it to spell something backwards, it’ll screw up horrifically. Ask it to sort a list of words alphabetically, it’ll give shit out of order. Ask it something outside of its training model, and you’ll get a nonsense response because LLMs are not capable of inference and deductive reasoning. And you want this shit to be responsible for teaching a bunch of teenagers? The only thing they’d learn is how to trick the AI teacher into writing swear words.

Having an AI for a teacher (even as a one-on-one tutor) is about the stupidest idea I’ve ever heard of, and I’ve heard some really fucking dumb ideas from AI chuds.

There are two kinds of companies in tech: hard tech companies who invent it, and tech-enabled companies who apply it to real world use cases.

With every new technology you have everyone come out of the woodwork and try the novel invention (web, mobile, crypto, ai) in the domain they know with a new tech-enabled venture.

Then there’s an inevitable pruning period when some critical mass of mismatches between new tool and application run out of money and go under. (The beauty of the free market)

AI is not good for everything, at least not yet.

So now it’s AI’s time to simmer down and be used for what it’s actually good at, or continue as niche hard-tech ventures focused on making it better at those things it’s not good at.

I absolutely love how crypto (blockchain) works but have yet to see a good use case that’s not a pyramid scheme. :)

LLM/AI will never be good for everything. But it’s damn good at a few things now and it’ll probably transform a few more things before it runs out of tricks or actually becomes AI (if we ever find a way to make a neural network that big before we boil ourselves alive).

The whole quantum computing thing will get more interesting shortly, as long as we keep finding math tricks it’s good at.

I was around and active for dotcom. I think right now the tech is a hell of a lot more interesting and promising.

Crypto is very useful in defective economies such as those in South America, to compensate for the flaws of a crumbling financial system. It’s also, sadly, useful for money laundering.

For these 2 uses, it should stay functional.