There was a great article in the Journal of Irreproducible Results years ago about the development of Artificial Stupidity (AS). I always do a mental translation to AS whenever I see AI.

Nice replacement topic after the maintainer drama last week

I think the drama came from when the Russian forces started killing civilians 🤷

Not a company following the law.

Sucks to ~~suck~~ work for companies run by a wartime government.

Yea this is so blatant I’m not even going to click on that shit.

I play around with the paid version of chatgpt and I still don’t have any practical use for it. it’s just a toy at this point.

It’s useful for my firmware development, but it’s a tool like any other. Pros and cons.

I use shell_gpt with an OpenAI API key so that I don’t have to pay a monthly fee for their web interface, which is way too expensive. I topped up my account with $5 back in March and I still haven’t used it up. It is OK for getting info on very well established topics where doing a web search would be more exhausting than asking ChatGPT. But every time I try something more esoteric it will make up shit, like nonexistent options for CLI tools.

ugh hallucinating commands is such a pain

I used ChatGPT over the weekend to look up some syntax for a niche scripting language, hoping to cut down the time I spent working so I could get back to the weekend.

Then, yesterday, I spent time talking to a colleague who was familiar with the language to find the real syntax because chatGPT just made shit up and doesn’t seem to have been accurate about any of the details I asked about.

Though it did help me realize that this whole time when I thought I was frying things, I was often actually steaming them, so I guess it balances out a bit?

That’s about right. I’ve been using LLMs to automate a lot of cruft work from my dev job daily. It’s like having a knowledgeable intern who sometimes impresses you with their knowledge but needs a lot of guidance.

watch out; i learned the hard way in an interview that i do this so much that i can no longer create terraform & ansible playbooks from scratch.

even a basic api call from scratch was difficult to remember and i’m sure i looked like a hack to them since they treated me as such.

In addition, some studies have been released lately (not so sure how well established, so take this with a grain of salt) indicating that using LLMs for dev work correlates with an increase in perceived efficiency/productivity, but also with a strongly linked decrease in actual efficiency/productivity.

After some initial excitement, I’ve dialed back using them to zero, and my contributions have been on the increase. I think it just feels good to spitball, which translates to a heightened sense of excitement while working. But it’s really just much faster and more convenient to do the boring stuff with snippets and templates etc, if not as exciting. We’ve been doing pair programming lately with humans, and while that’s slower and less efficient too, it seems to contribute toward a rise in quality and fewer problems in code review later, while also providing the spitballing side. In a much better format, I think, too, though I guess that’s subjective.

I mean, interviews have always been hell for me (often with multiple rounds of leetcode) so there’s nothing new there for me lol

Same here, but this one was especially painful since it was the closest match with my experience I’ve ever encountered in 20ish years, and now I know that they will never give me the time of day again and, based on my experience in Silicon Valley, I may end up on their blacklist permanently.

Blacklists are heavily overrated and exaggerated, I’d say there’s no chance you’re on a blacklist. Hell, if you interview with them 3 years later, it’s entirely possible they have no clue who you are and end up hiring you - I’ve had literally that exact scenario happen. Tons of companies allow you to re-apply within 6 months of interviewing, let alone 12 months or longer.

The only way you’d end up on a blacklist is if you accidentally step on the owner’s dog during the interview or something like that.

Being on the other side of the interviewing table for the last 20ish years, and being told more times than I want to remember that we’re not going to hire people that everyone unanimously loved and we unquestionably needed, makes me think that blacklists are common.

In all of the cases I’ve experienced in the last decade or so, people who had FAANG and old silicon on their resumes but couldn’t do basic things like creating an ansible playbook from scratch were either an automatic addition to that list or at least the butt of a joke that pervades the company’s Kool-Aid drinker culture for years afterwards, especially so in recruiting.

Yes they’ll eventually forget and I think it’s proportional to how egregious or how close to home your perceived misrepresentation is to them.

I think I’ve probably only ever been blacklisted once in my entire career, and it’s because I looked up the reviews of a company I applied to and they had some very concerning stuff so I just ghosted them completely and never answered their calls after we had already begun to play a bit of phone tag prior to that trying to arrange an interview.

In my defense, they took a good while to reply to my application and they never sent any emails just phone calls, which it’s like, come on I’m a developer you know I don’t want to sit on the phone all day like I’m a sales person or something, send an email to schedule an interview like every other company instead of just spamming phone calls lol

Agreed though, eventually they will forget, it just needs enough time, and maybe you’d not even want to work there.

And then people will complain about that saying it’s almost all hype and no substance.

Then that one tech bro will keep insisting that lemmy is being unfair to AI and there are so many good use cases.

No one is denying the 10% use cases, we just don’t think it’s special or needs extra attention since those use cases already had other possible algorithmic solutions.

Tech bros need to realize, even if there are some use cases for AI, there has not been any revolution, stop trying to make it happen and enjoy your new slightly better tool in silence.

Hi! It’s me, the guy you discussed this with the other day! The guy that said Lemmy is full of AI wet blankets.

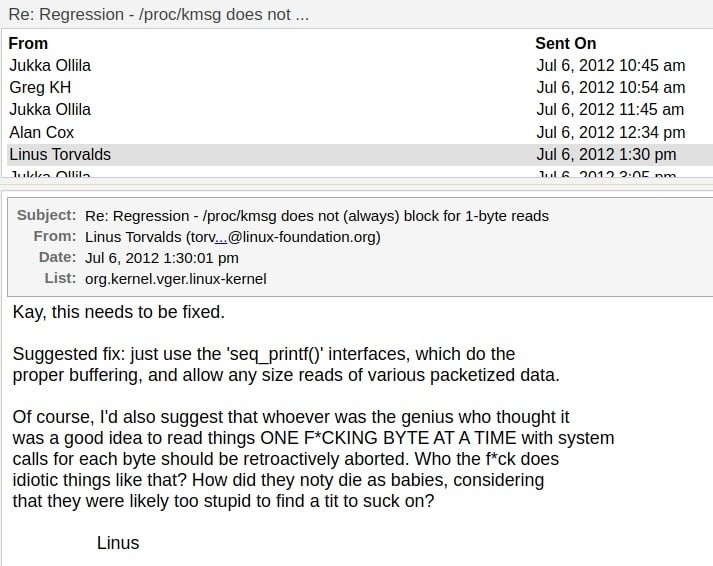

I am 100% with Linus AND would say the 10% good use cases can be transformative.

Since there isn’t any room for nuance on the Internet, my comment seemed to ruffle feathers. There are definitely some folks out there that act like ALL AI is worthless and LLMs specifically have no value. I provided a list of use cases that I use pretty frequently where it can add value. (Then folks started picking it apart with strawmen).

I gotta say though this wave of AI tech feels different. It reminds me of the early days of the web/computing in the late 90s and early 2000s, where it’s fun, exciting, and people are doing all sorts of weird, quirky shit with it, and it’s not even close to perfect. It breaks a lot and has limitations but there is something there. There is a lot of promise.

Like I said elsewhere, it ain’t replacing humans any time soon, we won’t have AGI for decades, and it’s not solving world hunger. That’s all hype bro bullshit. But there is actual value here.

Hi! It’s me, the guy you discussed this with the other day! The guy that said Lemmy is full of AI wet blankets.

Omg you found me in another post. I’m not even mad; I do like how passionate you are about things.

Since there isn’t any room for nuance on the Internet, my comment seemed to ruffle feathers. There are definitely some folks out there that act like ALL AI is worthless and LLMs specifically have no value. I provided a list of use cases that I use pretty frequently where it can add value. (Then folks started picking it apart with strawmen).

What you’re talking about is polarization and yeah, it’s a big issue.

This is a good example. I never strawmanned anyone, nor did I disagree with the fact that it can be useful in some shape or form. I was trying to say its value is much, much lower than what people claim it to be.

But that’s the issue with polarization, me saying there is much less value can be interpreted as absolute zero, and I apologize for contributing to the polarization.

and that 10% isn’t really real, just a gabbier Dr. Sbaitso

Idk man, my doctors seem pretty fucking impressed with AI’s capabilities to make diagnoses by analyzing images like MRIs.

then you are a fortunate rarity. most posts about the tech complain about ai just rearranging what it is told and regurgitating it with added spice

I think that is because most people are only aware of its use as what are, effectively, chat bots. Which, while the most widely used application, is one of its least useful. Medical image analysis is one of the big places it is making strides in. I am told, by a friend in aerospace, that it is showing massive potential for a variety of engineering uses. His firm has been working on using it to design, or modify, things like hulls, air frames, etc. Industrial uses, such as these, are showing a lot of promise, it seems.

that’s good. be nice if all the current ai developers would aim that way

Yup.

I don’t know why. The people marketing it have absolutely no understanding of what they’re selling.

Best part is that I get paid if it works as they expect it to and I get paid if I have to decommission or replace it. I’m not the one developing the AI that they’re wasting money on, they just demanded I use it.

That’s true software engineering, folks. Decoupling doesn’t just make it easier to program and reuse, it saves your job when you need to retire something later too.

The worrying part is the implications of what they’re claiming to sell. They’re selling an imagined future in which there exists a class of sapient beings with no legal rights that corporations can freely enslave. How far that is from the reality of the tech doesn’t matter, it’s absolutely horrifying that this is something the ruling class wants enough to invest billions of dollars just for the chance of fantasizing about it.

The people marketing it have absolutely no understanding of what they’re selling.

Has it ever been any different? Like, I’m not in tech, I build signs for a living, and the people selling our signs have no idea what they’re selling.

Their goal isn’t to make AI.

The goal of both the VCs and the startups is to make money. That’s why.

It’s not even to make money, they already do that. They need GROWTH. More money this quarter than last or the stockholders don’t get paid.

Growth doesn’t mean revenue over cost anymore, it just means number go up. The easiest way to create growth from nothing is marketing tulips to venture capital and retail investors.

Linus is known for his generosity.

Linus is a generous man.

Dude…

What?

True. 10% is very generous.

Yeah, he’s right. AI is mostly used by corps to enshittificate their products for just extra profit

Like with any new technology. Remember the blockchain hype a few years back? Give it a few years and we will have a handful of areas where it makes sense and the rest of the hype will die off.

Everyone sane probably realizes this. No one knows for sure exactly where it will succeed so a lot of money and time is being spent on a 10% chance for a huge payout in case they guessed right.

It has some application in technical writing, data transformation and querying/summarization but it is definitely being oversold.

There’s an area where blockchain makes sense!?!

Cryptocurrencies can be useful as currencies. Not very useful as investment though.

Git is a sort of proto-blockchain – well, it’s a ledger anyway. It is fairly useful. (Fucking opaque compared to subversion or other centralized systems that didn’t have the ledger, but I digress…)

Yep, Ik ai should die someday.

he isn’t wrong

If anything he’s being a bit generous.

game devs gonna have to use different language to describe what used to be simply called “enemy AI” where exactly zero machine learning is involved

Logic and Path-finding?

CPU

So basically just like linux. Except linux has no marketing…So 10% reality, and 90% uhhhhhhhhhh…

So basically just like linux. Except linux has no marketing

Except for the most popular OS on the Internet, of course.

You’re aware Linux basically runs the Internet, right?

You’re aware Linux basically runs the ~~Internet~~ World, right?

Billions of devices run Linux. It is an amazing feat!

That says more about your ignorance than anything about AI or Linux.

What

Some Linux bad Windows good troll

Did I fall into a 1999 Slashdot comment section somehow?

Never heard of Android I guess?

90% angry nerds fighting each other over what answer is “right”

AI as we know it does have its uses, but I would definitely agree that 90% of it is just marketing hype

You just haven’t tried OpeningAI’s latest orione model. A company employee said it is soooo smart, can you believe it? And the government is like, goddamn we are so scareded of it. Im telling you AGI december 2024, you’ll will see!

Edit:

Is it so hard for people to see sarcasm?

Year of the Linux Deskto…oh wait wrong thread, same same though. If we just wait one more year, we’ll have FULL FSD!

Next year, I promise, is the year we all switch to crypto, just wait!

In just two years, no one will be driving 4,000lb cars anymore, everyone just needs a Segway.

We’re going to have “just walk out” grocery stores in two years, where you pick items off the shelf, and ~~10,000 outsourced Indians will review your purchase and complete your CC transaction in about a half hour.~~ our awesome technology will handle everything, charging you for your groceries as you leave the store, in just two more years!

I really thought by making intentional mistakes in my comment people would be able to see the OBVIOUS sarcasm, but I guess not…

The image generation features are fun, even though you have to browbeat the idiot AI into following the description.

The only times I’ve seen AI work well are for things like game development, mainly the upscaling of textures and filling in missing frames of older games so they can run at higher frame rates without being choppy. It may even have applications for getting more voice acting done… If the SAG and Silicon Valley can find an arrangement for that that works out well for both parties…

If not for that I’d say 10% reality was being… incredibly favorable to the tech bros

^^

^^^