- cross-posted to:

- [email protected]

cross-posted from: https://lemmy.world/post/24850430

EDIT: I had an RPi; I think it died from ESD

EDIT2: this is also my work machine and i sleep to the sound of the fans

Many selfhosters are also homelabbers

I don’t know if I can completely explain the difference, but I would classify myself as a home labber not a self-hoster.

I use Proton for email and don’t have any YouTube/Twitter/etc alt front ends. The majority of my lab (below) is storage and compute for playing around with stuff like Kubernetes and Ansible to help me with my day job skills. Very little is exposed to the Internet (mostly just a VPN endpoint for remote lab work).

I view self-hosting as more of a, “let me put this stuff on the internet instead of using a corporation’s gear” effort. I know folks who host their own Mastodon instance, have their own alt front ends for various social media, their own self-hosted search engines.

Thanks this makes sense!

I think I’m somewhere in between the two. I’m still pretty inexperienced so I might say I’m self hosting through my homelab as I expect to screw something up any day now haha. So far so good though

Homelab = I have a bunch of computers I experiment and learn with, often breaking stuff and starting from scratch

Self-host = I have a bunch of computers where I run my own email service, I replaced Netflix with plex/jellyfin, I have a Minecraft server for my friend group, etc

Thanks! I am still pretty inexperienced so I’m inadvertently doing both at the same time with the same few machines haha

The first few years of self hosting tend to have a lot of experimentation, so the overlap is natural.

I’m hitting my grumpy old man phase of self-hosting where I want my Minecraft server and Jellyfin to be stable so I don’t have to hear about it from my family. So ironically, my setup is starting to look more like an overkill setup because I want to self host with stability instead of tinkering around to see if I can run a different server distro, etc. My home lab years got me to find a real nice base, but now I just add things to that base and I don’t mess with the formula I have.

IMO the distinction is that if you are doing it for fun (or education) and could afford to lose any service you run for an extended period, you’re home labbing. If you are doing it for cost savings, privacy, anti-capitalist, or control reasons and the services are critical and need to stay up, you’re self-hosting.

tl;dr - experimentation vs utility

That’s the thing, it’s pretty typical to have both and do both at the same time! You just have some machines more stable so you don’t wipe your photos when you break k8s.

the best home server is a computer you’re not using, the second best home server is a bajillion dollar server rack you looted from behind a meta LLM farm

Sure, from behind it…

I bought a cheap mini PC with an Intel N100 processor as my entry into self hosting, so far it absolutely crushes every task I’ve thrown at it

How do you manage storage limitations on Mini pcs?

In my case, 2 USB 3.0 hard drive enclosures with twin drives, in ZFS mirror configuration. I keep the disks “awake” with https://packages.debian.org/bookworm/hd-idle, and it meets all my needs so far, no complaints about the speed for my humble homelab needs.

So far I haven’t needed mass storage. The Mini Pc itself has a 1TB nvme drive, which I could expand upon since there’s space for another 2.5 inch drive inside the case, plus USB ports for external drives. Obviously not close to a real NAS, but again, so far I have not had any need for that.

Which Mini-Pc do you use?

https://www.amazon.de/dp/B0CJF6CFLP

This is the one I bought, it was discounted to 220€ when I grabbed it.

If wanting to have cool oscilloscopes and blinkenlights is wrong then I don’t want to be right.

no one said it’s wrong keep going

I went overboard but only because I was having fun with it and didn’t like the octopus of hard drives plugged into my NUC

w520 goes hard. Still a very capable machine with the sheer amount of cpu horsepower it has from that era.

Not comparable to modern chips of course, but for what you can get those things for, damn it’s not bad.

You have it backwards. We self host to justify the hardware setup.

I just have a used Dell T3600 I got for like 50 bucks at most? Desktop form factor and quiet fans mostly, but still has 32GB ECC memory, 8 core CPU and a full size PCI-E slot to put my 1070 Ti in for transcoding in Immich and Jellyfin, secondary Stable Diffusion setup and such and such.

Is having a bunch of oscilloscopes in your electronics lab self-hosting now ?

Using old laptops or other repurposed computers for self-hosting is just great! Who doesn’t have an old computer collecting dust in their home? Anyone has the potential for self-hosting :)

😆

Noice!

This is mine:

dayeeem nice really with 2 gigs

Yeah, the normal N150 had 1 GB RAM but plus model had 2 GB. Served me well. Nowadays it barely uses it though. :)

Lol is this an eeepc?

(Edit: Samsung logo - it is not) 🤣

My “rack” consisted entirely of old laptops, two of which were eeepcs, for years and it worked great. I replaced them all with a single NUC later heh

The eeepc was a modern marvel at the time change my mind!

They are both smol though :D

indeed smol bois

I got like 6 old computers from 2000 to 2016 all doing different things. If I had a choice between a high end server and cobbled together mess I would always choose the mess. Lot more entertainment and fun to figure out

Mine are a bit more recent (2012-202*) but same thing. Old hardware gets used for something, my “server” is just my old i5 11500k with as much ram as I could throw at it and as many drives as I can fit in the case. Oldest is a laptop that’s my bench computer.

Helps me justify upgrades, hardware’s been capable for a long time, always impressive to me just how capable things are, and sometimes it’s part of the fun (if you enjoy problem solving) to work around limitations. Off-lease enterprise stuff interests me, would need to figure out where it lives though.

How would you connect to your “server” when you don’t know its IP? With static IP or DNS or both?

For local services? - just type in static IP that I’ve assigned myself, otherwise I have a subdomain pointing to my online services. works like a charm

Dynamic DNS or static IP. Whatever is convenient for you. If humans are connecting, it is generally preferred to type in a domain name, rather than an IP address.
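To make the "DNS or static IP, or both" idea concrete, here is a minimal Python sketch that tries a name lookup first and falls back to a known static address if the lookup fails. The function name `resolve_or_fallback`, the hostname and the fallback address are all made up for illustration; nothing here is specific to any dynamic DNS provider.

```python
import socket


def resolve_or_fallback(hostname, fallback_ip, resolver=socket.getaddrinfo):
    """Return an IPv4 address for hostname, or fallback_ip if the lookup fails.

    `resolver` is injectable so the function can be exercised without a network;
    by default it is the standard library's socket.getaddrinfo.
    """
    try:
        # Ask only for IPv4 results; the port is irrelevant for a plain lookup.
        results = resolver(hostname, None, socket.AF_INET)
    except socket.gaierror:
        # Name did not resolve (no DNS, stale dynamic DNS record, typo, ...).
        return fallback_ip
    # Each entry is (family, type, proto, canonname, sockaddr);
    # for IPv4, sockaddr is an (ip, port) tuple.
    return results[0][4][0] if results else fallback_ip
```

Something like `resolve_or_fallback("homeserver.example.org", "192.168.1.10")` would then hand back whichever address is usable, with both values being placeholders for your own subdomain and static IP.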

Yeah dynamic DNS works pretty good for me, after I set it up I never had any problems with it.

hostname + tailscale

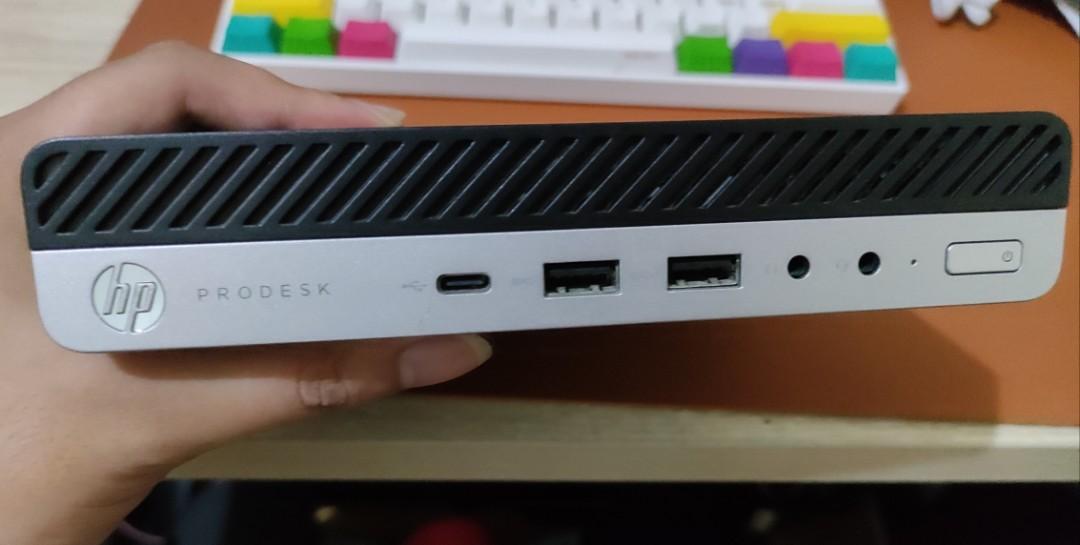

Best starter for self hosting:

Although laptops technically have a built in battery backup 😎

I recently got an M710q with an i3 7100T. It uses around 3W on idle. I threw in 8GB of RAM and a 512GB ramless NVMe for a total of under 100€. Absolutely would recommend (if you don’t need too much storage). Also Dell has some machines.

For more info, servethehome (they have a YouTube channel and a blog) has a whole series on “tiny mini micro” machines.

What’s a ramless NVMe? Specifically the ramless part, I know an NVMe is an SSD.

Some fancy SSDs have additional DRAM cache:

The presence of a DRAM chip means that the CPU does not need to access the slower NAND chips for mapping tables while fetching data. DRAM being faster provides the location of stored data quickly for viewing or modification.

TIL, thanks!

DRAM-less NVMe drives don’t have what basically amounts to a cache of readily accessible storage that makes large reads and writes faster. So they’re cheaper, but slower, and wear out faster

I’d say not just starter… My rack is full of tiny/mini/micros. Proxmox on all, data on the three NAS boxes, easy to replace a box if needed (for example, the optiplex 7040 that the board died on).

Way quieter than a regular rack, lower power use, etc. If all goes well following an intended move, I should be able to safely power it off solar + batt only. Grand total wattage for all these boxes is less than my desktop (when I last checked at least, I was running about 300-350W. I did swap two that have dgpu’s now, so maybe a touch higher).

My homelab is three Lenovo M920q systems complete with 9th gen i7 procs, 24GB ram, and 10Gbps fibre/Ceph storage. Those mini PCs can be beasts.

There are some 13th gen i9s at work that are usff (like a fat version of the tiny, they are p3 ultras) I can’t wait to get my hands on at home. dGPU, 2.5gbit + 1gbit on board, 64gb ram on these as purchased, etc, etc. Total monster in under 4l.

I actually ended up with a cluster of those over a standard server for a client, way more power and lower price, and with HA to boot. Should have a few all to myself next year and I can’t wait to be ridiculous with them.

Don’t worry, I’m using an over 10 year old on-board Atom Mainboard, and it works fine with several services running.

I think the issue for some people (why they may buy expensive hardware) is that their server is not “enterprise grade”, literally meaning a whole server rack with a SAN, firewall, etc. If you’re new to this hobby, please consider this unsolicited advice:

Use whatever hardware you already have or buy only what you need to achieve your goals.

Some people want to “cosplay as a sysadmin” like what Jeff Geerling sells on his tshirts. That can mean doing this stuff for fun or maybe self teaching for a job. For those folks, buying “enterprise” could possibly make sense. But I would argue that even the core concepts of that hardware can be learned on stuff you already have.

Enterprise hardware is loud, inefficient, and will likely have idiosyncrasies that make running it at home kinda suck. An old laptop is perfect as a place to host stuff or play with software.

One of the things engineers/admins have to do in a datacenter is plan for rack power efficiency. That often means planning for the capacity you are going to use, for the space you have and choosing the cheapest solution for that.

I think it’s generally considered more impressive how much you can do within the constraints you have, vs having so much capacity for a cheap price. Like, how many services can you run on a Raspberry Pi? Can you create “good enough” performance for a storage area network using just gigabit? The skills you get by limiting yourself probably outperform working with “the real stuff”, even if your purpose is trying to get a job. I’d argue the same for folks who simply want to self host. Run what you got until it stops, and then try to buy for capacity again.

Your power bill, the environment, and your wallet will thank you.

Downsizing from an ex biz full fat tower server to a few Pis, a mini PC and a Synology NAS was the best decision ever here.

The new hardware was paid for quickly in the power savings alone. The setup is also much quieter.
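For anyone wanting to check the "paid for in power savings" claim against their own setup, a rough payback calculation can be sketched in Python as below. The function name `payback_months` and every input are hypothetical; plug in your own measured wattages, electricity price and hardware cost. It assumes the machines run 24/7 at a constant average draw, which is a simplification.

```python
def payback_months(old_watts, new_watts, price_per_kwh, hardware_cost):
    """Estimate months until new hardware pays for itself via lower power draw.

    Assumes both setups run around the clock at a constant average wattage.
    Returns None when the new setup does not actually save power.
    """
    hours_per_month = 24 * 365 / 12  # average month length in hours
    saved_kwh = (old_watts - new_watts) / 1000 * hours_per_month
    monthly_saving = saved_kwh * price_per_kwh
    if monthly_saving <= 0:
        return None
    return hardware_cost / monthly_saving
```

Calling it with an old and new average draw in watts, a price per kWh and the purchase cost gives the break-even time in months; a meter at the wall is the easiest way to get realistic wattage numbers.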

You don’t think about power consumption a lot when working with someone else’s supply (unless it’s your actual job to), but it becomes very visible when you see a server gobbling up power on a meter at home.

You’re right about the impressiveness of working creatively within constraints. We got to the moon in '69 with a fraction of the computing power available to the average consumer today. Look at the history of the original Elite videogame for another great example of working creatively and efficiently within a rather small box.