That’s… just not true? Current frontier AI models are actually surprisingly diverse: a dozen companies from America, Europe, and China are releasing competitive models, not to mention the countless finetunes created by the community. And many of them you can run entirely on your own hardware, so no one really has control over how they are used. (I’m not saying that’s necessarily a good thing, just pointing out that Eno is wrong.)

Either the article editing was horrible, or Eno is wildly uninformed about the world. Creation of AIs is NOT the same as social media. You can’t blame a hammer for some evil person using it to hit someone in the head, and there is more to ‘hammers’ than just assaulting people.

Eno does strike me as the kind of person who could use AI effectively as a tool for making music. I don’t think he’s team “just generate music with a single prompt and dump it onto YouTube” (AI has ruined lo-fi study channels) - the stuff at the end about distortion is what he’s interested in experimenting with.

There is a possibility for something interesting and cool there (I think about how Chuck Person’s Eccojams is just short loops of random songs repeated in different ways, but it’s an absolutely revolutionary album), even if in effect all that’s going to happen is music execs thinking they can replace songwriters and musicians with “hey siri, generate a pop song with a catchy chorus” while talentless hacks inundate YouTube and Bandcamp with shit.

Yeah, Eno actually has made a variety of albums and art installations using simple generative AI for musical decisions, although I don’t think he does any advanced programming himself. That’s why it’s really odd to see comments in an article implying he is really uninformed about AI…he was pioneering generative music 20-30 years ago.

I’ve come to realize that there is a huge amount of misinformation about AI these days, and the issue is compounded by there being lots of clumsy, bad early AI works in various art fields, web journalism etc. I’m trying to cut back on discussing AI for these reasons, although as an AI enthusiast, it’s hard to keep quiet about it sometimes.

Eno is more a traditional algorist than “AI” (by which people generally mean neural networks)

Sure. I worked in the game industry and sometimes AI can mean ‘pick a random number if X occurs’ or something equally simple, so I’m just used to the term used a few different ways.

Totally fair

I could see him using neural networks to generate short bits with weird anomalies or glitchy sounds, then intentionally picking and looping them. That’s the direction I’d like AI in music to go, so maybe that’s what I’m reading into it, but it fits Eno’s vibe and philosophy.

AI as a tool not to replace other forms of music, but doing things like training it on contrasting music genres or self made bits or otherwise creatively breaking and reconstructing the artwork.

John Cage was all about ‘chance’ music - composing based on what he divined from the I Ching. People have been kicking around ideas like this for longer than the AI bubble has existed - the big problem will be digging out the good stuff when the people typing “generate a three hour vaporwave playlist” can upload ten videos a day…

Brian Eno is cooler than most of you can ever hope to be.

Dunno. I’ve tried the generative music part (not like LLMs), and I think if I spent a few more years of weekly migraines on it, I’d get better.

you mean like in the same way that learning an instrument takes time and dedication?

For some reason the megacorps have got LLMs on the brain, and they’re the worst “AI” I’ve seen. There are other types of AI that are actually impressive, but the “writes a thing that looks like it might be the answer” machine is way less useful than they think it is.

Most LLMs for chat, pictures and clips are magical and amazing for about 4-8 hours of fiddling; then they lose all entertainment value.

As for practical use, the things can’t do math, so they’re useless at work. I write better emails on my own, so I can’t imagine being so lazy and socially inept that I need help writing an email asking for tech support or outlining an audit report. Sometimes the web summaries save me from clicking a result, but I usually click anyway because the things are so prone to very convincing hallucinations. So yeah, utterly useless in their current state.

I usually get some angsty reply when I say this from some techbro-AI-cultist-singularity-head who starts whinging about how it’s reshaped their entire life, but in some deep niche way that is completely irrelevant to the average working adult.

I have also talked to way too many delusional maniacs who are literally planning for the day an Artificial Super Intelligence is created and the whole world becomes like Star Trek and they personally will become wealthy and have all their needs met. They think this is going to happen within the next 5 years.

The delusional maniacs are going to be surprised when they ask the Super AI “how do we solve global warming?” and the answer is “build lots of solar, wind, and storage, and change infrastructure in cities to support walking, biking, and public transportation”.

Which is the answer they will get right before sending the AI back for “repairs.”

As we’ve already seen with Grok several times.

They absolutely adore AI, it makes them feel in-touch with the world and able to feel validated, since all it is is a validation machine. They don’t care if it’s right or accurate or even remotely neutral, they want a biased fantasy crafting system that paints terrible pictures of Donald Trump all ripped and oiled riding on a tank and they want the AI to say “Look what you made! What a good boy! You did SO good!”

He’s not wrong.

I’d say the biggest problem with AI is that it’s being treated as a tool to displace workers, but there is no system in place to make sure that that “value” (I’m not convinced commercial AI has done anything valuable) created by AI is redistributed to the workers that it has displaced.

Welcome to every technological advancement ever applied to the workforce

The system in place is “open weights” models. These AI companies don’t have a huge head start on the publicly available software, and if the value is there for a corporation, most any savvy solo engineer can slap together something similar.

No?

Anyone can run an AI, even on the weakest hardware; there are plenty of small open models for this.

Training an AI requires very strong hardware, but that is not an impossible hurdle, as the models on Hugging Face show.

But the people with the money for the hardware are the ones training it to put more money in their pockets. That’s mostly what it’s being trained to do: make rich people richer.

This completely ignores all the endless (open) academic work going on in the AI space. Loads of universities have AI data centers now and are doing great research that is being published out in the open for anyone to use and duplicate.

I’ve downloaded several academic models, and all commercial models and AI tools are built on that public research.

I run AI models locally on my PC and you can too.

That is entirely true and one of my favorite things about it. I just wish there was a way to nurture more of that and less of the, “Hi, I’m Alvin and my job is to make your Fortune-500 company even more profitable…the key is to pay people less!” type of AI.

We shouldn’t do anything ever because poors

But you can make this argument for anything that is used to make rich people richer. Even something as basic as pen and paper is used everyday to make rich people richer.

Why attack the technology if it’s the rich people you are against and not the technology itself?

It’s not even the people; it’s their actions. If we could figure out how to regulate its use so its profit-generation capacity doesn’t build on itself exponentially at the expense of the fair treatment of others and instead actively proliferate the models that help people, I’m all for it, for the record.

Yeah, I’m an AI researcher, and with the weights released for DeepSeek, anybody can run an enterprise-level AI assistant. Running the full model natively does require about $100k in GPUs, but with that hardware it could easily be fine-tuned with something like LoRA for almost any application. That model can then be distilled and quantized to run on gaming GPUs.

It’s really not that big of a barrier. Yes, $100k in hardware is a barrier for an individual, but from a non-profit’s perspective that is peanuts.

Also, adding a vision encoder for images to DeepSeek would theoretically not be that difficult, for the same reason. In fact, I’m working on research right now that finds GPT-4o and o1 have similar vision capabilities, implying they share the same first-layer vision encoder and that textual chain-of-thought tokens are then read by subsequent layers. (My team only arrived at this insight last week, so if anyone can disprove it, I would be very interested to know!)
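For anyone wondering why LoRA makes fine-tuning so much cheaper, here is a minimal plain-Python sketch of the parameter arithmetic. LoRA freezes the base weight matrix W (d × k) and trains two small matrices B (d × r) and A (r × k), so the effective weight becomes W + B·A. The 4096 × 4096 size and rank 8 are hypothetical numbers for illustration, not DeepSeek's actual configuration, and `scale` stands in for the scaling factor real implementations apply.

```python
def full_update_params(d: int, k: int) -> int:
    """Trainable parameters when fine-tuning an entire d x k weight matrix."""
    return d * k


def lora_update_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r adapter: B is d x r, A is r x k."""
    return d * r + r * k


def matmul(x, y):
    """Plain-Python matrix product, so the sketch has no dependencies."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def apply_lora(w, b, a, scale=1.0):
    """Return W + scale * (B @ A); the frozen base weights W are not modified."""
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


d = k = 4096  # hypothetical projection size
r = 8         # hypothetical adapter rank
print(full_update_params(d, k))     # 16777216
print(lora_update_params(d, k, r))  # 65536
```

With these numbers the adapter trains 256 times fewer parameters for that one matrix, and the base weights stay untouched, which is why one large checkpoint can serve many cheap task-specific adapters.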

It’s possible to run the big DeepSeek model locally for around $15k, not $100k. People have done it with two M3 Ultra Mac Studios, or the equivalent.
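As a rough check on these hardware figures: weight memory is about parameter count × bits per weight ÷ 8. The sketch below assumes the roughly 671B-parameter DeepSeek-V3/R1 checkpoint and counts weights only. Whether the cheaper setup relied on a 4-bit quantization is an assumption here, not something stated above.

```python
PARAMS = 671e9  # approximate total parameter count of DeepSeek-V3/R1


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Gigabytes (10**9 bytes) needed just to hold the weights."""
    return params * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4):
    # Weights only: KV cache, activations and runtime overhead come on top.
    print(f"{bits:>2}-bit weights: {weight_gb(PARAMS, bits):,.1f} GB")
```

Going from 16-bit to 4-bit weights cuts the footprint by a factor of four, which goes a long way toward explaining the gap between the two price estimates.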

Though I don’t think it’s a good use of money personally, because the requirements are dropping all the time. We’re starting to see some very promising small models that use a fraction of those resources.

Would you say your research is evidence that DeepSeek’s model was built using data/algorithms taken from OpenAI via industrial espionage (as Sam Altman is alleging without evidence)? Or is it just likely that they arrived at the same logical solution?

Not that it matters, of course! Just curious.

Well, OpenAI has clearly scraped everything that is scrapable on the internet, copyrights be damned. I haven’t actually used DeepSeek enough to make a strong analysis, but I suspect Sam is just mad they got beaten at their own game.

The real innovation that isn’t commonly talked about is the invention of Multi-head Latent Attention (MLA), which drives the dramatic efficiency gains in both memory (59x) and computation (6x). It’s an absolute game changer, and I’m surprised OpenAI hasn’t released their own MLA model yet.

While on the subject of stealing data, I’m of the strong opinion that there is no such thing as copyright when it comes to training data. Humans learn by example, and all works are derivative of those that came before, at least to some degree. Thus, if humans can’t be accused of using copyrighted text to learn how to write, then AI shouldn’t be either. Just my hot take, which I know is controversial outside of academic circles.
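To give a sense of where MLA's memory savings come from, this sketch compares the per-token, per-layer KV-cache size of standard multi-head attention (a full key and value vector per head) with an MLA-style cache (one compressed latent vector plus a small decoupled RoPE key). The dimensions are assumptions modeled on the configuration published for DeepSeek-V2. The result is not meant to confirm the 59x figure above, which may be measured against a different baseline.

```python
def mha_cache_elems(n_heads: int, head_dim: int) -> int:
    """Standard MHA caches a full key and a full value vector for every head."""
    return 2 * n_heads * head_dim


def mla_cache_elems(latent_dim: int, rope_key_dim: int) -> int:
    """MLA caches one compressed KV latent plus a small decoupled RoPE key."""
    return latent_dim + rope_key_dim


n_heads, head_dim = 128, 128        # assumed attention configuration
latent_dim, rope_key_dim = 512, 64  # assumed MLA dimensions

mha = mha_cache_elems(n_heads, head_dim)
mla = mla_cache_elems(latent_dim, rope_key_dim)
print(mha, mla, round(mha / mla, 1))  # 32768 576 56.9
```

Under these assumptions the cache shrinks by a factor in the same ballpark as the commenter's number, which is what lets long contexts fit in far less GPU memory.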

AI has a vibrant open source scene and is definitely not owned by a few people.

A lot of the data to train it is only owned by a few people though. It is record companies and publishing houses winning their lawsuits that will lead to dystopia. It’s a shame to see so many actually cheering them on.

So long as there are big players releasing open weights models, which is true for the foreseeable future, I don’t think this is a big problem. Once those weights are released, they’re free forever, and anyone can fine-tune based on them, or use them to bootstrap new models by distillation or synthetic RL data generation.
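Distillation can take several forms. One common version, soft-target knowledge distillation, trains a smaller "student" model to match the temperature-softened output distribution of the larger "teacher". Below is a minimal plain-Python sketch of that loss. The logits and temperature are hypothetical, and this is not a claim about how any particular open-weights model was distilled; synthetic-data approaches work differently.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for discrete distributions given as lists of probabilities."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Soft-target loss: push the student's softened distribution toward the teacher's.

    Scaling by temperature**2 keeps gradient magnitudes comparable across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return temperature ** 2 * kl_divergence(p_teacher, p_student)


teacher = [2.0, 1.0, 0.1]  # hypothetical teacher logits for three tokens
student = [0.5, 0.5, 0.5]  # an untrained student with no preference
print(distillation_loss(teacher, student))  # positive; 0 when they agree
```

Minimizing this loss over many inputs nudges the student toward the teacher's full probability distribution, not just its top answer, which carries more information per example than hard labels.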

The biggest problem with AI is that they’re illegally harvesting everything they can possibly get their hands on to feed it, they’re forcing it into places where people have explicitly said they don’t want it, and they’re sucking up massive amounts of energy and water to create it, undoing everyone else’s progress in reducing energy use, and raising prices for everyone else at the same time.

Oh, and it also hallucinates.

I don’t care much about them harvesting all that data, what I do care about is that despite essentially feeding all human knowledge into LLMs they are still basically useless.

Oh, and it also hallucinates.

Oh, and people believe the hallucinations.

In a Venn Diagram, I think your “illegally harvesting” complaint is a circle fully inside the “owned by the same few people” circle. AI could have been an open, community-driven endeavor, but now it’s just mega-rich corporations stealing from everyone else. I guess that’s true of literally everything, not just AI, but you get my point.

Eh I’m fine with the illegal harvesting of data. It forces the courts to revisit the question of what copyright really is and hopefully erodes the stranglehold that copyright has on modern society.

Let the companies fight each other over whether it’s okay to pirate every video on YouTube. I’m waiting.

I would agree with you if the same companies challenging copyright (which protects the intellectual and creative work of “normies”) weren’t also aggressively wielding copyright against the same people they are stealing from.

With the amount of corporate power tightly integrated with governmental bodies in the US (and now with DOGE dismantling oversight), I fear that whatever comes out of this is: humans own nothing, corporations own everything. The death of free, independent thought and creativity.

Everything you do, say and create is instantly marketable, sellable by the major corporations and you get nothing in return.

The world needs something a lot more drastic than a copyright reform at this point.

It’s seldom the same companies, though; there are two camps fighting each other, like Godzilla vs Mothra.

So far, the result seems to be “it’s okay when they do it”

Yeah… Nothing to see here, people, go home, work harder, exercise, and don’t forget to eat your vegetables. Of course, family first and god bless you.

AI scrapers illegally harvesting data are destroying smaller and open source projects. Copyright law is not the only victim

https://thelibre.news/foss-infrastructure-is-under-attack-by-ai-companies/

That article is overblown. People need to configure their websites to be more robust against traffic spikes, news at 11.

Disrespecting robots.txt is bad netiquette, but honestly this sort of gentleman’s agreement is always prone to cheating. At the end of the day, when you put something on the net for people to access, you have to assume anyone (or anything) can try to access it.
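robots.txt is purely advisory: a crawler has to choose to check it. Here is a minimal sketch of what a well-behaved crawler would do, using Python's standard-library `urllib.robotparser`. The robots.txt contents and the `ExampleBot` user-agent are hypothetical, and the file is parsed from a string rather than fetched over the network.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for an example site.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that was already fetched (no network access here)."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


rp = build_parser(ROBOTS_TXT)
agent = "ExampleBot"  # hypothetical crawler name
for url in ("https://example.org/private/report", "https://example.org/docs/"):
    print(url, rp.can_fetch(agent, url))
print("crawl delay:", rp.crawl_delay(agent))
```

Nothing forces a scraper to run this check or honor the crawl delay, which is exactly why the arrangement only works as a gentleman's agreement.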

You think Red Hat & friends are all just bad sysadmins? SourceHut maybe…

I think there’s a bit of both: poorly optimized/antiquated sites and a gigantic spike in unexpected and persistent bot traffic. The typical mitigations do not work anymore.

Not every site is, or should have to be, optimized for hundreds of thousands of requests every day or more. Just because they can be doesn’t mean it’s worth the time, effort, or cost.

In this case they just need to publish the code as a torrent. You wouldn’t set up a crawler if all the data were in a torrent swarm.

I’ve heard that BitTorrent doesn’t work well when the data is frequently updated or changed.

I might be totally wrong, I’ve only ever used it once when downloading Wikipedia

They’re not illegally harvesting anything. Copyright law is all about distribution. As much as everyone loves to think that when you copy something without permission you’re breaking the law the truth is that you’re not. It’s only when you distribute said copy that you’re breaking the law (aka violating copyright).

All those old school notices (e.g. “FBI Warning”) are 100% bullshit. Same for the warning the NFL spits out before games. You absolutely can record it! You just can’t share it (or show it to more than a handful of people but that’s a different set of laws regarding broadcasting).

I download AI (image generation) models all the time. They range in size from 2GB to 12GB. You cannot fit the petabytes of data they used to train the model into that space. No compression algorithm is that good.
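The size argument can be made concrete with simple arithmetic. The sketch assumes 1 PB of training data and about 2 billion training images; both are illustrative assumptions, not figures for any specific model. It shows a model file can't hold its training set verbatim, though on its own it doesn't rule out a model reproducing a small number of heavily duplicated items.

```python
MODEL_BYTES = 12e9     # upper end of the 2-12 GB range mentioned above
TRAINING_BYTES = 1e15  # assumption: 1 PB of training data
N_IMAGES = 2e9         # assumption: about 2 billion training images


def compression_ratio(training_bytes: float, model_bytes: float) -> float:
    """How many times larger the training data is than the model file."""
    return training_bytes / model_bytes


def bytes_per_item(model_bytes: float, n_items: float) -> float:
    """Model bytes available per training item, if every item were 'stored'."""
    return model_bytes / n_items


print(f"{compression_ratio(TRAINING_BYTES, MODEL_BYTES):,.0f}x smaller than the data")
print(f"{bytes_per_item(MODEL_BYTES, N_IMAGES):.1f} bytes per training image")
```

A handful of bytes per image is far less than even a tiny thumbnail needs, which is the intuition behind the comment.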

The same is true for LLMs, RVC (audio models) and similar models/checkpoints. I mean, think about it: if AI were illegally distributing millions of copyrighted works to end users, they’d have to be including it all in those files somehow.

Instead of thinking of an AI model like a collection of copyrighted works think of it more like a rough sketch of a mashup of copyrighted works. Like if you asked a person to make a Godzilla-themed My Little Pony and what you got was that person’s interpretation of what Godzilla combined with MLP would look like. Every artist would draw it differently. Every author would describe it differently. Every voice actor would voice it differently.

Those differences are the equivalent of the random seed provided to AI models. If you throw something at a random number generator enough times, you could, in theory, get the works of Shakespeare, especially if you ask it to write something just like Shakespeare. However, that doesn’t mean the AI model literally copied his works. It’s just making its best guess (it’s literally guessing! That’s how they work!).
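A toy illustration of the seed point: the sketch samples words from a fixed, hypothetical probability distribution with Python's `random.Random`. The same seed reproduces the same output; a different seed usually gives a different one. A real model recomputes the distribution for every token based on the text so far, which this sketch leaves out.

```python
import random

# Toy next-word distribution (hypothetical words and probabilities).
VOCAB = ["thee", "thou", "art", "more", "lovely", "summer"]
PROBS = [0.10, 0.15, 0.25, 0.20, 0.20, 0.10]


def sample_words(n, seed):
    """Draw n words from the fixed distribution using a seeded generator."""
    rng = random.Random(seed)  # same seed -> same sequence of draws
    return rng.choices(VOCAB, weights=PROBS, k=n)


print(sample_words(8, seed=1))
print(sample_words(8, seed=1))  # identical to the previous line
print(sample_words(8, seed=2))  # usually different
```

Every output is a weighted guess drawn from learned probabilities; nothing in the sampling step looks anything up from a stored copy of a text.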

The problem with being like… super pedantic about definitions, is that you often miss the forest for the trees.

Illegal or not, seems pretty obvious to me that people saying illegal in this thread and others probably mean “unethically”… which is pretty clearly true.

I wasn’t being pedantic. It’s a very fucking important distinction.

If you want to say “unethical” you say that. Law is an orthogonal concept to ethics. As anyone who’s studied the history of racism and sexism would understand.

Furthermore, it’s not clear that what Meta did actually was unethical. Ethics is all about how human behavior impacts other humans (or other animals). If a behavior has a direct negative impact that’s considered unethical. If it has no impact or positive impact that’s an ethical behavior.

What impact did OpenAI, Meta, et al have when they downloaded these copyrighted works? They were not read by humans–they were read by machines.

From an ethics standpoint that behavior is moot. It’s the ethical equivalent of trying to measure the environmental impact of a bit traveling across a wire. You can go deep down the rabbit hole and calculate the damage caused by mining copper and laying cables but that’s largely a waste of time because it completely loses the narrative that copying a billion books/images/whatever into a machine somehow negatively impacts humans.

It is not the copying of this information that matters. It’s the impact of the technologies they’re creating with it!

That’s why I think it’s very important to point out that copyright violation isn’t the problem in these threads. It’s a path that leads nowhere.

Just so you know, still pedantic.

The irony of choosing the most pedantic way of saying that they’re not pedantic is pretty amusing though.

The issue I see is that they are using the copyrighted data, then making money off that data.

…in the same way that someone who’s read a lot of books can make money by writing their own.

Do you know someone who’s read a billion books and can write a new (trashy) book in 5 mins?

No, but humans have differences in scale also. Should a person gifted with hyper-fast reading and writing ability be given less opportunity than a writer who takes a year to read a book and a decade to write one? Imo if the argument comes down to scale, it’s kind of a shitty argument. Is the underlying principle faulty or not?

Part of my point is that a lot of everyday rules do break down at large scale. Like, ‘drink water’ is good advice - but a person can still die from drinking too much water. And having a few people go for a walk through a forest is nice, but having a million people go for a walk through a forest is bad. And using a couple of quotes from different sources to write an article for a website is good; but using thousands of quotes in an automated method doesn’t really feel like the same thing any more.

That’s what I’m saying. A person can’t physically read billions of books, or do the statistical work to put them together to create a new piece of work from them. And since a person cannot do that, no law or existing rule currently takes that possibility into account. So I don’t think we can really say that a person is ‘allowed to’ do that. Rather, it’s just an undefined area. A person simply cannot physically do it, and so the rules don’t have to consider it. On the other hand, computer systems can now do it. And so rather than pointing to old laws, we have to decide as a society whether we think that’s something we are ok with.

I don’t know what the ‘best’ answer is, but I do think we should at least stop to think about it carefully; because there are some clear downsides that need to be considered - and probably a lot of effects that aren’t as obvious which should also be considered!

I hate to be the one to break it to you but AIs aren’t actually people. Companies claiming that they are “this close to AGI” doesn’t make it true.

The human brain is an exception to copyright law. Outsourcing your thinking to a machine that doesn’t actually think makes this something different and therefore should be treated differently.

This is an interesting argument that I’ve never heard before. Isn’t the question more about whether AI-generated art counts as a “derivative work”, though? I don’t use AI at all, but from what I’ve read, they can generate work that includes watermarks from the source data; would that not strongly imply that these are derivative works?

If you studied loads of classic art then started making your own would that be a derivative work? Because that’s how AI works.

The presence of watermarks in output images is just a side effect of the prompt and its similarity to training data. If you ask for a picture of an Olympic swimmer wearing a purple bathing suit and it turns out that only a hundred or so images in the training match that sort of image–and most of them included a watermark–you can end up with a kinda-sorta similar watermark in the output.

It is absolutely 100% evidence that they used watermarked images in their training. Is that a problem, though? I wouldn’t think so since they’re not distributing those exact images. Just images that are “kinda sorta” similar.

If you try to get an AI to output an image that matches someone else’s image nearly exactly… is that the fault of the AI or the end user, specifically asking for something that would violate another’s copyright (with a derivative work)?

Sounds like a load of techbro nonsense.

By that logic mirroring an image would suffice to count as derivative work since it’s “kinda sorta similar”. It’s not the original, and 0% of pixels match the source.

“And the machine, it learned to flip the image by itself! Like a human!”

It’s a predictive keyboard on steroids; let’s not pretend that it can create anything but noise with no input.

It varies massively depending on the kind of ML.

For example, things like voice generation or object recognition can absolutely be done with entirely legit training datasets: literally pay a bunch of people to read some texts and you can train a voice generation engine with it, and the work in object recognition is mainly tagging what’s in the images on top of a ton of easily made images of things - a researcher can literally go around taking photos to make their dataset.

Image generation, on the other hand, not so much - you can only go so far with plain photos a researcher can take on the street, and these models tend to rely a lot on the artistic work of people who never authorized its use for training. And LLMs clearly cannot be built without scraping billions of pieces of actual work from billions of people.

Of course, what we tend to talk about here when we say “AI” is LLMs, which are IMHO the worst of the bunch.

Well, the harvesting isn’t illegal (yet), and I think it probably shouldn’t be.

It’s scraping, and it’s hard to make that part illegal without collateral damage.

But that doesn’t mean we should do nothing about these AI fuckers.

In the words of Cory Doctorow:

Web-scraping is good, actually.

Scraping against the wishes of the scraped is good, actually.

Scraping when the scrapee suffers as a result of your scraping is good, actually.

Scraping to train machine-learning models is good, actually.

Scraping to violate the public’s privacy is bad, actually.

Scraping to alienate creative workers’ labor is bad, actually.

We absolutely can have the benefits of scraping without letting AI companies destroy our jobs and our privacy. We just have to stop letting them define the debate.

I see the “AI is using up massive amounts of water” being proclaimed everywhere lately, however I do not understand it, do you have a source?

My understanding is this probably stems from people misunderstanding data center cooling systems. Most of these systems are closed loop so everything will be reused. It makes no sense to “burn off” water for cooling.

data centers are mainly air-cooled, and two innovations contribute to the water waste.

the first one was “free cooling”, where instead of using a heat exchanger loop you just blow (filtered) outside air directly over the servers and out again, meaning you don’t have to “get rid” of waste heat, you just blow it right out.

the second one was increasing the moisture content of the air on the way in with what is basically giant carburettors in the air stream. the wetter the air, the more heat it can take from the servers.

so basically we now have data centers designed like cloud machines.
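The water use in the design described above comes from evaporation: water evaporating into the incoming air absorbs heat, and that water leaves with the exhaust instead of being recirculated. Here is a rough upper-bound sketch of how much water that takes, assuming all of the heat is removed by evaporation and a latent heat of about 2.45 MJ/kg (water near room temperature). Real systems remove part of the heat in other ways and also lose water to blowdown.

```python
LATENT_HEAT_J_PER_KG = 2.45e6  # approx. latent heat of vaporization of water near 20 °C
JOULES_PER_MWH = 3.6e9         # 1 MWh = 3.6 billion joules


def water_evaporated_kg(heat_mwh: float) -> float:
    """Kilograms (roughly litres) of water that must evaporate to absorb heat_mwh of heat."""
    return heat_mwh * JOULES_PER_MWH / LATENT_HEAT_J_PER_KG


print(f"{water_evaporated_kg(1.0):,.0f} kg of water per MWh of heat")
```

That is on the order of one and a half tonnes of water per megawatt-hour of heat removed this way, and it scales linearly with the heat load.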

Edit: Also, apparently the water they use becomes contaminated and they use mainly potable water. here’s a paper on it

Also, the energy for those data centers has to come from somewhere, and non-renewable options (gas, oil, nuclear generation) also use a lot of water, both in the generation process itself (they all rely on using the fuel to generate steam, which powers the turbines that generate the electricity) and for cooling.

steam that runs turbines tends to be recirculated. that’s already in the paper.

nuclear isn’t that bad ngl France uses a ton of it

and the wastewater that nuclear power plants make is barely radioactive at all

We spend energy on the most useless shit, so why are people suddenly using it as an argument against AI? Have you ever seen someone complaining about Pixar wasting energy to render their movies? Or 3D studios rendering TV ads?

Oh, and it also hallucinates.

This is arguably a feature depending on how you use it. I’m absolutely not an AI acolyte. It’s highly problematic in every step. Resource usage. Training using illegally obtained information. This wouldn’t necessarily be an issue if people who aren’t tech broligarchs weren’t routinely getting their lives destroyed for this, and if the people creating the material being used for training also weren’t being fucked…just capitalism things I guess. Attempts by capitalists to cut workers out of the cost/profit equation.

If you’re using AI to make music, images or video… you’re depending on those hallucinations.

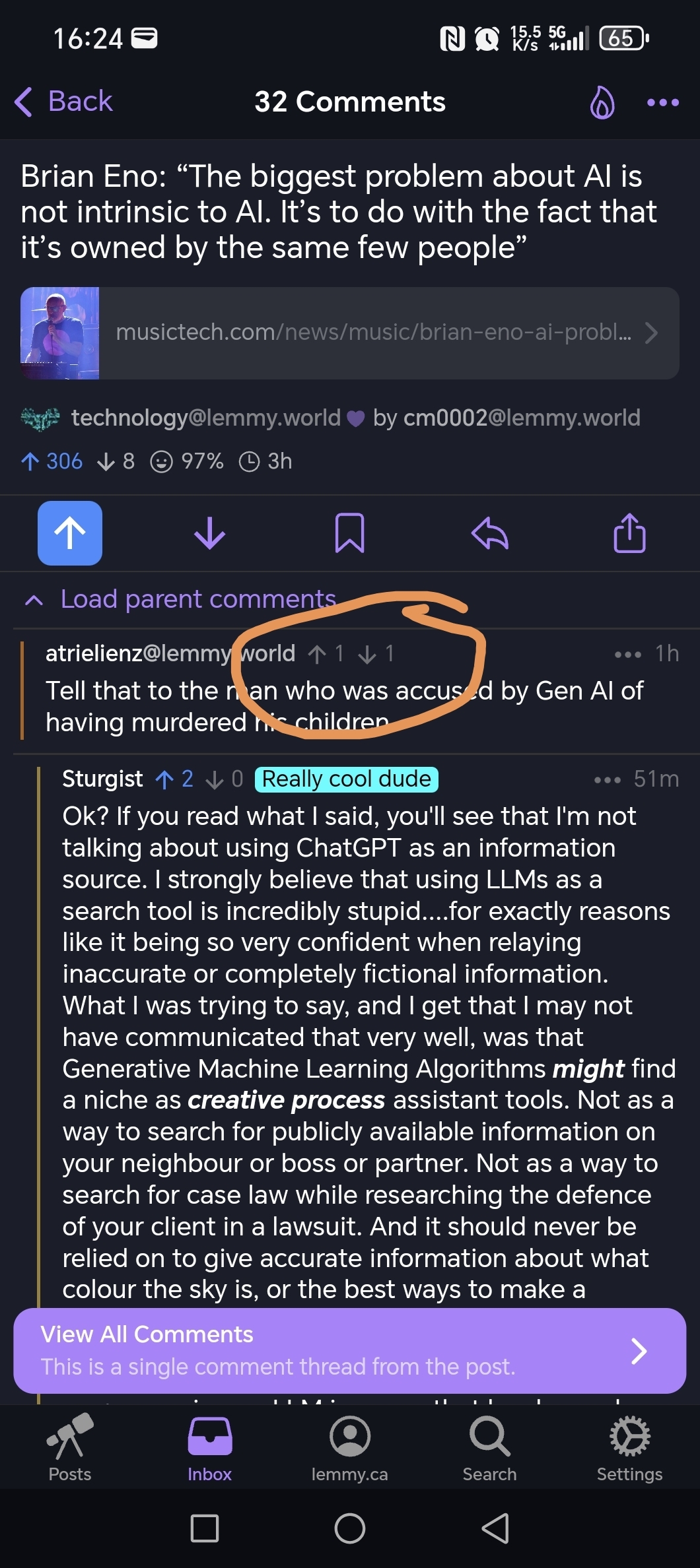

I run a Stable Diffusion model on my laptop. It’s kinda neat. I don’t make things for a profit, and now that I’ve played with it a bit I’ll likely delete it soon. I think there’s room for people to locally host their own models, preferably trained with legally acquired data, to be used as a tool to assist with the creative process. The current monetisation model for AI is fuckin criminal…

Tell that to the man who was accused by Gen AI of having murdered his children.

Ok? If you read what I said, you’ll see that I’m not talking about using ChatGPT as an information source. I strongly believe that using LLMs as a search tool is incredibly stupid…for exactly reasons like it being so very confident when relaying inaccurate or completely fictional information.

What I was trying to say, and I get that I may not have communicated that very well, was that Generative Machine Learning Algorithms might find a niche as creative process assistant tools. Not as a way to search for publicly available information on your neighbour or boss or partner. Not as a way to search for case law while researching the defence of your client in a lawsuit. And it should never be relied on to give accurate information about what colour the sky is, or the best ways to make a custard using gasoline.

Does that clarify things a bit? Or do you want to carry on using an LLM in a way that has been shown to be unreliable, at best, as some sort of gotcha…when I wasn’t talking about that as a viable use case?

lol. I was just saying in another comment that Lemmy users 1. Assume a level of knowledge of the person they are talking to or interacting with that may or may not be present in reality, and 2. Are often intentionally mean to the people they respond to, so much so that they seem to take offense on purpose to even the most innocuous of comments, and here you are, downvoting my valid point, which is that regardless of whether we view it as a reliable information source, that’s what it is being marketed as, and results like this harm both the population using it and the people who have found good uses for it. And no, I don’t actually agree that it’s good for creative processes as assistance tools, and a lot of that has to do with how you view the creative process and how I view it differently. Any other tool at the very least has a known quantity of what went into it, and Generative AI does not have that benefit and therefore is problematic.

and here you are, downvoting my valid point

Wasn’t me actually.

valid point

You weren’t really making a point in line with what I was saying.

regardless of whether we view it as a reliable information source, that’s what it is being marketed as and results like this harm both the population using it, and the people who have found good uses for it. And no, I don’t actually agree that it’s good for creative processes as assistance tools and a lot of that has to do with how you view the creative process and how I view it differently. Any other tool at the very least has a known quantity of what went into it and Generative AI does not have that benefit and therefore is problematic.

This is a really valid point, and if you had taken the time to actually write this out in your first comment, instead of “Tell that to the guy that was expecting factual information from a hallucination generator!” I wouldn’t have reacted the way I did. And we’d be having a constructive conversation right now. Instead you made a snide remark, seemingly (personal opinion here, I probably can’t read minds) intending it as an invalidation of what I was saying, and then being smug about my taking offence to you not contributing to the conversation and instead being kind of a dick.

Not everything has to have a direct correlation to what you say in order to be valid or add to the conversation. You have a habit of ignoring parts of the conversation going around you in order to feel justified in whatever statements you make regardless of whether or not they are based in fact or speak to the conversation you’re responding to and you are also doing the exact same thing to me that you’re upset about (because why else would you go to a whole other post to “prove a point” about downvoting?). I’m not going to even try to justify to you what I said in this post or that one because I honestly don’t think you care.

It wasn’t you (you claim), but it could have been and it still might be you on a separate account. I have no way of knowing.

All in all, I said what I said. We will not get the benefits of Generative AI if we don’t 1. deal with the problems that are coming from it, and 2. Stop trying to shoehorn it into everything. And that’s the discussion that’s happening here.

because why else would you go to a whole other post to “prove a point” about downvoting?

It wasn’t you (you claim)

I do claim. I have an alt, didn’t downvote you there either. Was just pointing out that you were also making assumptions. And it’s all comments in the same thread, hardly me going to an entirely different post to prove a point.

We will not get the benefits of Generative AI if we don’t 1. deal with the problems that are coming from it, and 2. stop trying to shoehorn it into everything. And that’s the discussion that’s happening here.

I agree. And while I personally feel there’s already room for it in some people’s workflows, it is very clearly problematic in many ways, as I pointed out in my first comment.

I’m not going to even try to justify to you what I said in this post or that one because I honestly don’t think you care.

I do actually! Might be hard to believe, but I reacted the way I did because I felt your first comment was reductive, and intentionally trying to invalidate and derail my comment without actually adding anything to the discussion. That made me angry because I want a discussion. Not because I want to be right, and fuck you for thinking differently.

If you’re willing to talk about your views and opinions, I’d be happy to continue talking. If you’re just going to assume I don’t care, and don’t want to hear what other people think…then just block me and move on. 👍

COO > Return.

And yet, he released his latest album exclusively on Apple Music.

The difference is that he has the choice of not participating in that model, obviously.

I don’t really agree that this is the biggest issue; for me, the biggest issue is power consumption.

Large power consumption only happens because someone is willing to dump lots of capital into it so they can own it.

Oh you’re right, let me just tally up all the days where that isn’t the case…

carry the 2…

don’t forget weekends and holidays…

Oh! It’s every single day. It’s just an always and forever problem. Neat.

It’s nothing of the sort. If nobody had the capital to scale it through more power, then the research would be more focused on making it efficient.

That is a big issue, but excessive power consumption isn’t intrinsic to AI. You can run a reasonably good AI on your home computer.

The AI companies don’t seem concerned about the diminishing returns, though, and will happily spend 1000% more power to gain that last 10% of intelligence. In a competitive market, why wouldn’t they, when power is so cheap?

AI will become one of the most important inventions in human history. Apply it to healthcare, science, and finance, and the world will become a better place, especially in healthcare. Hey, artists and writers: you cannot stop intellectual evolution. AI is here to stay. All we need is a proven way to differentiate real art from AI art, such as an invisible watermark that can be scanned to reveal its true “raison d’être”. Sorry for going off topic, but I agree that AI should be more open to verification of its use of copyrighted material. Don’t expect compensation, though.

Apply it to healthcare, science, finances, and the world will become a better place, especially in healthcare.

That’s all kind of moot if we continue down the capitalist hellscape express. What good is an AI that can diagnose cancer if most people can’t afford access? What good is AI writing novels if our homes are destroyed by climate-change-induced disasters?

Those problems are mostly political, and AI isn’t going to fix them. The people that probably could be replaced with AI, the shitty “leaders” and such, are not going to voluntarily step down.

None of it is useful to those people right now.

The biggest problem with AI is that it’s the brute-force solution to complex problems.

Instead of trying to figure out what’s the most power efficient algorithm to do artificial analysis, they just threw more data and power at it.

Besides how often it’s wrong, by definition it will never be as accurate or as efficient as actual thinking.

It’s the solution you come up with the last day before the project is due, because you know it will technically pass and you’ll get a C.

It’s moronic. Currently, decision makers don’t really understand what to do with AI or how it will realistically evolve over the next 10-20 years. So it’s getting pushed even into environments with zero-error policies, leading to horrible results, and any time savings are completely annihilated by the ensuing error correction and general troubleshooting. But maybe the latter will just gradually be dropped and customers will be told to “deal with it,” in the true spirit of enshittification.
