Author: Mohammed Estaiteyeh | Assistant Professor of Digital Pedagogies and Technology Literacies, Faculty of Education, Brock University

One recent study indicates that 78 per cent of Canadian students have used generative AI to help with assignments or study tasks. In China, authorities have even shut down AI apps during nationwide exams to prevent cheating.

The support structures and policies to guide students’ and educators’ responsible use of AI are often insufficient in Canadian schools. In a recent study, Canada ranked 44th in AI training and literacy out of 47 countries, and 28th among 30 advanced economies. Despite growing reliance on these technologies at home and in classrooms, Canada lacks a unified AI literacy strategy in K-12 education.

Without co-ordinated action, this gap threatens to widen existing inequalities and leave both learners and educators vulnerable. Canadian schools need a national AI literacy strategy that provides a framework for teaching students about AI tools and how to use them responsibly.

AI literacy is defined as:

“An individual’s ability to clearly explain how AI technologies work and impact society, as well as to use them in an ethical and responsible manner and to effectively communicate and collaborate with them in any setting.”

Step 1: AI is not your friend

Step 2: Do not use.

Step 3: Throw your electronics away, join Canada in negative per capita productivity growth.

I can’t tell if this is based or sarcastic

How about we tackle the environmental issues that AI is causing before talking about using AI “responsibly”?

Any use of AI in its current form is not responsible or ethical. It’s an environmental disaster and threatens to harm the collective intelligence of every citizen.

AI is another way to lower the intelligence of the general population and keep them under corporate control.

There could be a component of the training. E.g. use a calculator to compute math instead of AI.

What intelligence is there? We can’t even build homes for people given the overbearing regulation by mouth breathers. AI has the potential to eradicate all this bureaucracy.

> AI has the potential to eradicate all this bureaucracy

Fuck that is the most ridiculous take.

AI is only going to dumb down the general public while obfuscating data harvesting and manipulating opinions to the will of the owning class.

You have an optimistic opinion of aggregate intelligence as it is, then.

They literally make people dumber:

https://www.theregister.com/2025/06/18/is_ai_changing_our_brains/

They are a massive privacy risk:

https://www.youtube.com/watch?v=AyH7zoP-JOg&t=3015s

And they are being used to push fascist ideologies into every aspect of the internet:

https://newsocialist.org.uk/transmissions/ai-the-new-aesthetics-of-fascism/

They are a massive environmental disaster:

https://news.mit.edu/2025/explained-generative-ai-environmental-impact-0117

So no. I’m not optimistic.

Calculators also made people dumber, but the world goes on with technology elevating people’s ability to be productive despite being dumber. Trying to stop technological progress is never going to be a good thing. You can fearmonger all you want that it will turn us all fascist, but you’re no different from those who complained about cars or the internet.

If calculators routinely gave the wrong answer this would be more compelling.

We better do something like China does with the great firewall, before the sky falls.

How long have you been under the influence of AI? ’Cause I think you are starting to show symptoms.

You see, a calculator actually functions and doesn’t make shit up. Pretty simple concept.

The technological progress LLMs represent has come to completion. They’re a technological dead end. They have no practical application because of hallucinations, and hallucinations are baked into the very core of how they work. Any further progress will come from experts learning from the successes and failures of LLMs, abandoning them, and building entirely new AI systems.

AI as a general field is not a dead end, and it will continue to improve. But we’re nowhere near the AGI that tech CEOs keep promising LLMs are so close to.

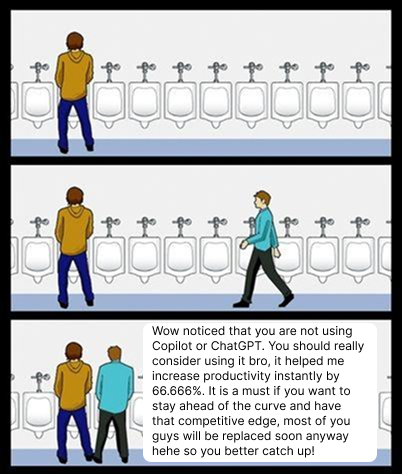

People who are pro-AI seem so weird and dystopian, people who are anti-AI seem logical and reasonable, but my employer requires us to use AI, and I’ve even been forced to work on multiple AI projects recently. It does seem it’s unavoidable unfortunately, but honestly Copilot has given me some of the most useful autocompletions I’ve ever had, especially for tedious things like logging, and I’ve had good luck with ChatGPT assisting with tedious things as well like writing both scaffolding and queries. Considering all of that, I’m torn on AI. I am afraid of the consequences of AI, the fallout of all of it, but I also do find AI/LLMs useful in my day-to-day job and I’m required to use them for my day-to-day job as well.

If AI literacy requires an individual to be able to clearly explain how AI technologies work… then even data scientists are AI illiterate.

For a moment there I thought this was an Onion article; AI propagandists never cease to amaze me!

Learning about how AI works and what it is/isn’t good at is a good thing? It will most likely make kids use generative AI less, and be careful about what they use it for.

AI is an American hoax to win over China.

Too bad Canadians fell for it.

> AI is an American hoax to win over China.

What do you mean?

The AI race with China was artificially created to justify pouring private and public money into AI and turning it into an industry.