- cross-posted to:

- [email protected]


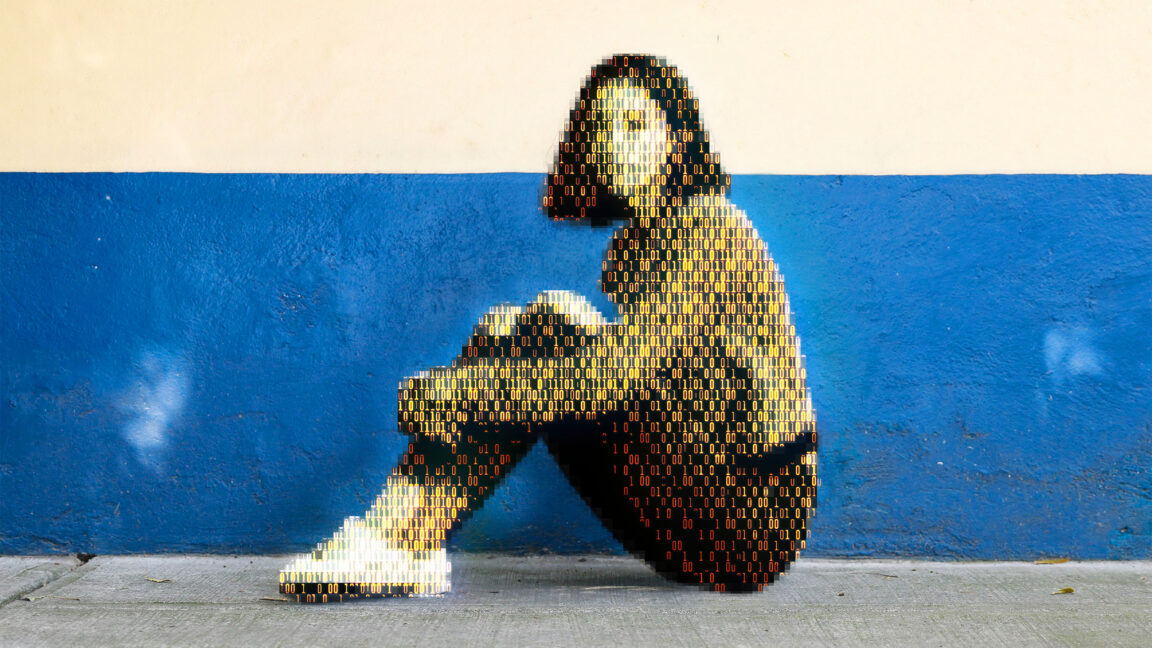

Today, a prominent child safety organization, Thorn, in partnership with a leading cloud-based AI solutions provider, Hive, announced the release of an AI model designed to flag unknown CSAM at upload. It’s the earliest AI technology striving to expose unreported CSAM at scale.

Not a single peep about false positives.

I’m sure it won’t be abused though. And if anyone does complain, just get their electronics seized and checked, because they must be hiding something!

I take this to mean it is at least 1% accurate lol.

Reminds me of the A-cup breasts porn ban in Australia a few years ago, because only pedos would watch that

This sort of rhetoric really bothers me, especially when you consider that there are real adult women with disorders that make them appear prepubescent. Whether that’s appropriate for pornography is a different conversation, but the idea that anyone interested in them is a pedophile is really disgusting. That is a real, human, adult woman, and some people say anyone who wants to love them is a monster. Just imagine someone telling you that anyone who wants to love you is a monster and that they’re actually protecting you.

Aw man, I love all titties. Variety is the spice of life.

Believe it or not, straight to jail.

If this is the price I must pay, I will pay it, sir! No man should be deprived of privately viewing a consenting adult’s perfectly formed small tits. They can take my liberty, they can take my livelihood, but they will never take away my boner for puffy nipples on a small-chested half-Japanese woman!

What is the charge? Biting a breast? A succulent Chinese breast?

Not to mention the self image impact such things would have on women with smaller breasts, who (as I understand it) generally already struggle with poor self image due to breast size.

Clearly the state gives zero fucks about these women, or anyone else or even “the children”

Catholic Church is still around for a reason

Typically the state only cares about things they perceive as children.

Australia has a more general ban on selling or exhibiting hard porn, but it is legal to possess it. So it’s not just small boobs.

There was a porn studio that was prosecuted for creating CSAM. Brazil, I believe. Prosecutors claimed that the petite, A-cup woman was clearly underage. Their star witness was a doctor who testified that such underdeveloped breasts and hips clearly meant she was still going through puberty and couldn’t possibly be 18 or older. The porn star showed up to testify that she was in fact over 18 when they shot the film and brought all her identification, including her birth certificate and passport. She also said something to the effect that women come in all shapes and sizes and a doctor should know better.

I can’t find an article. All I’m getting is GOP Trump pedo nominees and Brazil laws on porn.

Pretty sure the adult star was lil Lupe. She was everywhere at the time because she did, indeed, look underage.