- cross-posted to:

- [email protected]


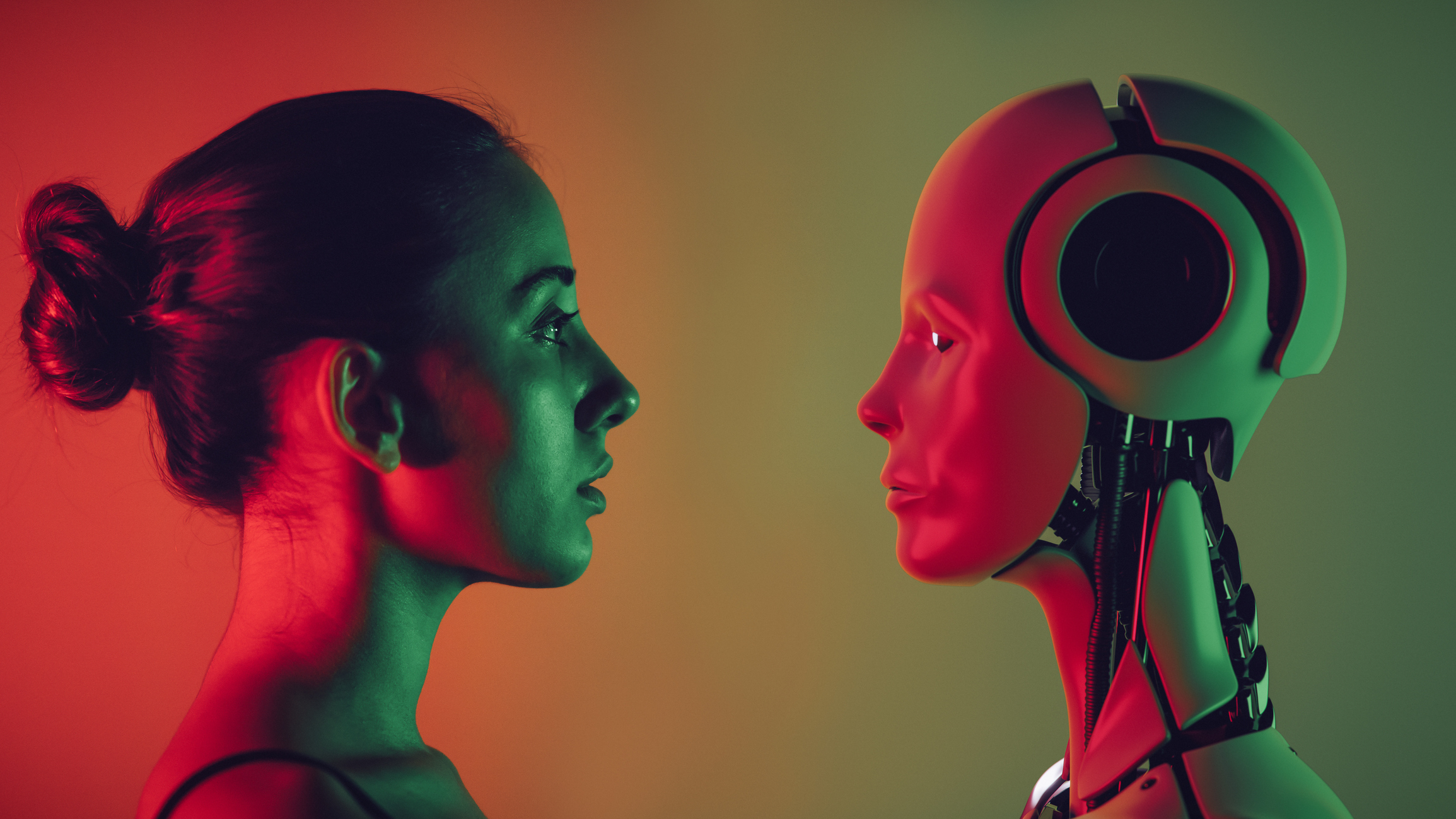

‘Nudify’ Apps That Use AI to ‘Undress’ Women in Photos Are Soaring in Popularity

It’s part of a worrying trend of non-consensual “deepfake” pornography being developed and distributed because of advances in artificial intelligence.

Is it? Usually photography in public places is legal.

Legal and moral are not the same thing.

Do you also think it’s immoral to do street photography?

I think it’s immoral to do street photography to sexualize the subjects of your photographs. I think it’s immoral to then turn that into pornography of them without their consent. I think it’s weird you don’t. If you can’t tell the difference between street photography and manipulating photos of people (in public or otherwise) into pornography, I can’t fuckin help you.

If you go to a park, take photos of people, then go home and masturbate to them you need to seek professional help.