OK, let me give a little bit of context. I will turn 40 in a couple of months, and I have been a C++ software developer for more than 18 years. I enjoy coding, and I enjoy writing “good” code: readable and so on.

However, over the last few months I have become really afraid for the future of the job I like, given the progress of artificial intelligence. Very often I can’t sleep at night because of this.

I fear that my job, while not completely disappearing, will become a very boring one consisting of debugging automatically generated code, or that the job will disappear altogether.

For now, I’m not using AI. A few colleagues do, but I don’t want to because, one, it removes a part of the coding I like and, two, I have the feeling that using it is sawing off the branch I’m sitting on, if you see what I mean. I fear that in the near future, people not using it will be fired because management sees them as less productive…

Am I the only one feeling this way? I have the feeling all tech people are enthusiastic about AI.

Here is an alternative Piped link:

Uncle Bob’s response when asked if AI will take over software engineering jobs (1m)

He has a good point. Specifying precisely what the program does is the actually difficult part, and current LLM systems won’t do it properly, since it means creating something new and requires actual thought and understanding.

🙄 no I’m sure you’re the only one

Your fear is justified insofar as some employers will definitely aim to reduce their workforce by implementing AI workflows.

If you have worked for the same employer all this time, perhaps you don’t know this, but a lot of employers do not give two shits about code quality. They want cheap and fast labour, and having fewer people churning out more is a good thing in their eyes, regardless of (long-term) quality. May sound cynical, but that is my experience.

My prediction is that the income gap will increase dramatically because good pay will be reserved for the truly exceptional few, while the rest will be confronted with yet another tool capitalists will use to increase profits.

Maybe very far down the line there is a blissful utopia where no one has to work anymore. But between then and now, AI would have to get a lot better. Until then it will be mainly used by corporations to justify hiring fewer people.

I wish your fear were justified! I’ll praise anything that can kill work.

Alas, we’re not there yet. Current AI is a glorified search engine. The problem it will have is that most code today is unmaintainable garbage. So for now AI can only produce this: unmaintainable garbage.

First the software industry needs to properly industrialise itself. Then there will be code to copy and reuse.

I’ll praise anything that can kill work under UBI. Without reform, I worry the rich will get richer, the poor will get even poorer and it leads to guillotines in the square.

Under capitalism the rich will get richer, and the poor poorer. That’s the whole point of it. Guillotines are a solution to get UBI.

Your last sentence is where I fear we will end up. The very wealthy would be wise to realise it and work on reform themselves.

I disagree that capitalism, at least in the way I understand it, always leads to the rich getting richer and the poor getting poorer. Many European countries have a happy medium that rewards risk-taking while looking after everyone. While most are still slowly getting worse on the Gini coefficient, that is driven pretty much by the top 0.1% pulling further and further away, while the rest of their societies actually stay roughly the same. So really they only have the top of the top of the top to deal with, whereas a country like the US has a much larger, all-encompassing inequality.

All the countries of Europe are going fascist one after the other. Why, if there is no problem?

Europe kept capitalism on a leash because communism was there to threaten it. Since the ’90s, capitalism has been unleashed and inequalities are rising. The USA didn’t have communism to tame its capitalism, because it was basically forbidden because of the Cold War.

Capitalism is entirely focused on having companies making a profit. If you don’t have strong states to tame it and redistribute the money, inequalities increase. It’s mathematical.

The rise of fascism has more to do with people’s impression of immigration than it does with capitalism.

Inequality in Europe isn’t rising if you disregard the top 0.1%. It’s the very very top that needs adjusting in Europe.

I agree with your last paragraph. Of course you need rules and redistribution. That doesn’t mean that capitalism, if well regulated, isn’t the most productive or the most effective at increasing wealth for everyone.

Fascism has everything to do with poverty and inequalities. And inequalities in Europe are rising a lot. Where do you get your information?

Capitalism is a sickness. It breeds crises that lead to war, and it lives off war and exploitation. But that’s beside the point.

I love LLMs! I’m using them to answer all sorts of bullshit to become a manager… like “here’s a bunch of notes, make me a manager’s review of Brian.” LOL.

I think Google is struggling to control the bullshit flood from the Internet and so AI is about to eat their lunch. Like I already decided that all AIs are just bullshit and the only really useful AIs are the ones that can actually search the Internet live. Perplexity AI was doing this for a while but someone chopped off its balls. I’ve been looking for a replacement ever since.

I also use it for help with Python, with Linux, with Docker, with SolidWorks and stuff around the house like taxes, kombucha, identifying plants and stupid stuff like that.

But I can definitely see the future when the police are replaced with robo-dogs with laser heads that can run at 120 mph and shoot holes through cars. The only benefit being that the hole doesn’t get infected and there’s no pool of blood. That future is coming. I’m going to start wearing aluminum reflective shield armor.

I think all jobs that are pure mental labor are under threat to a certain extent from AI.

It’s not really certain when real AGI is going to start to become real, but it certainly seems possible that it’ll be real soon, and if you can pay $20/month to replace a six-figure software developer then a lot of people are in trouble, yes. Like a lot of other revolutions like this that have happened, not all of it will be “AI replaces engineer”; some of it will be “engineer who can work with the AI and complement it to be productive will replace engineer who can’t.”

Of course that’s cold comfort once it reaches the point that AI can do it all. If it makes you feel any better, real engineering is much more difficult than a lot of other pure-mental-labor jobs. It’ll probably be one of the last to fall, after marketing, accounting, law, business strategy, and a ton of other white-collar jobs. The world will change a lot. Again, I’m not saying this will happen real soon. But it certainly could.

I think we’re right up against the cold reality that a lot of the systems that currently run the world don’t really care if people are taken care of and have what they need in order to live. A lot of people who aren’t blessed with education and the right setup in life have been struggling really badly for quite a long time no matter how hard they work. People like you and me who made it well into adulthood just being able to go to work and that be enough to be okay are, relatively speaking, lucky in the modern world.

I would say you’re right to be concerned about this stuff. I think starting to agitate for a better, more just world for all concerned is probably the best thing you can do about it. Trying to hold back the tide of change that’s coming doesn’t seem real doable without that part changing.

It’s not really certain when real AGI is going to start to become real, but it certainly seems possible that it’ll be real soon

What makes you say that? The entire field of AI has not made any progress towards AGI since its inception and if anything the pretty bad results from language models today seem to suggest that it is a long way off.

You would describe “recognizing handwritten digits some of the time” -> “GPT-4 and Midjourney” as no progress in the direction of AGI?

It hasn’t reached AGI or any reasonable facsimile yet, no. But up until a few years ago something like ChatGPT seemed completely impossible, and then a few big key breakthroughs happened, and now the impossible is possible. It seems by no means out of the question that a few more big breakthroughs could happen with AGI, especially with as much attention and effort as is going into the field now.

It’s not that machine learning isn’t making progress, it’s just that many people speculate that AGI will require a different way of looking at AI. Deep learning, while powerful, doesn’t seem like it can be adapted to something that would resemble AGI.

You mean, it would take some sort of breakthrough?

(For what it’s worth, my guess about how it works is to generally agree with you in terms of real sentience – just that I think (a) neither one of us really knows that for sure (b) AGI doesn’t require sentience; a sufficiently capable fakery which still has limitations can still upend the world quite a bit).

a sufficiently capable fakery which still has limitations can still upend the world quite a bit

Maybe, but we are essentially throwing petabyte-sized models and lots of compute power at it, and the results are at a level where a three-year-old would do better at not giving away that they don’t understand what they are talking about.

Don’t get me wrong, LLMs and the other recent developments in generative AI models are very impressive but it is becoming increasingly clear that the approach is maybe barely useful if we throw about as many computing resources at it as we can afford, severely limiting its potential applications. And even at that level the results are still so bad that you essentially can’t trust anything that falls out.

This is very far from being sufficient to fake AGI and has absolutely nothing to do with real AGI.

Yes, and most likely more of a paradigm shift. The way deep learning models work is largely around static statistical models. The main issue here isn’t the statistical side, but the static nature. For AGI this is a significant hurdle because as the world evolves, or simply these models run into new circumstances, the models will fail.

It’s largely the reason why autonomous vehicles have sorta hit a standstill. It’s the last 1% (what if an intersection is out, what if the road is poorly maintained, etc.) that is so hard for these models, as it requires “thought” and not just input/output.

LLMs have shown that large quantities of data seem to approach some sort of generalized knowledge, but researchers don’t necessarily agree on that (https://arxiv.org/abs/2206.07682). So if we can’t get to more emergent abilities, it’s unlikely AGI is on the way. But as you said, combining and interweaving these systems may get something close.

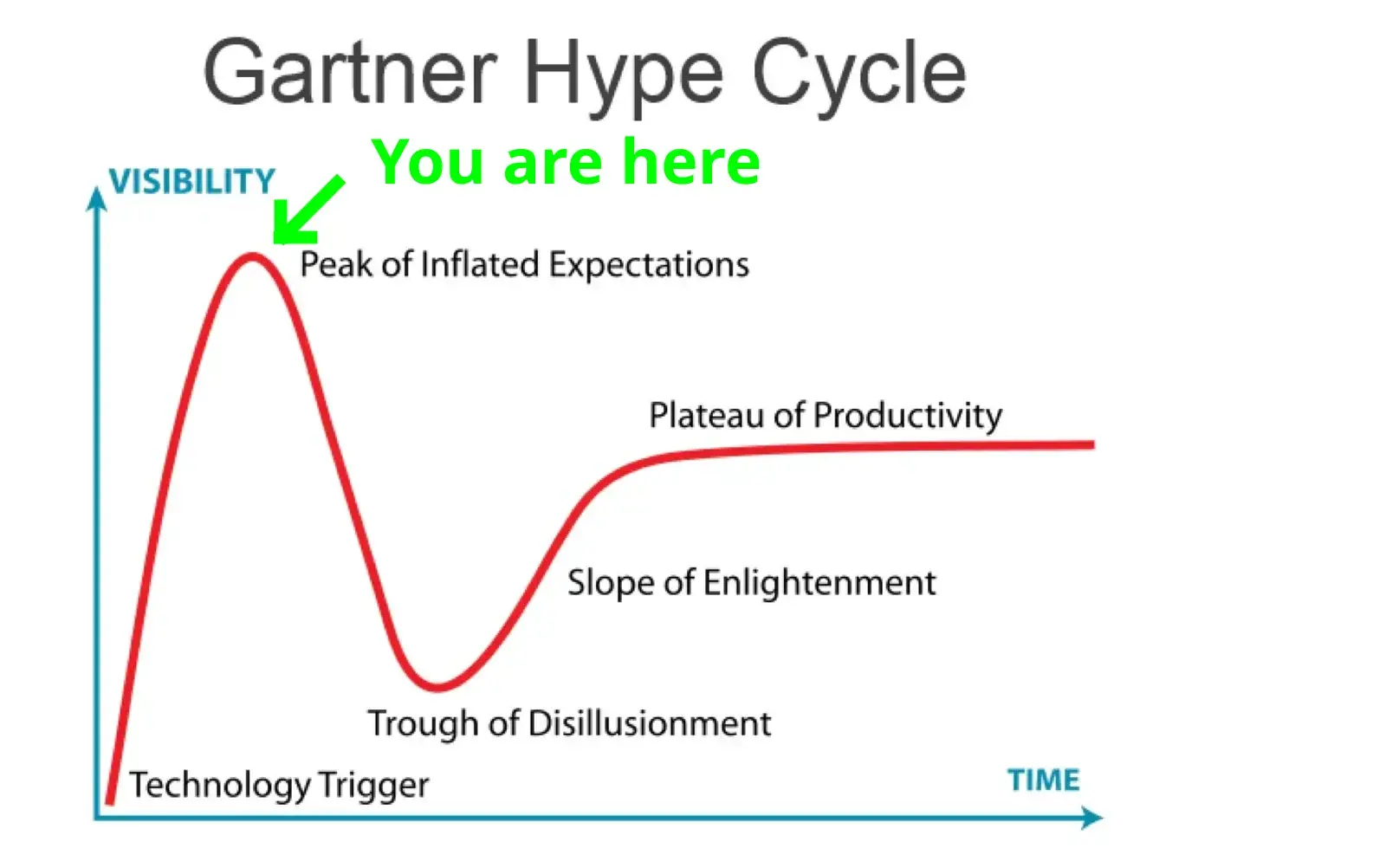

Currently at the crossroads between the trough of disillusionment and the slope of enlightenment

The trough of disillusionment is my favorite.

Betteridge’s law of headlines: No.

Kind of nice to see NFTs breaking through the floor at the trough of disillusionment, never to return.

I’m in IT and I don’t believe this will happen for quite a while, if at all. That said, I wouldn’t let this keep you up at night; it’s out of your control and worrying about it does you no favours. If AI really can replace people then we are all in this together and we will figure it out.

If you are afraid of the capabilities of AI, you should use it. Take one week to use ChatGPT heavily in your daily tasks. Take one week to use Copilot heavily.

Then you can make an informed judgement instead of being irrationally scared of some vague concept.

Yeah, not using it isn’t going to help you when the bottom line is all people care about.

It might take junior dev roles, and turn senior dev into QA, but that skillset will be key moving forward if that happens. You’re only shooting yourself in the foot by refusing to integrate it into your workflows, even if it’s just as an assistant/troubleshooting aid.

It’s not going to take junior dev roles. It’s going to transform the whole workflow and make dev jobs more like QA than actual development, since the difference between junior, mid-level and senior is often only the scope of their responsibility. (I’ve seen companies that make juniors do a full-stack senior’s job while on paper they were still juniors and their paycheck was somewhere between junior and mid-level, and such companies are the majority in rural areas.)

You’re certainly not the only software developer worried about this. Many people across many fields are losing sleep thinking that machine learning is coming for their jobs. Realistically automation is going to eliminate the need for a ton of labor in the coming decades and software is included in that.

However, I am quite skeptical that neural nets are going to be reading and writing meaningful code at large scales in the near future. If they did we would have much bigger fish to fry because that’s the type of thing that could very well lead to the singularity.

I think you should spend more time using AI programming tools. That would let you see how primitive they really are in their current state and learn how to leverage them for yourself. It’s reasonable to be concerned that employees will need to use these tools in the near future. That’s because these are new, useful tools and software developers are generally expected to use all tooling that improves their productivity.

If they did we would have much bigger fish to fry because that’s the type of thing that could very well lead to the singularity.

Bingo

I won’t say it won’t happen soon. And it seems fairly likely to happen at some point. But at that point, so much of the world will have changed because of the other impacts of having AI, as it was developing to be able to automate thousands of things that are easier than programming, that “will I still have my programming job” may well not be the most pressing issue.

For the short term, the primary concern is programmers who can work much faster with AI replacing those that can’t. SOCIAL DARWINISM FIGHT LET’S GO

I think you should spend more time using AI programming tools. That would let you see how primitive they really are in their current state and learn how to leverage them for yourself.

I agree, sosodev. I think it would be wise to at least be aware of modern A.I.'s current capabilities and inadequacies, because honestly, you gotta know what you’re dealing with.

If you ignore and avoid A.I. outright, every new iteration will come as a complete surprise, leaving you demoralized and feeling like shit. More importantly, there will be less time for you to adapt because you’ve been ignoring it when you could’ve been observing and planning. A.I. currently does not have that advantage, OP. You do.

I’m gonna sum up my feelings on this with a (probably bad) analogy.

AI taking software developer jobs is the same thinking as microwaves taking chefs’ jobs.

They’re both just tools to help you achieve the same goal easier/faster. And sometimes the experts will decide to forgo the tool and do it by hand for better quality control, or because of high complexity that the tool can’t do a good job at.

As a welder, I’ve been hearing for 20 years that “robots are going to replace you” and “automation is going to put you out of a job” yadda yadda. None of you code monkeys gave a fuck about me and my job, but now it’s a problem because it affects you and your paycheck? Fuck you lmao good riddance to bad garbage.

Weirdly hostile, but ok. It’s like any other tool that can be used to accelerate a process. Hopefully at some point it’s useful enough to streamline the minutiae of boring tasks that a competent intern could do. Not sure who is specifically targeting welders??

If it frees up your time to focus on more challenging stuff or stuff you enjoy, isn’t that a good thing? Folks are dynamic and will adjust, as we always have.

Don’t think there’s a good excuse to come at someone with animosity over this topic.

So far it is mainly an advanced search engine; someone still needs to know what to ask it, interpret the results and correct them. Then there’s the task of fitting it into an existing solution / landscape.

Then there’s the 50% of non-coding tasks you have to perform once you’re no longer a junior. I think it’ll be mainly useful for getting developers with less experience productive faster, but it will require more oversight from experienced devs.

At least for the way things are developing at the moment.

Thought about this some more so thought I’d add a second take to more directly address your concerns.

As someone in the film industry, I am no stranger to technological change. Editing in particular has radically changed over the last 10 to 20 years. There are a lot of things I used to do manually that are now automated. Mostly what it’s done is lower the barrier to entry and speed up my job after a bit of pain learning new systems.

We’ve had auto-coloring tools since before I began and colorists are still some of the highest paid folks around. That being said, expectations have also risen. Good and bad on that one.

Point is, a lot of times these things tend to simplify/streamline lower level technical/tedious tasks and enable you to do more interesting things.

Yeh