I don’t bother using things like Copilot or other AI tools like ChatGPT. I mean, what they CAN give you correctly is pretty cool, and the new demo floored me.

But I prefer just using the image generators like DALL-E and Stable Diffusion to make funny images or a new profile picture on Steam.

But this example here? Good god I hope this doesn’t become the norm…

These text-generation LLMs are good for generating text. I use them to write better emails or listings or something.

I had to do a presentation for work a few weeks ago. I asked Copilot to generate an outline for a presentation on the topic.

It spat out a heading and a few sections with details on each. It was generic enough, but it gave me the structure I needed to get started.

I didn’t dare ask it for anything factual.

Worked a treat.

You can ask these LLMs to continue filling out the outline too. They just generate a bunch of generic points and you can erase or fill in the details.

That’s how I used it to write cover letters for job applications. I feed it my resume and the job listing and it puts something together. I’ve got to do a lot of editing and sometimes it just makes up experience, but it’s faster than trying to write it myself.

This is definitely different from using DALL-E to make funny images. I’m on a thread in another forum that is (mostly) dedicated to AI images of Godzilla in silly situations and doing silly things. No one is going to take any advice from that thread apart from “making Godzilla do silly things is amusing and worth a try.”

Just don’t use Google.

Why people still use it is beyond me.

The abusive adware company can still sometimes kill it with vague searches.

(Still too lazy to properly catalog the daily occurrences such as above.)

SearXNG proxying Google still isn’t as good sometimes for some reason (maybe search bubbling even in private browsing w/VPN). Might pay for search someday to avoid falling back to Google.

Because Google has literally poisoned the internet by becoming the de facto SEO target. Even if Google were to suddenly disappear, everything is so optimized for Google’s algorithm that any replacements are just going to favor the SEO already done by everyone.

I learned the term Information Kessler Syndrome recently.

Now you have too. Together we bear witness to it.

How do you guys get these AI things? I don’t have such a thing when I search using Google.

I probably have it blocked somewhere on my desktop, because it never happens on my desktop, but it happens on my Pixel 4a pretty regularly.

&udm=14 baybee
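
For anyone who hasn’t seen it: `udm=14` is a query parameter that switches Google to its plain “Web” results tab, which (as far as I know, and at the time of writing) leaves out the AI overview. A rough sketch of building such a URL in Python; the helper name `web_only_search_url` is made up for illustration, and Google could change or drop the parameter at any time.

```python
from urllib.parse import urlencode


def web_only_search_url(query: str) -> str:
    """Build a Google search URL that asks for the plain "Web" tab.

    Assumption: udm=14 keeps selecting the Web filter; this is observed
    behaviour, not a documented API.
    """
    params = {"q": query, "udm": "14"}
    return "https://www.google.com/search?" + urlencode(params)


print(web_only_search_url("glue on pizza"))
```

Most browsers let you save a URL like this as a custom search engine, with `%s` in place of the query.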

I get them pretty regularly using the Google search app on my Android.

Gmail has something like it too with the summary bit at the top of Amazon order emails. Had one the other day that said I ordered 2 new phones, which freaked me out. It’s because there were ads for phones in the order receipt email.

IIRC Amazon specifically doesn’t mention the products you’ve ordered in its emails, to avoid Google being able to scrape product and order info from them for its own purposes via Gmail.

Well to be fair the OP has the date shown in the image as Apr 23, and Google has been frantically changing the way the tool works on a regular basis for months, so there’s a chance they resolved this insanity in the interim. The post itself is just ragebait.

*not to say that Google isn’t doing a bunch of dumb shit lately, I just don’t see this particular post from over a month ago as being as rage inducing as some others in the community.

I believe it’s US-only for now

Thank god

Of course you should not trust everything you see on the internet.

Be cautious, and when you see something suspicious do a Google search to find more reliable sources.

Oh … Wait !

Sadly there’s really no other search engine with a database as big as Google. We goofed by heavily relying on Google.

Not yet! But you can make a difference to that… https://yacy.net/

Kagi is pretty awesome. I never directly use Google search on any of my devices anymore, been on Kagi for going on a year.

I just started the Kagi trial this morning, and so far I’m impressed by how accurate and fast it is. Do you find 300 searches is enough or do you pay for unlimited?

Interesting… sadly paid service.

I use Perplexity, I just have to get into the habit of not going straight to Google for my searches.

I do think it’s worth the money however, especially since it allows you to customize your search results by white-/blacklisting sites and making certain sites rank higher or lower based on your direct feedback. Plus, I like their approach to openness and considerations on how to improve searching without bogging down the standard search.

Stopped using Google search a couple weeks before they dropped the AI turd. Glad I did.

What do you use now?

I work in IT, and between the advent of “agile” methodologies meaning lots of documentation is out of date as soon as it’s approved for release, and AI results more likely to be invented instead of regurgitated from forum posts, it’s getting progressively more difficult to find relevant answers to weird one-off questions than it used to be. This would be less of a problem if everything was open source and we could just look at the code, but most of the vendors corporate America uses don’t subscribe to that set of values, because “Mah intellectual properties” and stuff.

Couple that with tech sector cuts and outsourcing of vendor support and things are getting hairy in ways AI can’t do anything about.

Not who you asked but I also work IT support and Kagi has been great for me.

I started with their free trial set of searches and that solidified it.

Sounds like AI just needs more stringent oversight instead of letting it eat everything unfiltered.

DuckDuckGo, Kagi, and SearXNG are the ones I hear about the most.

DDG is basically a (supposedly) privacy-conscious front-end for Bing. SearXNG is an aggregator. Kagi is the only one of those three that uses its own index. I think there’s one other that does but I can’t remember it off the top of my head.

I’ve had similar issues with Copilot where it seemingly pulls information out of its ass. I use it to do fact-finding about services the company I work for is considering, and even when I specify “use only information found on whateveritis.com” it still occasionally gives an answer I can’t verify in their docs. Still better than manually searching a bunch of knowledge articles myself, but it is annoying.

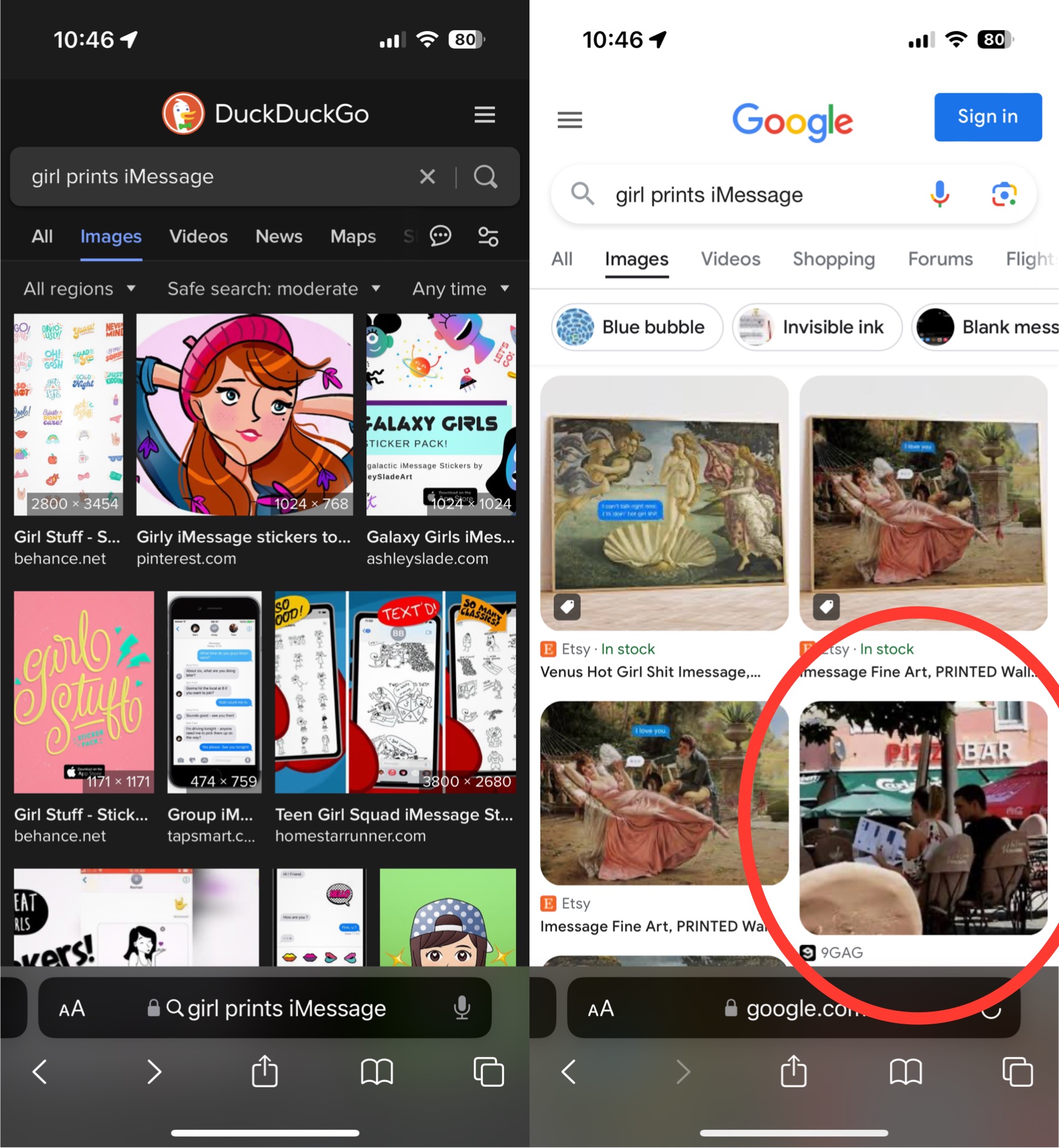

I always try to replicate these results, because the majority of them are fake. For this one in particular I don’t get any AI results, which is interesting, but inconclusive

How would you expect to recreate them when the models are given random perturbations such that the results usually vary?
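
To make the “random perturbations” point concrete: text generators typically sample the next token from a probability distribution instead of always taking the top choice. Below is a toy sketch of temperature sampling in Python. The scores and the function name `sample_next_token` are invented for illustration and have nothing to do with Google’s actual model.

```python
import math
import random


def sample_next_token(scores: dict[str, float], temperature: float,
                      rng: random.Random) -> str:
    """Sample one token from a softmax over `scores`, scaled by temperature."""
    tokens = list(scores)
    scaled = [scores[t] / temperature for t in tokens]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for four candidate next words.
toy_scores = {"April": 2.0, "May": 1.6, "2019": 1.2, "never": 0.3}

rng = random.Random()  # unseeded, so each run can differ
print([sample_next_token(toy_scores, 1.0, rng) for _ in range(5)])
```

At a temperature near zero the top-scoring token wins almost every time; at 1.0 the less likely tokens show up regularly, which is why two people asking the same question can get different answers.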

The point here is that this is likely another fake image, meant to get the attention of people who quickly engage with everything anti-AI. Google does not generate an AI response to this query, which I only know because I attempted to recreate it. Instead of blindly taking everything you agree with at face value, it can behoove you to question it and test it out yourself.

Google is well known to do A/B testing, meaning you might not get a particular response (or even whole sets of results generated via different algorithms they are testing) even if your neighbor searches for the same thing.
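
For what it’s worth, a common way to run an A/B test is to hash each user into a bucket, so the same user always lands in the same variant while a neighbor may land in the other. Here is a minimal Python sketch of that general idea. It is not how Google does it, and the names `assign_bucket`, `ai_overview`, and `classic_results` are made up.

```python
import hashlib


def assign_bucket(user_id: str, experiment: str,
                  treatment_share: float = 0.5) -> str:
    """Deterministically map a user to one variant of an experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Turn the first 4 bytes of the hash into a fraction in [0, 1).
    fraction = int.from_bytes(digest[:4], "big") / 2**32
    return "ai_overview" if fraction < treatment_share else "classic_results"


for user in ["me", "my_neighbor", "op"]:
    print(user, assign_bucket(user, "hypothetical-experiment"))
```

Under a scheme like this, one person failing to reproduce a result says little about what someone in a different bucket saw.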

So again, I ask how your anecdotal evidence somehow invalidates other anecdotal evidence? If your evidence isn’t anecdotal, I am very interested in your results.

Otherwise, what you’re saying has the same or less value than the example.

These are the subtle types of errors that are much more likely to cause problems than when it tells someone to put glue in their pizza.

Yes, it’s totally OK to reuse fish tank tubing for grammy’s oxygen mask

Wait… why can’t we put glue on pizza anymore?

because the damn liberals canceled glue on pizza!

How else are the toppings supposed to stay in place?

Obviously you need hot glue for pizza, not the regular stuff.

It do be keepin the cheese from slidin off onto yo lap tho

You’re giving humans too much credit for intelligence… there are people who literally drove into lakes because a GPS told them to…

Why do we call it hallucinating? Call it what it is: lying. You want to be more “nice” about it: fabricating. “Google’s AI is fabricating more lies. No one dead… yet.”

To be fair, they call it a hallucination because hallucinations don’t have intent behind them.

LLMs don’t have any intent. Period.

A purposeful lie requires an intent to lie.

Without any intent, it’s not a lie.

I agree that “fabrication” is probably a better word for it, especially because it implies the industrial computing processes required to build these fabrications. It allows the word fabrication to function as a double entendre: It has been fabricated by industrial processes, and it is a fabrication as in a false idea made from nothing.

LLMs may not have any intent, but companies do. In this case, Google decides to present the AI answer on top of the regular search answers, knowing that AI can make stuff up. Maybe the AI isn’t lying, but Google definitely is. Even with the “everything is experimental, learn more” line, because they’d just give the information if they really wanted you to learn more, instead of making you click again for it.

In other words, I agree with your assessment here. The petty abject attempts by all these companies to produce the world’s first real “Jarvis” are all couched in “they didn’t stop to think if they should.”

My actual opinion is that they don’t want to think if they should, because they know the answer. The pressure to go public with a shitty model outweighs the responsibility to the people relying on the search results.

It is difficult to get a man to understand something when his salary depends on his not understanding it.

-Upton Sinclair

Sadly, same as it ever was. You are correct, they already know the answer, so they don’t want to consider the question.

There’s also the argument that “if we don’t do it, somebody else would,” and I kind of understand that, while I also disagree with it.

Oh, they absolutely should. A “Jarvis” would be great.

But that thing they are pushing has absolutely no relation to a “Jarvis”.

I did look up an article about it that basically said the same thing, and while I get “lie” implies malicious intent, I agree with you that fabricate is better than hallucinating.

The most damning thing to call it is “inaccurate”. Nothing will drive the average person away from a company’s information-gathering products faster than associating them with being inaccurate more often than not. That is why they are inventing different things to call it. It sounds less bad to say “my LLM hallucinates sometimes” than it does to say “my LLM is inaccurate sometimes”.

Because lies require intent to deceive, which the AI cannot have.

They merely predict the most likely thing that should be said next, so “hallucinations” is a fairly accurate description.

It’s not lying or hallucinating. It’s describing exactly what it found in search results. There’s a web page with that title from that date. Now the problem is that the web page is Pinterest and the title is the result of aggressive SEO. These types of SEO practices are what made Google largely useless for the past several years, and an AI that is based on these useless results will be just as useless.

it’s probably going to be doing that

Could this be grounds for CVS to sue Google? Seems like this could harm business if people think CVS products are less trustworthy. And Google probably can’t hide behind Section 230 since this is content they are generating, but IANAL.

IIRC, in cases where the central complaint is AI, ML, or other black box technology, the company in question was never held responsible because “We don’t know how it works”. The AI surge we’re seeing now is likely a consequence of those decisions and the crypto crash.

I’d love to see CVS try to push a lawsuit though.

I would love it if lawsuits brought the shit that is AI down. It has a few uses to be sure, but overall it’s crap for 90+% of what it’s used for.

In Canada there was a company using an LLM chatbot that had to uphold a claim the bot had made to one of their customers. So there’s precedent for forcing companies to take responsibility for what their LLMs say (at least if they’re presenting it as trustworthy and representative).

This was with regards to Air Canada and its LLM that hallucinated a refund policy, which the company argued they did not have to honour because it wasn’t their actual policy and the bot had invented it out of nothing.

An important side note is that one of the cited reasons the Court ruled in favour of the customer is that the company did not disclose that the LLM wasn’t the final say in its policy, and that a customer should confirm with a representative before acting upon the information. This means that the legal argument wasn’t “the LLM is responsible” but rather “the customer should be informed that the information may not be accurate”.

I point this out because I’m not so sure CVS would have a clear-cut case based on the Air Canada ruling, because I’d be surprised if Google didn’t have some legalese somewhere stating that they aren’t liable for what the LLM says.

But it has to be clearly presented. Consumer law and defamation law have different requirements on disclaimers.

But those end up being the same in practice. If you have to put up a disclaimer that the info might be wrong, then who would use it? I can get the wrong answer or unverified hearsay anywhere. The whole point of contacting the company is to get the right answer, or at least one the company is forced to stick to.

This isn’t just minor AI growing pains, this is a fundamental problem with the technology that causes it to essentially be useless for the use case of “answering questions”.

They can slap as many disclaimers as they want on this shit; but if it just hallucinates policies and incorrect answers it will just end up being one more thing people hammer 0 to skip past or scroll past to talk to a human or find the right answer.

Yeah, the legalese happens to be in the back pocket of Sundar Pichai.

IIRC Alaska Airlines had to pay

That was their own AI. If CVS’ AI claimed a recall, it could be a problem.

So will the Google AI be held responsible for defaming CVS?

Spoiler alert- they won’t.

“We don’t know how it works but released it anyway” is a perfectly good reason to be sued when you release a product that causes harm.

The crypto crash? Idk if you’ve looked at Crypto recently lmao

Current froth doesn’t erase the previous crash. It’s clearly just a tulip bulb. Even tulip bulbs were able to be traded as currency for houses and large purchases during tulip mania. How much does a great tulip bulb cost now?

67k, only barely away from its ATH

People been saying that for 10+ years lmao, how about we’ll see what happens.

67k what? In USD right? Tell us when buttcoin has its own value.

And this is what the rich will replace us with.

keep poisoning AI until it’s useless to everyone.

Why?

Because LLMs are planet destroying bullshit artists built in the image of their bullshitting creators. They are wasteful and they are filling the internet with garbage. Literally making the apex of human achievement, the internet, useless with their spammy bullshit.

Lol, got any more angry words?

Fuck 'em, that’s why.

For one thing, it’s an environmental disaster and few people seem to care.

https://e360.yale.edu/features/artificial-intelligence-climate-energy-emissions

Because he wants to stop it from helping impoverished people live better lives and all the other advantages simply because it didn’t exist when he was young and change scares him.

Holy shit your assumption says a lot about you. How do you think AI is going to “help impoverished people live better lives” exactly?

It’s fascinating to me that you genuinely don’t know. It shows not only that you have no active interest in working to benefit impoverished communities, but that you have no real knowledge of the conversations surrounding AI. Yet here you are throwing out your opinion with the certainty of a zealot.

If you had any interest or involvement in any aid or development project relating to the global south, you’d be well aware that one of the biggest difficulties for those communities is access to information and education in their first language, so a huge benefit of natural language computing would be very obvious to you.

Also, if you followed anything but knee-jerk anti-AI memes to try and develop an understanding of this emerging tech, you’d have without any doubt been exposed to the endless talking points on this subject. https://oxfordinsights.com/insights/data-and-power-ai-and-development-in-the-global-south/ is an interesting piece covering some of the current work happening on the language barrier problems I mentioned AI helping with.

he wants to stop it from helping impoverished people live better lives and all the other advantages simply because it didn’t exist when he was young and change scares him

That’s the part I take issue with, the weird probably-projecting assumption about people.

Have fun with the holier-than-thou moral high ground attitude about AI though, shit’s laughable.

I think you misunderstood the context. I’m not really saying that he actively wants to stop it helping poor people; I’m saying that he doesn’t care about or consider the benefits to other people, simply because he’s entirely focused on his own emotional response, which stems from a fear of change.

Because they will only be used by corporations to replace workers, furthering class divide, ultimately leading to a collapse in countries and economies. Jobs will be taken, and there will be no resources for the jobless. The future is darker than bleak should LLMs and AI be allowed to be used indeterminately by corporations.

furthering class divide, ultimately leading to a collapse in countries and economies

Might be the cynic in me, but I don’t think that would be the worst outcome. Maybe it will finally be the straw that breaks the camel’s back and gets people to realize that being a highly replaceable worker drone wage slave isn’t really going anywhere for anyone except the top 0.001%.

We should use them to replace workers, letting everyone work less and have more time to do what they want.

We shouldn’t let corporations use them to replace workers, because workers won’t see any of the benefits.

that won’t happen. technological advancement doesn’t allow you to work less, it allows you to work less for the same output. so you work the same hours but the expected output changes, and your productivity goes up while your wages stay the same.

technological advancement doesn’t allow you to work less,

It literally has (When forced by unions). How do you think we got the 40-hr workweek?

How do you think we got the 40hr work week?

Unions fought for it after seeing the obvious effects of better technology reducing the need for work hours.

it was forced by unions.

In response to better technology that reduced the need for work hours.

That wasn’t technology. It was the literal spilling of blood of workers and organizers fighting and dying for those rights.

And you think they just did it because?

They obviously thought they deserved it, because… technology reduced the need for work hours, perhaps?

because the sooner corporate meatheads clock that this shit is useless and doesn’t bring that hype money the sooner it dies, and that’d be a good thing because making shit up doesn’t require burning a square km of rainforest per query

not that we need any of that shit anyway. the only things these plagiarism machines seem to be okayish at are mass manufacturing spam and disinfo, and while some Adderall-fueled middle managers will try to replace real people with it, it will fall flat on this task (not that it ever stopped them)

I think it sounds like there are huge gains to be made in energy efficiency instead.

Energy costs money so datacenters would be glad to invest in better and more energy efficient hardware.

orrrr just ditch the entire overhyped underdelivering thing

It can be helpful if you know how to use it though.

I don’t use it myself a lot, but quite a few people at work use it and are very happy with ChatGPT.

There are also AI poisoners for images and audio data