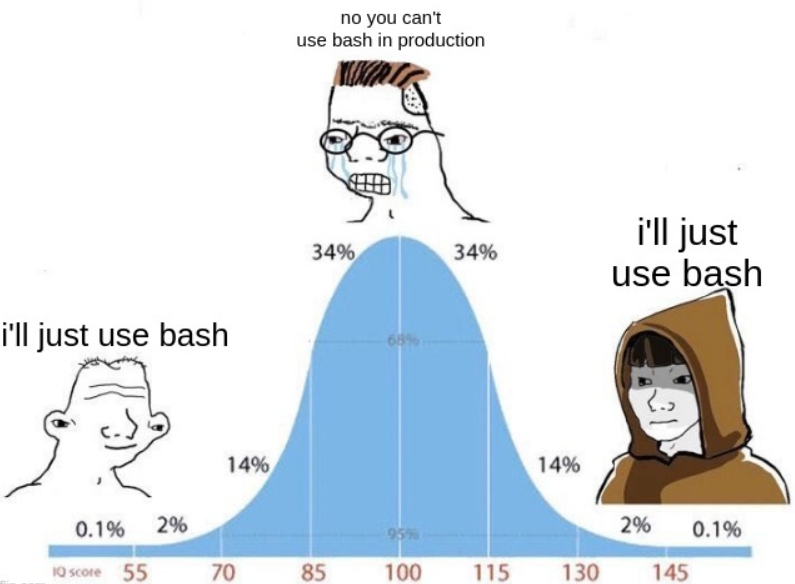

This may make some people pull their hair out, but I’d love to hear some arguments. I’ve had the impression that people really don’t like bash, not from here, but just from people I’ve worked with.

There was a task at work where we wanted something that’ll run on a regular basis, and doesn’t do anything complex aside from reading from the database and sending the output to some web API. Pretty common these days.

I can’t think of a simpler scripting language to use than bash. Here are my reasons:

- Reading from the environment is easy, and so is falling back to some value; just do `${VAR:-fallback}`. No need to write another if-statement to check for nullity. Wanna check if a variable’s set to something expected? `if [[ <test goes here> ]]; then <handle>; fi`

- Reading from arguments is also straightforward; instead of `import sys; sys.argv[1]` in Python, you just do `$1`.

- Sending a file via HTTP as part of an `application/x-www-form-urlencoded` request is super easy with `curl`. In most programming languages, you’d have to manually open the file and read it into bytes before putting it into your request for the HTTP library that you need to import. `curl` already does all that.

- Need to read from a `curl` response and it’s JSON? Reach for `jq`.

- Instead of having to set up a connection object/instance to your database, give `sqlite`, `psql`, `duckdb` or whichever CLI db client a connection string with your query and be on your way.

- Shipping is… fairly easy? Especially if docker is common in your infrastructure. Pull `ubuntu` or `debian` or `alpine`, install your dependencies through the package manager, and you’re good to go. If you stay within Linux and don’t have to deal with differences in bash and core utilities between different OSes (looking at you macOS), and assuming you tried not to do anything too crazy and installed the tools you call as dependencies, it should be fairly portable.
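A minimal sketch of the first two bullets, assuming a job that reads a hypothetical `API_URL` variable and takes an output path as its first argument (the names and the default URL are illustrative). It also shows `${1:?message}`, the stricter sibling of `:-` that aborts with a message instead of falling back.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical settings for a small export job (names are illustrative).
load_settings() {
  # Environment variable with a fallback when it is unset or empty.
  api_url="${API_URL:-https://example.invalid/ingest}"
  # Required first argument: ${1:?msg} aborts the script with msg if it's missing.
  output_path="${1:?usage: export-job OUTPUT_PATH [BATCH_SIZE]}"
  # Optional second argument with its own default.
  batch_size="${2:-100}"
}
```

Call it as `load_settings "$@"` near the top of the script; run without arguments, the script stops with the usage message.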

Sure, there can be security vulnerability concerns, but you’d still have to deal with the same problems with your Pythons, your Rubies, etc.

For most bash gotchas, shellcheck does a great job at warning you about them and telling you how to address them.

There are probably a bunch of other considerations I can’t think of off the top of my head, but I’ve addressed a bunch of them before.

So what’s the dealeo? What am I missing that may not actually be addressable?

Honestly, if a script grows to more than a few tens of lines I’m off to a different scripting language because I’ve written enough shell script to know that it’s hard to get right.

Shellcheck is great, but what’s greater is a language that doesn’t have as many gotchas from the get go.

What gave you the impression that this was just for development? Bash is widely used in production environments for scripting all over enterprises. The people you work with just don’t have much experience at lots of shops I would think.

It’s just not wise to write an entire system in bash. Just simple little tasks to do quick things. Yes, in production. The devops world runs on bash scripts.

Bash is widely used in production environments for scripting all over enterprises.

But it shouldn’t be.

The people you work with just don’t have much experience at lots of shops I would think.

More likely they do have experience of it and have learnt that it’s a bad idea.

Yeah, no.

I’ve never had that impression, and I know that even large enterprises have Bash scripts essentially supporting a lot of the work of a lot of their employees. But there are also many very loud voices that seem to like screaming that you should barely use Bash at all.

You can take a look at the other comments to see how some are entirely turned off by even the idea of using bash, and there aren’t just a few of them.

This Lemmy thread isn’t representative of the real world. I’ve been a dev for 40 years. You use what works. Bash is a fantastic scripting tool.

I understand that. I have coworkers with about 15-20 years in the industry, and they frown whenever I put a bash script out for, say, a purpose that I put in my example: self-contained, clearly defined boundaries, simple, and not mission critical despite handling production data, typically done in less than 100 lines of bash with generous spacing and comments. So I got curious, since I don’t feel like I’ve ever gotten a satisfactory answer.

Thank you for sharing your opinion!

My #1 rule for the teams I lead is “consistency”. So it may fall back to that. The standard where you work is to use a certain way of doing things so everyone becomes skilled at the same thing.

I have the same rule, but I always let a little bash slide here and there.

Pretty much all languages are middleware, and most of the original code was shell/bash. All new employees in platform/devops want to immediately push their preferred language; they want Java and Rust environments. It’s a pretty safe bet that if they insist on using a specific language, they don’t know how to use awk or sed. Bash has all the tools you need, but good developers understand you write libraries for functionality that’s missing. Modern languages like Python have been widely adopted, have friendlier onboarding, and will save you time, though.

Saw this guy’s post in another thread, he’s strawmanning because of lack of knowledge.

Pretty much all languages are middleware, and most of the original code was shell/bash.

What? I genuinely do not know what you mean by this.

2 parts:

- All languages are middleware. Unless you write in assembly, whatever you write isn’t directly being executed; it’s run through a compiler and translated from your “middle language” into 0s and 1s the computer can understand. Middleware is code used in between libraries to duplicate their functionality. https://azure.microsoft.com/en-us/resources/cloud-computing-dictionary/what-is-middleware/

- Most original code was written in shell. Most scripting is done in the CLI or shell language and stored as a `script.sh` file, containing instructions to execute tasks. Before Python was invented you used the basic shell because nothing else existed yet.

The first part is confusing what “middleware” means. Rather than “duplicating” functionality, it connects libraries (I’m guessing this is what you meant). But that has nothing to do with a language being compiled versus “directly executed”, because compilation doesn’t connect different services or libraries; it just transforms a higher-level description of execution into an executable binary. You could argue that an interpreter or managed runtime is a form of “middleware” between interpreted code and the operating system, but middleware typically doesn’t describe anything so critical to a piece of software that the software can’t run without it, so even that isn’t really a correct use of the term.

The second part is just…completely wrong. Lisp, Fortran, and other high-level languages predate terminal shells; C obviously predates the shell because most shells are written in C. “Most original code” is in an actual systems language like C.

(As a side note, Python wasn’t the first scripting language, and it didn’t become popular very quickly. Perl and Tcl preceded it; Lua, PHP, and R were invented later but grew in popularity much earlier.)

You are stuck on 100% accuracy and trying to actually stuff to death. The user asked if it’s possible to write an application in bash and the answer is an overwhelming duh. Most assembly languages are emulators and they all predate C. You are confident, wrong and loud. Guess I struck a nerve when I called you out for needing a specific language.

In addition to not actually being correct, I don’t think the information you’ve provided is particularly helpful in answering OP’s question.


I’ve only ever used bash.

May I introduce you to `rust-script`? Basically a wrapper to run rust scripts right from the command line. They can access the rust stdlib, crates, and so on, plus do error handling and much more.

Basically a wrapper to run rust scripts right from the command line.

Isn’t that just Python? :v

How easily can you start parsing arguments and read env vars? Do people import clap and such to provide support for those sorts of needs?

I’d use clap, yeah. And env vars: `std::env::var("MY_VAR")?`

You can of course start writing your own macro crate. I wouldn’t be surprised if someone already did write a proc macro crate that introduces its own syntax to make calling subprocesses easier. The shell is… your oyster 😜

I can only imagine that macro crate being a nightmare to read and maintain given how macros are still insanely hard to debug last I heard (might be a few years ago now).

proc macros can be called in tests and debugged. They aren’t that horrible, but can be tedious to work with. A good IDE makes it a lot easier though, that’s for sure.

Check out @[email protected]’s comment with existing libraries. Someone already did the work! 🎉

I’m so glad we have madlads in rust land xD Thanks for referring me to that!

Yeah, sometimes I’ll use that just to have the sane control flow of Rust, while still performing most tasks via commands.

You can throw down a function like this to reduce the boilerplate for calling commands:

```rust
use std::process::Command;

/// Run `command` through `sh -c`, panicking if it can't be spawned or exits non-zero.
fn run(command: &str) {
    let status = Command::new("sh")
        .arg("-c")
        .arg(command)
        .status()
        .expect("failed to spawn sh");
    assert!(status.success(), "command failed: {command}");
}
```

Then you can just write `run("echo 'hello world' > test.txt");` to run your command.

Defining `run` is definitely the quick way to do it 👍 I’d love to have a proc macro that takes a bash-like syntax, e.g. `someCommand | readsStdin | processesStdIn > someFile`, and builds the necessary Rust to use. xonsh does it using a superset of Python, but I never really got into it.

Wow, that’s exactly what I was looking for! Thanks dude.

“Use the best tool for the job, that the person doing the job is best at.” That’s my approach.

I will use bash or Python or Dart or whatever the project uses.

Bash is perfectly good for what you’re describing.

Serious question (as a bash complainer):

Have I missed an amazing bash library for secure database access that justifies a “perfectly good” here?

Every database I know comes with an SQL shell that takes commands from stdin and writes query results to stdout. Remember that “bash” never means bash alone, but all the command line tools from cut via jq to awk and beyond … so, that SQL shell would be what you call “bash library”.

Thank you. I wasn’t thinking about that. That’s a great point.

As long as any complex recovery logic fits inside the SQL itself, I don’t have any issue invoking it from `bash`. It’s when there’s complicated follow-up that needs to happen in bash that I get anxious about it, due to past painful experiences.

Right, that’s when you should look for a driver language that’s better suited for the job, e.g. Python.

I don’t disagree with this, and honestly I would probably support just using bash like you said if I was in a team where this was suggested.

I think no matter how simple a task is there are always a few things people will eventually want to do with it:

- Reproduce it locally

- Run unit tests, integration tests, smoke tests, whatever tests

- Expand it to do more complex things or make it more dynamic

- Monitor it in tools like Datadog

If you have a whole project already written in Python, Go, Rust, Java, etc., then just writing more code in this project might be simpler, because all the tooling and methodology is already integrated. A script might not be as visible to developers who focus more on the code base, so “out of sight, out of mind” sets in and no one even knows about the script.

There is also the consideration that many people simply dislike bash since it’s an odd language and many feel it’s difficult to do simple things with it.

Due to these reasons, although I would agree with making the script, I would also be inclined to keep the script only temporarily while another solution is being implemented.

I don’t necessarily agree that all simple tasks will lead to the need for a test suite to accommodate more complex requirements. If it does reach that point,

- Your simple bash script has already been providing basic value.

- You can (and should) move onto a more robust language to do more complicated things and bring in a test suite, all while you have something functional and delivering value.

I also don’t agree that you can just solder on whatever small task you have to whatever systems you already have up and running. That’s how you make a Frankenstein. Someone at some point will have to come do something about your little section because it started breaking, or causing other things to break. It could be throwing error messages because somebody changed the underlying db schema. It could be calling and retrying a network call and due to, perhaps, poorly configured backoff strategy, you’re tripping up monitoring alerts.

That said, I do agree it’s suitable for temporary tasks.

I agree with your points, except that if the script ever needs maintaining by someone else, they will curse you, and if it gets much more complicated it can quickly become spaghetti. But I do have a fair number of bash scripts running on cron jobs; sometimes its simplicity is unbeatable!

Personally, though, the language I reach for when I need a script is Python with the click library; it handles arguments and is really easy to work with. If you want to keep Python deps down you can also use the sh module to run system commands like they’re regular Python functions, pretty handy.

Those two libraries actually look pretty good, and it seems like you can remove a lot of the boilerplate-y code you’d need to write without them. I will keep those in mind.

That said, I don’t necessarily agree that bash is bad from a maintainability standpoint. In a team where it’s not commonly used, yeah, nobody will like it, but isn’t that the same as nobody liking it if I wrote in some language the team doesn’t already use? For really simple, well-defined tasks, where you make it really clear to stakeholders that complexity is just a burden for everyone, the code should be fairly simple and straightforward. If it ever needs to get complicated, then you should, for sure, ditch bash and go for a larger language.

That said, I don’t necessarily agree that bash is bad from a maintainability standpoint.

My team uses bash all the time, but we agree (internally as a team) that bash is bad from a maintainability perspective.

As with any tool we use, some of us are experts, and some are not. But the non-experts need tools that behave themselves on days when experts are out of office.

We find that bash does very well when each entire script has no need for branching logic, security controls, or error recovery.

So we use substantial amounts of bash in things like CI/CD pipelines.

Hell, I hate editing bash scripts I’ve written. The syntax just isn’t as easy.

I just don’t think bash is good for maintaining the code, debugging, growing the code over time, adding automated tests, or exception handling.

If you need anything that complex, and it’s critical for, say, customers, or people doing things directly for customers, you probably shouldn’t use bash. Anything that needs to grow? Definitely not bash. I’m not saying bash is what you should use if you want it to grow into, say, a web server, but that it’s good enough for small tasks that you don’t expect to grow in complexity.

small tasks that you don’t expect to grow in complexity

At one conference I heard the saying: “There is no such thing as a temporary solution and there is no such thing as a proof of concept”. It’s an exaggeration, of course, but it has some truth to it: there’s a high chance that your “small change” or PoC will be used for the next 20 years, so write it as robust and resilient as possible and document it. In other words, everything will be extended, everything will be maintained, everything will change hands.

So to your point - is bash production ready? Well, depends. Do you have it in git? Is it part of some automation pipeline? Is it properly documented? Do you by chance have some tests for it? Then yes, it’s production ready.

If you just “write this quick script and run it in cron” then no. Because in 10 years people will be pulling their hair out screaming “what the hell is happening?!”

Edit: or worse, they’ll scream it during the next incident that’ll happen at 2 AM on a Sunday.

I find it disingenuous to blame it on the choice of bash being bad when goalposts are moved. Solutions can be temporary as long as goalposts aren’t being moved. Once the goalpost is moved, you have to re-evaluate whether your solution is still sufficient to meet new needs. If literally everything under the sun and out of it needs to be written in a robust manner to accommodate moving goalposts, by that definition, nothing will ever be sufficient, unless, well, we’ve come to a point where a human request by words can immediately be compiled into machine instructions to do exactly what they’ve asked for, without loss of intention.

That said, as engineers, I believe it’s our responsibility to identify and highlight severe failure cases given a solution and its management, and it is up to the stakeholders to accept those risks. If you need something running at 2am, and a failure of that process would require human intervention, then maybe you should consider not running it at 2am, or pick a language with more guardrails.

it’s (bash) good enough for small tasks that you don’t expect to grow in complexity.

I don’t think you’ll get a lot of disagreement on that, here. As mentioned elsewhere, my team prefers bash for simple use cases (and as their bash-hating boss, I support and agree with how and when they use bash).

But a bunch of us draw the line at database access.

Any database is going to throw a lot of weird shit at the bash script.

So, to me, a bash script has grown to unacceptable complexity on the first day that it accesses a database.

We have dozens of bash scripts running table cleanups and maintenance tasks on the db. In the last 20 years these scripts were more stable than the database itself (Oracle -> MySQL -> Postgres).

But in all fairness they just call the CLI client with the appropriate SQL and check for the response code, generating a trap.
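A minimal sketch of that call-and-check pattern, assuming the client reads SQL on stdin and signals failure through its exit status. `DB_CLI`, `run_sql`, `cleanup_table` and `alert` are hypothetical names, and the alert only stands in for whatever trap or notification your monitoring expects. For `psql`, `-v ON_ERROR_STOP=1` is what turns a failing statement into a non-zero exit code.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical alert hook: replace with whatever your monitoring expects
# (SNMP trap, webhook, mail...). Here it only writes to stderr.
alert() { echo "ALERT: $*" >&2; }

# Send SQL to a DB command-line client on stdin. DB_CLI is an assumed
# variable holding the client command line (split on whitespace, so no
# quoting inside it). For psql, ON_ERROR_STOP=1 makes SQL errors exit non-zero.
run_sql() {
  local -a cli
  read -r -a cli <<<"${DB_CLI:-psql -v ON_ERROR_STOP=1}"
  "${cli[@]}" <<<"$1"
}

# Postgres-flavoured cleanup of one trusted, hard-coded table name.
cleanup_table() {
  local status=0
  run_sql "DELETE FROM $1 WHERE created_at < now() - interval '30 days';" || status=$?
  if (( status != 0 )); then
    alert "cleanup of $1 failed with exit code $status"
    return "$status"
  fi
  echo "cleanup of $1 ok"
}
```

In cron this might be run as `DB_CLI="psql -v ON_ERROR_STOP=1 $DATABASE_URL" cleanup_table audit_log`, where the table name is made up.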

That’s a great point.

I post long enough responses already, so I didn’t want to get into resilience planning, but your example is a great highlight that there’s rarely hard and fast rules about what will work.

There certainly are use cases for bash calling database code that make sense.

I don’t actually worry much when it’s something where the first response to any issue is to run it again in 15 minutes.

It’s cases where we might need to do forensic analysis that bash plus SQL has caused me headaches.

Yeah, if it feels like a transaction would be helpful, at least go for PL/SQL and save yourself some pain. Bash is for system maintenance, not for business logic.

Heck, I wrote a whole monitoring system for a telephony switch with nothing more than bash and awk and it worked better than the shit from the manufacturer, including writing to the ISDN cards for mobile messaging. But I wouldn’t do that again if I had an alternative.

Bash is for system maintenance, not for business logic.

That is such a good guiding principle. I’m gonna borrow that.

It’s ok for very small scripts that are easy to reason through. I’ve used it extensively in CI/CD, just because we were using Jenkins for that and it was the path of least resistance. I do not like the language though.

Can I slap a decorator on a Bash function? I love my `@retry(...)` (via `tenacity`, even if it’s a bit wordy).

I’m going to read this with a big “/s” at the end there xD
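Bash has no decorators, but a small higher-order function gets you most of the way to `@retry`. A rough sketch, assuming integer delays and a simple doubling backoff; `retry` is a hypothetical helper, not a builtin, and unlike `tenacity` it has no jitter or exception filtering.

```bash
#!/usr/bin/env bash
set -euo pipefail

# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Rerun COMMAND until it succeeds, sleeping DELAY_SECONDS between tries
# (doubling each time); give up with the last exit status after ATTEMPTS tries.
retry() {
  local attempts=$1 delay=$2 n=1 status
  shift 2
  while true; do
    status=0
    "$@" || status=$?          # capture the status without tripping set -e
    if (( status == 0 )); then
      return 0
    fi
    if (( n >= attempts )); then
      echo "retry: '$*' failed after $n attempts (exit $status)" >&2
      return "$status"
    fi
    echo "retry: attempt $n failed (exit $status), retrying in ${delay}s" >&2
    sleep "$delay"
    n=$((n + 1))
    delay=$((delay * 2))
  done
}
```

Usage looks like `retry 5 2 curl -fsS "$API_URL"`, i.e. the command and its arguments are passed through unquoted-word-for-word, much like the decorated call.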

At the level you’re describing it’s fine. Preferably use shellcheck and `set -euo pipefail` to make it more normal.

But once I have any of:

- nested control structures, or

- multiple functions, or

- have to think about handling anything other than simple strings that other programs manipulate (including thinking about bash arrays or IFS), or

- bash scoping,

- producing my own formatted logs at different log levels,

I’m on to Python or something else. It’s better to get off bash before you have to juggle complexity in it.
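To see what each part of `set -euo pipefail` changes, a small sketch that runs snippets in throwaway child shells so the options don't leak (`demo` and `SURELY_UNSET_VAR` are made-up names).

```bash
#!/usr/bin/env bash
# What each part of `set -euo pipefail` changes:
#   -e           exit as soon as a command fails (outside if/while/&&/|| tests)
#   -u           expanding an unset variable is an error instead of ""
#   -o pipefail  a pipeline fails if ANY stage fails, not just the last one

demo() {
  # $1: options for `set`, $2: snippet to run; prints the snippet's exit status
  local status=0
  bash -c "set $1; $2" >/dev/null 2>&1 || status=$?
  echo "$status"
}

demo "+o pipefail" 'false | true'      # 0: only the last stage counts
demo "-o pipefail" 'false | true'      # 1: the failing first stage is reported
demo "+e" 'false; true'                # 0: execution carries on after `false`
demo "-e" 'false; true'                # 1: the shell stops at `false`
demo "+u" 'echo "$SURELY_UNSET_VAR"'   # 0: expands to an empty string
demo "-u" 'echo "$SURELY_UNSET_VAR"'   # non-zero: "unbound variable"
```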

-e is great until there’s a command that you want to allow to fail in some scenario.

I know OP is talking about bash specifically but pipefail isn’t portable and I’m not always on a system with bash installed.
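For the case of one command that is allowed to fail under `set -e`, a sketch of three idioms that scope the exception to that single command. They are plain POSIX sh, so they also work where `pipefail` isn't available; `maybe_fails` and the other function names are hypothetical, with `grep -q` standing in for a command whose non-zero exit is sometimes fine.

```bash
#!/usr/bin/env bash
set -eu   # no pipefail here; the idioms below are plain POSIX sh

# Hypothetical command that may legitimately fail ("grep found nothing").
maybe_fails() { grep -q "needle" "$1"; }

# 1. Ignore the failure entirely.
ignore_failure() {
  maybe_fails "$1" || true
  echo "continued"
}

# 2. Branch on it: commands tested by if/while/&&/|| don't trigger -e.
branch_on_failure() {
  if maybe_fails "$1"; then
    echo "found"
  else
    echo "not found"
  fi
}

# 3. Capture the exact exit status without tripping -e.
capture_status() {
  status=0
  maybe_fails "$1" || status=$?
  echo "status=$status"
}
```

Compared with toggling `set +e` / `set -e` around a block, these can't leave `-e` switched off by accident if an early `return` skips the `set -e`.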

-e is great until there’s a command that you want to allow to fail in some scenario.

Yeah, I sometimes do

```bash
set +e
do_stuff
set -e
```

It’s sort of the bash equivalent of a

```
try {
    do_stuff()
} catch {
    /* intentionally bare catch for any exception and error */
    /* usually a noop, but you could try some stuff with if and $? */
}
```

I know OP is talking about bash specifically but pipefail isn’t portable and I’m not always on a system with bash installed.

Yeah, I’m happy I don’t really have to deal with that. My worst case is having to ship to some developer machines running macOS, which has bash from the stone ages, but I can still do stuff like rely on `[[` rather than have to deal with `[`. I don’t have a particular fondness for using `bash` as anything but a sort of config file (with `export SETTING1=...` etc.) and some light handling of other applications, but I have even less fondness for POSIX `sh`. At that point I’m liable to rewrite it in Python, or if that’s not available in a user-friendly manner either, build a small static binary.

It’s nice to agree with someone on the Internet for once :)

Have a great day!

If you’re writing a lot of shell scripts and checking them with Shellcheck, and you’re still convinced that it’s totally safe… I tip my hat to you.

Also, don’t forget `set -E`: it makes an `ERR` trap get inherited by functions, command substitutions and subshells, which it otherwise isn’t.
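What `set -E` (errtrace) changes is whether an `ERR` trap is inherited by shell functions, command substitutions and subshells; `-e` is what makes the script exit. A small sketch that counts how often an `ERR` trap fires for a failure inside a function, with errtrace off and on (`count_err_traps`, `on_err` and `inner` are made-up names).

```bash
#!/usr/bin/env bash
# Count ERR-trap firings for a failure *inside* a function, with errtrace
# off (+E) and on (-E). Each run uses a child bash so options don't leak.
count_err_traps() {
  bash -c '
    set "$1"                        # "-E" enables errtrace, "+E" disables it
    hits=0
    on_err() { hits=$((hits + 1)); }
    trap on_err ERR
    inner() { false; return 0; }    # fails internally, still returns 0
    inner
    echo "$hits"
  ' _ "$1"
}

count_err_traps +E   # 0: the ERR trap is not inherited by inner()
count_err_traps -E   # 1: with errtrace it fires on the `false` inside inner()
```

This is why `set -eE` is often paired with an `ERR` trap that logs the failing command: without `-E`, a failure inside a function can end the script without that trap ever running.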

In your own description you added a bunch of considerations, requirements of following specific practices, having specific knowledge, and a ton of environmental requirements.

For simple scripts or duct-tape schedules all of that is fine. For anything else, I would be at least mindful, if not skeptical, of bash being a good tool for the job.

Bash is installed on all linux systems. I would not be very concerned about some dependencies like sqlite, if that is what you’re using. But very concerned about others, like jq, which is an additional tool and requirement where you or others will eventually struggle with diffuse dependencies or managing a managed environment.

Even if you query sqlite or whatever tool with the command line query tool, you have to be aware that getting a value like that into bash means you lose a lot of typing and structure information. That’s fine if you get only one or very few values. But I would have strong aversions when it goes beyond that.
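A sketch of what that looks like in practice, assuming a client that prints tab-separated rows (for `psql`, something like `-At -F $'\t'`); `fake_query` stands in for the real CLI and its rows are made up. Every field arrives as a string, so even a simple sum needs a manual type check.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Stand-in for a DB CLI printing tab-separated rows: id, name, count.
# Hypothetical data for illustration only.
fake_query() {
  printf '%s\t%s\t%s\n' 42 "Ada Lovelace" 3
  printf '%s\t%s\t%s\n' 7 "Grace Hopper" "n/a"
}

# Everything read from the CLI is a string; types must be checked by hand.
sum_counts() {
  local id name count total=0
  while IFS=$'\t' read -r id name count; do
    if [[ ! $count =~ ^[0-9]+$ ]]; then
      echo "row $id ($name): count is not an integer: '$count'" >&2
      continue
    fi
    total=$((total + count))
  done < <(fake_query)
  echo "$total"
}
```

One more structural pitfall: tab counts as IFS whitespace, so an empty column in the middle of a row collapses into its neighbour and shifts the remaining fields.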

You seem to be familiar with Bash syntax. But others may not be. It’s not a simple syntax to get into and intuitively understand without mistakes. There are too many alternatives for if-ing and comparing values. It ends up as magic. In your example, if you read code, you may guess that `:-` means fallback, but it’s not necessarily obvious. And certainly not other magic flags and operators.
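A small sketch of why `:-` is easy to misread: the colon decides whether an empty value counts as missing. `VAR` and `show_fallbacks` are illustrative names; the same colon rule applies to `:=`, `:?` and `:+`.

```bash
#!/usr/bin/env bash
# ":-" falls back when VAR is unset OR empty; plain "-" only when it is unset.
show_fallbacks() {
  echo "$1 colon=[${VAR:-fallback}] nocolon=[${VAR-fallback}]"
}

unset VAR;   show_fallbacks unset   # unset colon=[fallback] nocolon=[fallback]
VAR="";      show_fallbacks empty   # empty colon=[fallback] nocolon=[]
VAR="value"; show_fallbacks set     # set colon=[value] nocolon=[value]
```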

As an anecdote, I guess the most complex thing I have done with Bash was scripting a deployment and starting test-runs onto a distributed system (and I think collecting results? I don’t remember). Bash was available and copying and starting processes via ssh was simple and robust enough. Notably, the scope and env requirements were very limited.

You seem to be familiar with Bash syntax. But others may not be.

If by this you mean that the Bash syntax for doing certain things is horrible and that it could be expressed more clearly in something else, then yes, I agree, otherwise I’m not sure this is a problem on the same level as others.

OP could pick any language and have the same problem. Except maybe Python, but even that strays into symbolic line noise once a project gets big enough.

Either way, comments can be helpful when strange constructs are used. There are comments in my own Bash scripts that say what a line is doing rather than just why precisely because of this.

But I think the main issue with Bash (and maybe other shells), is that it’s parsed and run line by line. There’s nothing like a full script syntax check before the script is run, which most other languages provide as a bare minimum.
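For what it's worth, bash does have a parse-only mode: `bash -n` (the same as `set -n`, noexec) reads the whole script and reports syntax errors without running anything. It won't catch runtime problems such as a misspelled command or an unset variable, which is where shellcheck comes in. A sketch of using it as a pre-flight check; `syntax_ok` is a hypothetical helper.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Parse the whole file without executing it; non-zero on any syntax error.
syntax_ok() {
  bash -n "$1" 2>/dev/null
}
```

In CI that could be as simple as `for f in scripts/*.sh; do bash -n "$f"; done`, where `scripts/` is a placeholder path.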

OP could pick any language and have the same problem. Except maybe Python, but even that strays into symbolic line noise once a project gets big enough.

Personally, I don’t see python far off from bash. Decent for small scripts, bad for anything bigger. While not necessarily natively available, it’s readily available and more portable (Windows), and has a rich library ecosystem.

Personally, I dislike the indent syntax. And the various tooling and complexities don’t feel approachable or stable, and the structuring isn’t good.

But maybe that’s me. Many people seem to enjoy or reach for python even for complex systems.

More structured and stable programming languages do not have these issues.

As one other comment mentioned, unfamiliarity with a particular language isn’t restricted to just bash. I could say the same for someone who only dabbles in C being made to read through Python. What’s this `@decorator` thing? Or what’s `f"Some string: {variable}"` supposed to do, and how’s memory being allocated here? It’s a domain, and we aren’t expected to know every single domain out there.

And your mention of losing typing and structure information is… ehh… somewhat of a weird argument. There are many cases where you don’t care about the contents of an output and only care about the process of spitting out that output being a success or failure, and that’s bread and butter in shell scripts. Need to move some files, either locally or over a network? Bash is good for most cases. If you do need something just a teeny bit more, like whether some key string or pattern exists in the output, there’s grep. Need to do basic string replacements? sed or awk. Of course, all that depends on how familiar you or your teammates are with each of those tools. If nearly half the team are not, stop using bash right there and write it in something else the team’s familiar with, no questions there.
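A minimal sketch of that exit-status-first style, using a made-up `deploy_log` as the command whose output we only partly care about. The output is captured once and fed to each tool with a here-string; piping straight into `grep -q` under `pipefail` can report a spurious failure, because `grep -q` exits on the first match and the writer may then get `SIGPIPE`.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical command output; only success/failure and a key string matter.
deploy_log() {
  printf '%s\n' "uploading artifact" "release v1.4.2 deployed" "done"
}

# grep -q: no output, just an exit status to branch on.
confirm_deploy() { grep -q 'deployed' <<<"$1"; }

# sed: a basic string replacement on every line.
bump_version() { sed 's/v1\.4\.2/v1.4.3/' <<<"$1"; }

# awk: pull the second word out of the line mentioning the release.
released_version() { awk '/release/ { print $2 }' <<<"$1"; }

log=$(deploy_log)
if confirm_deploy "$log"; then
  echo "deploy confirmed: $(released_version "$log")"
fi
```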

This is somewhat of an aside, but jq is actually pretty well-known and rather heavily relied upon at this point. Not to the point of say sqlite, but definitely more than, say, grep alternatives like ripgrep. I’ve seen it used quite often in deployment scripts, especially when interfaced with some system that replies with a json output, which seems like an increasingly common data format that’s available in shell scripting.

Yes, every unfamiliar language requires some learning. But I don’t think the bash syntax is particularly approachable.

I searched and picked the first result, but this seems to present what I mean pretty well https://unix.stackexchange.com/questions/248164/bash-if-syntax-confusion which doesn’t even include the alternative if parens https://stackoverflow.com/questions/12765340/difference-between-parentheses-and-brackets-in-bash-conditionals

I find other languages’ syntaxes much more approachable.
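To make that concrete, a sketch of the four forms that commonly appear after `if` in bash and what each one actually is (`check` and its arguments are made up).

```bash
#!/usr/bin/env bash
set -euo pipefail

# What can follow `if` in bash:
#   [ ... ]    the POSIX `test` command: quote variables, use -eq/-gt for numbers
#   [[ ... ]]  bash keyword: no word splitting, supports == globs and =~ regexes
#   (( ... ))  arithmetic evaluation: C-style comparisons on integers
#   ( ... )    not a test at all: runs commands in a subshell, if uses the exit status
check() {
  local name=$1 count=$2
  if [ "$count" -gt 10 ]; then echo "test: big"; fi
  if [[ $name == *.csv ]]; then echo "keyword: csv file"; fi
  if (( count > 10 )); then echo "arith: big"; fi
  if ( [[ $name == *.csv ]] && (( count > 0 )) ); then echo "subshell: non-empty csv"; fi
}
```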

I also mentioned the magic variable expansion operators. https://www.gnu.org/software/bash/manual/html_node/Shell-Parameter-Expansion.html

Most other languages are more expressive.
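A few of those expansion operators next to what they replace, assuming an illustrative backup path; the comments give the result of each line.

```bash
#!/usr/bin/env bash
set -euo pipefail

path="/var/backups/db-2024-01-31.sql.gz"   # illustrative value
file="${path##*/}"

echo "${path##*/}"    # longest "*/" prefix removed (basename): db-2024-01-31.sql.gz
echo "${path%/*}"     # shortest "/*" suffix removed (dirname):  /var/backups
echo "${file%%.*}"    # longest ".*" suffix removed:  db-2024-01-31
echo "${file#*.}"     # shortest "*." prefix removed: sql.gz
echo "${file//-/_}"   # every "-" replaced with "_":  db_2024_01_31.sql.gz
echo "${#file}"       # length in characters: 20
```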

Your experiences are based on your familiarity with other languages. It may or may not apply to others. So to each their own I guess?

I do agree that the square bracket situation is not best though. But once you know it, you, well, know it. There’s also shellcheck to warn you of gotchas. Not the best to write in, but we have linters in most modern languages for a reason.

I actually like bash’s variable expansion. It’s very succinct (so easier to write and move onto your next thing) and handles many common cases. The handling is what I hope most stdlibs in languages would do with env vars by default, instead of having to write a whole function to do that handling. Falling back is very very commonly used in my experience.

There are cases where programming is an exercise of building something. Other times, it’s a language, and when we speak, we don’t necessarily want to think too much about syntax or grammar, and we’d even invent syntaxes to make what we have to say shorter and easier to say, so that we may speak at the speed of thought.

Run checkbashisms over your $PATH (grep for #!/bin/sh). That’s the problem with Bash.

`#!/bin/sh` is for POSIX-compliant shell scripts only; use `#!/bin/bash` if you use bash syntax.

Btw, I quite like yash.
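Along the same lines, a script that relies on bash-only syntax can refuse to run under a plain POSIX `sh` instead of failing halfway. A minimal sketch, assuming `BASH_VERSION` is only set when the interpreter is bash (bash sets it even in POSIX mode, so this checks the interpreter, not the mode); `is_csv` is a made-up example of bash-only syntax.

```bash
#!/usr/bin/env bash
# If this file is run as `sh script.sh` (dash, busybox ash, ...), stop early.
# The guard itself only uses POSIX syntax, so any sh can parse it.
if [ -z "${BASH_VERSION:-}" ]; then
  echo "this script needs bash; run it as: bash $0" >&2
  exit 1
fi

# Bash-only syntax is safe from here on.
is_csv() { [[ $1 == *.csv ]]; }
```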

Always welcome a new shell. I’ve not heard of yash but I’ll check it out.

Any reason to use `#!/bin/sh` over `#!/usr/bin/env sh`?

I personally don’t see the point in using the absolute path to a tool to look up the relative path of your shell, because the shell is always at `/bin/sh`, but the `env` binary might not even exist.

Maybe use it with bash; some BSDs or whatever might have it in `/usr` without having `/bin` symlinked to `/usr/bin`.

There are times when doing so does make sense, e.g. if you need the script to be portable. Of course, it’s the least of your worries in that scenario. Not all systems have bash being accessible at `/bin` like you said, and some would much prefer that you use the first bash that appears in their `PATH`, e.g. in nix.

But yeah, it’s generally pretty safe to assume `/bin/sh` will give you a shell. But there are, apparently, distributions that symlink that to bash, and I’ve even heard of it being symlinked to dash.

Not all systems have bash being accessible at `/bin` like you say

Yeah, but my point is, they might not have `/usr/bin/env` either. Bash, ok; but for POSIX shell and Python, just leave it away.

and I’ve even heard of it being symlinked to dash.

I think Debian and Ubuntu do that (or one of them). And me too on Artix: there’s `dash-as-bin-sh` in the AUR, a pacman hook that symlinks. Nothing important breaks by doing so.

Leaving it away for Python? Are you mad? Why would you want to use my system Python instead of the one specified in my `PATH`?