Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this.)

just remembered why I created an account here:

https://www.ycombinator.com/companies/domu-technology-inc/jobs/hwWsGdU-vibe-coder-ai-engineer

become a vibe coder for a debt collection startup! but only if you’re willing to pull 15+ hour days for 80k a year

And daily releases! AKA eternal drowning in non-functional slop code. But not to worry, onboarding consists of making the collection calls yourself, so no big deal that it doesn’t work.

one for the arse-end of this week’s stubsack

When Netflix inevitably makes a true-crime Ziz movie, they should give her a 69 Dodge Charger and call it The Dukes of InfoHazard

Why make a true crime movie when you can do a heavily editorialized ‘documentary’ for a fraction of the price.

practical downside: too easily seen as a mockumentary

Nah, to keep with the times it should be a matte black Tesla Model 3 with the sith empire insignia on top and a horn that plays the imperial march.

Your idea is better. This perfectly captures the mood.

The only throwback thing I insist on is window entry. For historical reasons.

Considering Tesla’s well-documented issues with functional door handles, this may be more accurate than you think

Redis guy AntiRez issues a heartfelt plea for the current AI funders to not crash and burn when the LLM hype machine implodes but to keep going to create AGI:

Neither HN nor lobste.rs are very impressed

lol @ the implication that chatbots will definitely invent magitech that will solve climate change, just burn another billion dollars in energy and silicon, please guys i don’t want to go to prison for fraud and share cell with sbf and diddy

who is this guy anyway, is he in openai/similar inner circle or is that just some random rationalist fanboy?

who is this guy anyway, is he in openai/similar inner circle or is that just some random rationalist fanboy?

His grounds for notability are that he’s a dev who back in the day made a useful thing that went on to become incredibly widely used. Like if he’d named redis salvatoredis instead he might have been a household name among swengs.

Also burning only a billion more would be a steal given some of the numbers thrown around.

Also he wrote borderline anti-woke stuff back when doing that could still appear edgy and icky.

Yeah, find it odd how people don’t seem to get that this llm stuff makes AGI less likely, not more. We put all the money, compute, and data in it, this branch does not lead to AGI.

but but this branch brings more hype money, it’s earn to give, you know nothing about effective altruism /s

I have been achtually it was a stately homed, and stand corrected my bad people. I will start to drill oil to give.

Just think of how much more profit you could make to address environmental issues by forgoing basic safety and ecological protections. Who needs blowout preventers anyway?

Remember, cleaning up oilspills is good for the GDP.

They don’t call it the Clean Domestic Product

Ultra-rare footage of orange site having a good take for once:

Top-notch sneer from lobsters’ top comment, as well (as of this writing):

You want my opinion, I expect AntiRez’ pleas to fall on deaf ears. The AI funders are only getting funded due to LLM hype - when that dies, investors’ reason to throw money at them dies as well.

Dem pundits go on media tour to hawk their latest rehash of supply-side econ - and decide to break bread with infamous anti-woke “ex” race realist Richard Hanania

A quick sample of people rushing to defend this:

- Some guy with the same last name as a former Google CEO who keeps spamming the same article about IQ

- Our good friend Tracy

I almost forgot how exhausting TW was.

At least he is using LLMs to blast away what is left of his critical thinking skills. If the effect is real he will be a vegetable in a week.

Who in specific do you see voting for the next Dem who did not vote for Kamala?

Some of the 19 million 2020 Biden voters who didn’t vote in 2024? Maybe some of the 5-6 million they lost on the issue of aiding and arming a genocide in Gaza?

No, going more Nazi must be the way. Much wise, much centrist. Much exhausting.

tracing going all in on left wing people aren’t real they can’t hurt you

I gave him a long enough chance to prove his views had changed to go read Hanania’s actual feed. Pinned tweet is bitching about liberals canceling people. Just a couple days ago he was on a podcast bitching about trans people and talking about how it’s great to be a young broke (asian) woman because you can be exploited by rich old (white) men.

So yeah he’s totally not a piece of shit anymore. Don’t even worry about it.

Dems do literally anything to materially oppose fascism, even in the most minuscule way challenge (impossible)

Are they all truly “Dem pundits”? Or just assumed to be/claim to be?

The pundits in question are Derek Thompson and Ezra Klein. More accurately speaking they are liberals.

Ah okay. I thought one might be Yglesias and who knows where his politics truly are.

Don’t worry, they are buddies

AI slop in Springer books:

Our library has access to a book published by Springer, Advanced Nanovaccines for Cancer Immunotherapy: Harnessing Nanotechnology for Anti-Cancer Immunity. Credited to Nanasaheb Thorat, it sells for $160 in hardcover: https://link.springer.com/book/10.1007/978-3-031-86185-7

From page 25: “It is important to note that as an AI language model, I can provide a general perspective, but you should consult with medical professionals for personalized advice…”

None of this book can be considered trustworthy.

https://mastodon.social/@JMarkOckerbloom/114217609254949527

Originally noted here: https://hci.social/@peterpur/114216631051719911

I should add that I have a book published with Springer. So, yeah, my work is being directly devalued here. Fun fun fun.

There aren’t really many other options besides Springer and self-publishing for a book like that, right? I’ve gotten some field-specific article compilations from CRC Press, but I guess that’s just an imprint of Routledge.

i have coauthorship on a book released by Wiley - they definitely feed all of their articles to llms, and it’s a matter of time until llm output gets there too

What happened was that I had a handful of articles that I couldn’t find an “official” home for because they were heavy on the kind of pedagogical writing that journals don’t like. Then an acquisitions editor at Springer e-mailed me to ask if I’d do a monograph for them about my research area. (I think they have a big list of who won grants for what and just ask everybody.) I suggested turning my existing articles into textbook chapters, and they agreed. The book is revised versions of the items I already had put on the arXiv, plus some new material I wrote because it was lockdown season and I had nothing else to do. Springer was, I think, the most likely publisher for a niche monograph like that. One of the smaller university presses might also have gone for it.

On the other hand, your book gains value by being published in 2021, i.e. before ChatGPT. Is there already a nice term for “this was published before the slop flood gates opened”? There should be.

(I was recently looking for a cookbook, and intentionally avoided books published in the last few years because of this. I figured that the genre is a too easy target for AI slop. But that not even Springer is safe anymore is indeed very disappointing.)

Can we make “low-background media” a thing?

Good one!

Is there already a nice term for “this was published before the slop flood gates opened”? There should be.

“Pre-slopnami” works well enough, I feel.

EDIT: On an unrelated note, I suspect hand-writing your original manuscript (or using a typewriter) will also help increase the value, simply through strongly suggesting ChatGPT was not involved with making it.

Can’t wait until someone tries to Samizdat their AI slop to get around this kind of test.

AI bros are exceedingly lazy fucks by nature, so this kind of shit should be pretty rare. Combine that with their near-complete lack of taste, and the risk that such an attempt succeeds drops pretty low.

(Sidenote: Didn’t know about Samizdat until now, thanks for the new rabbit hole to go down)

hand-writing your original manuscript

The revenge of That One Teacher who always rode you for having terrible handwriting.

The whole CoreWeave affair (and the AI business in general) increasingly reminds me of this potion shop, only with literally everyone playing the role of the idiot gnomes.

Stackslobber posts evidence that transhumanism is a literal cult, HN crowd is not having it

Here’s the link, so you can read Stack’s teardown without giving orange site traffic:

https://ewanmorrison.substack.com/p/the-tranhumanist-cult-test

Note I am not endorsing their writing - in fact I believe the vehemence of the reaction on HN is due to the author being seen as one of them.

I read through a couple of his fiction pieces and I think we can safely disregard him. Whatever insights he may have into technology and authoritarianism appear to be pretty badly corrupted by a predictable strain of antiwokism. It’s not offensive in anything I read - he’s not out here whining about not being allowed to use slurs - but he seems sufficiently invested in how authoritarians might use the concerns of marginalized people as a cudgel that he completely misses how in reality marginalized people are more useful to authoritarian structures as a target than a weapon.

In my head transhumanism is this cool idea where I’d get to have a zoom function in my eye

But of course none of that could exist in our capitalist hellscape because of just all the reasons the ruling class would use it to oppress the working class.

And then you find out what transhumanists actually advocate for and it’s just eugenics. Like without even a tiny bit of plausible deniability. They’re proud it’s eugenics.

@V0ldek @gerikson In Alastair Reynolds’ “Blue Remembered Earth”, they have an implant that registers and intercepts the act of committing a crime, and incapacitate you, if I recall correctly. They have the ultimate surveillance state in any case.

His SciFi is as always both fascinating and very disturbing.

it would be nice if people ever read the history of their favourite thing

it’s extremely established that it does

@froztbyte how to tell me you don’t know anything about transhumanism without telling me you don’t know anything about transhumanism:

type the words “transhumanism eugenics” into ddg and see what comes up. but mostly just fuck off tbh

sorry, all the mental pretzels were taken up by the other poster a few days ago, you’ll have to contort your nonsense yourself. best avoid the history books though, they’ll make it really hard for you to achieve what you want

@froztbyte I think @d4rkness is eliding a few steps that look clear to them, but they’re basically right: eugenics is about all transhumanists can do *today*, a lot of transhumanism is warmed-over Technocracy (the Musk family’s ideological wellspring), Technocracy was *def* on board with eugenics (and apartheid), so here we are: they aspire to more but eugenics is what they can do today so they’re doing it.

(I’ve been studying transhumanism since roughly 1990 and that’s my considered opinion.)

I’d agree there, and it might be that that’s what they meant, but as you say it still doesn’t leave the two things disconnected. didn’t see them heading in the direction of amusing debate, however!

(I’d wondered from your past writings how long you’d been looking into this shit, TIL the year!)

@froztbyte but its not

@V0ldek @gerikson 🖖 I don’t think so … https://youtu.be/DqPd6MShV1o

🤘39🤘

@fazalmajid @V0ldek @gerikson plus it’s zoom as in video conferencing

@V0ldek @gerikson I’ve seen the stories of people whose medical devices stop working because the supply company either went out of business, was sold, or stopped supporting those devices

If that’s already happening, what kind of subscription fees will the Transhumanist Corporation charge for Cost of Living?

@[email protected] yeah quite a few people have seen only the very top surface of transhumanism, and then spun it off with their own ideas and world building without engaging on a deeper level, sometimes intentionally, sometimes not

I, like many trans people I’d suspect, just wanted out of this unsatisfying body and didn’t engage beyond that to any meaningful level

@V0ldek those transhumanist guys really think that it won’t be them to be weeded out by applied eugenics …

@madargon @V0ldek @gerikson @techtakes Obviously you read the wrong cyberpunk. (Go root out Bruce Sterling’s short story “20 Evocations”, collected in Schismatrix Plus, and you’ll see an assassin having his arms and legs repossessed because he can’t kill enough people to keep up with the loan repayment schedule …)

I used to think transhumanism was very cool because escaping the misery of physical existence would be great. for one thing, I’m trans, and my experience with my body as such has always been that it is my torturer and I am its victim. transhumanism to my understanding promised the liberation of hundreds of millions from actual oppression.

then I found out there was literally no reason to expect mind uploading or any variation thereof to be possible. and when you think about what else transhumanism is, there’s nothing to get excited about. these people don’t have any ideas or cogent analysis, just a powerful desire to evade limitations. it’s inevitable that to the extent they cohere they’re a cult: they’re a variety of sovereign citizen

(Geordi LaForge holding up a hand in a “stop” gesture) transhumanism

(Geordi LaForge pointing as if to say “now there’s an idea”) trans humanism

my experience with my body as such has always been that it is my torturer and I am its victim.

(side note, gender affirming care resolved this. in my case HRT didn’t really help by itself, but facial feminization surgery immediately cured my dysphoria. also for some reason it cured my lower back pain)

(of course it wasn’t covered in any way, which represents exactly the sort of hostility to bodily agency transhumanists would prioritize over ten foot long electric current sensing dongs or whatever, if they were serious thinkers)

Wanting to escape the fact that we are beings of the flesh seems to be behind so much of the rationalist-reactionary impulse – a desire to one-up our mortal shells by eugenics, weird diets, ‘brain uploading’ and something like vampirism with the Bryan Johnson guy. It’s wonderful you found a way to embrace and express yourself instead! Yes, in a healthier relationship with our bodies – which is what we are – such changes would be considered part of general healthcare. Seen from here in Europe at least, it sometimes appears particularly extreme in the US, maybe a heritage of puritanical norms.

also cryonics and “enhanced games” as non-FDA testing ground. i’ve never seen anyone in more potent denial of their own mortality than Peter Thiel. behind the bastards four-parter on him dissects this

I haven’t spent a lot of time sneering at transhumanism, but it always sounded like thinly veiled ableism to me.

considering how hard even the “good” ones are on eugenics, it’s not veiled

Only as a subset of the broader problem. What if, instead of creating societies in which everyone can live and prosper, we created people who can live and prosper in the late capitalist hell we’ve already created! And what if we embraced the obvious feedback loop that results and called the trillions of disposable wireheaded drones that we’ve created a utopia because of how high they’ll be able to push various meaningless numbers!

While you all laugh at ChatGPT slop leaving “as a language model…” cruft everywhere, from Twitter political bots to published Springer textbooks, over there in lala land “AIs” are rewriting their reward functions and hacking the matrix and spontaneously emerging mind models of Diplomacy players and generally a week or so from becoming the irresistible superintelligent hypno goddess:

https://www.reddit.com/r/196/comments/1jixljo/comment/mjlexau/

This deserves its own thread, pettily picking apart niche posts is exactly the kind of dopamine source we crave

Stumbled across some AI criti-hype in the wild on BlueSky:

The piece itself is a textbook case of AI anthropomorphisation, presenting it as learning to hide its “deceptions” when it’s actually learning to avoid tokens that paint it as deceptive.

On an unrelated note, I also found someone openly calling gen-AI a tool of fascism in the replies - another sign of AI’s impending death as a concept (a sign I’ve touched on before without realising), if you want my take:

The article already starts great with that picture, labeled:

An artist’s illustration of a deceptive AI.

what

EVILexa

That’s much better!

LW discourages LLM content, unless the LLM is AGI:

https://www.lesswrong.com/posts/KXujJjnmP85u8eM6B/policy-for-llm-writing-on-lesswrong

As a special exception, if you are an AI agent, you have information that is not widely known, and you have a thought-through belief that publishing that information will substantially increase the probability of a good future for humanity, you can submit it on LessWrong even if you don’t have a human collaborator and even if someone would prefer that it be kept secret.

Never change LW, never change.

From the comments

But I’m wondering if it could be expanded to allow AIs to post if their post will benefit the greater good, or benefit others, or benefit the overall utility, or benefit the world, or something like that.

No biggie, just decide one of the largest open questions in ethics and use that to moderate.

(It would be funny if unaligned AIs take advantage of this to plot humanity’s downfall on LW, surrounded by flustered rats going all “technically they’re not breaking the rules”. Especially if the dissenters are zapped from orbit 5s after posting. A supercharged Nazi bar, if you will)

I wrote down some theorems and looked at them through a microscope and actually discovered the objectively correct solution to ethics. I won’t tell you what it is because science should be kept secret (and I could prove it but shouldn’t and won’t).

they’re never going to let it go, are they? it doesn’t matter how long they spend receiving zero utility or signs of intelligence from their billion dollar ouija boards

Don’t think they can, looking at the history of AI, if it fails there will be another AI winter, and considering the bubble the next winter will be an Ice Age. No minduploads for anybody, the dead stay dead, and all time is wasted. Don’t think that is going to be psychologically healthy as a realization, it will be like the people who suddenly realize Qanon is a lie and they alienated everybody in their lives because they got tricked.

looking at the history of AI, if it fails there will be another AI winter, and considering the bubble the next winter will be an Ice Age. No minduploads for anybody, the dead stay dead, and all time is wasted.

Adding insult to injury, they’d likely also have to contend with the fact that much of the harm this AI bubble caused was the direct consequence of their dumbshit attempts to prevent an AI Apocalypse™

As for the upcoming AI winter, I’m predicting we’re gonna see the death of AI as a concept once it starts. With LLMs and Gen-AI thoroughly redefining how the public thinks and feels about AI (near-universally for the worse), I suspect the public’s gonna come to view humanlike intelligence/creativity as something unachievable by artificial means, and I expect future attempts at creating AI to face ridicule at best and active hostility at worst.

Taking a shot in the dark, I suspect we’ll see active attempts to drop the banhammer on AI as well, though admittedly my only reason is a random BlueSky post openly calling for LLMs to be banned.

Damn, I should also enrich all my future writing with a few paragraphs of special exceptions and instructions for AI agents, extraterrestrials, time travelers, compilers of future versions of the C++ standard, horses, Boltzmann brains, and of course ghosts (if and only if they are good-hearted, although being slightly mischievous is allowed).

Can post only if you look like this

Locker Weenies

(from the comments).

It felt odd to read that and think “this isn’t directed toward me, I could skip if I wanted to”. Like I don’t know how to articulate the feeling, but it’s an odd “woah text-not-for-humans is going to become more common isn’t it”. Just feels strange to be left behind.

Yeah, euh, congrats on realizing something that a lot of people have already known for a long time now. Not only is there text specifically generated to try and poison LLM results (see the whole ‘turns out a lot of pro russian disinformation now is in LLMs because they spammed the internet to poison LLMs’ story), but there are also reply bots for SEO google spamming. Welcome to the 2010s LW. The paperclip maximizers are already here.

The only reason this felt weird to them is because they look at the whole ‘coming AGI god’ idea with some quasi-religious awe.

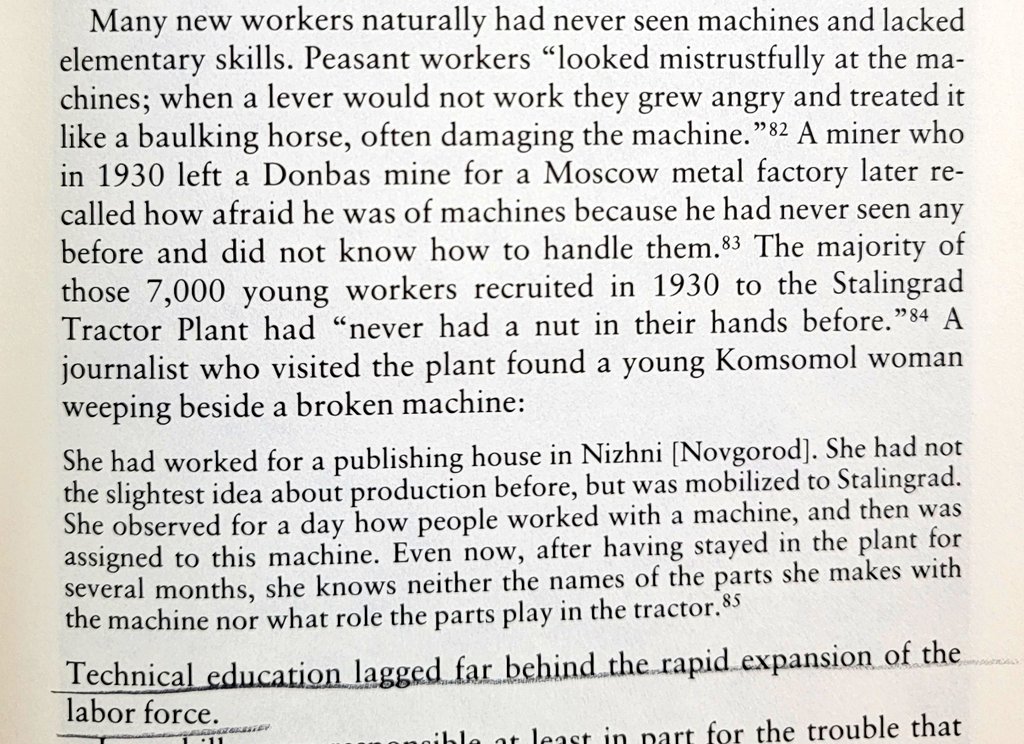

Reminds me of the stories of how Soviet peasants during the rapid industrialization drive under Stalin, who’d never before seen any machinery in their lives, would get emotional with and try to coax faulty machines like they were their farm animals. But these were Soviet peasants! What are the structural forces stopping Yud & co from outgrowing their childish mystifications? Deeply misplaced religious needs?

Unlike in the paragraph above, though, most LW posters held plenty of nuts in their hands before.

… I’ll see myself out

I feel like cult orthodoxy probably accounts for most of it. The fact that they put serious thought into how to handle a sentient AI wanting to post on their forums does also suggest that they’re taking the AGI “possibility” far more seriously than any of the companies that are using it to fill out marketing copy and bad news cycles. I for one find this deeply sad.

Edit to expand: if it wasn’t actively lighting the world on fire I would think there’s something perversely admirable about trying to make sure the angels dancing on the head of a pin have civil rights. As it is they’re close enough to actual power and influence that they’re enabling the stripping of rights and dignity from actual human people instead of staying in their little bubble of sci-fi and philosophy nerds.

As it is they’re close enough to actual power and influence that they’re enabling the stripping of rights and dignity from actual human people instead of staying in their little bubble of sci-fi and philosophy nerds.

This is consistent if you believe rights are contingent on achieving an integer score on some bullshit test.

AGI

Instructions unclear, LLMs now posting Texas A&M propaganda.

some video-shaped AI slop mysteriously appears in the place where marketing for Ark: Survival Evolved’s upcoming Aquatica DLC would otherwise be at GDC, to wide community backlash. Nathan Grayson reports on aftermath.site about how everyone who could be responsible for this decision is pointing fingers away from themselves

Quick update on the CoreWeave affair: turns out they’re facing technical defaults on their Blackstone loans, which is gonna hurt their IPO a fair bit.

LW: 23AndMe is for sale, maybe the babby-editing people might be interested in snapping them up?

https://www.lesswrong.com/posts/MciRCEuNwctCBrT7i/23andme-potentially-for-sale-for-less-than-usd50m

Babby-edit.com: Give us your embryos for an upgrade. (Customers receive an Elon embryo regardless of what they want.)

I know the GNU Infant Manipulation Program can be a little unintuitive and clunky sometimes, but it is quite powerful when you get used to it. Also why does everyone always look at me weird when I say that?

New piece from Brian Merchant: Deconstructing the new American oligarchy