- cross-posted to:

- [email protected]

Driverless cars worse at detecting children and darker-skinned pedestrians, say scientists

Researchers call for tighter regulations following major age- and race-based discrepancies in AI autonomous systems.

Cars should be tested for safety in collisions with children, and the results should affect their safety ratings and taxes. Driverless equipment shouldn't be allowed on the road until these sorts of issues are resolved.

The study only used images and the image recognition system, so its results only apply to self-driving systems that rely purely on image recognition. The only one that does that currently is Tesla, AFAIK.

#blacklivesmatter

I hate all this bias bullshit because it makes the problem bigger than it actually is and passes the wrong idea to the general public.

A pedestrian detection system shouldn’t have as its goal to detect skin tones and different pedestrian sizes equally. There’s no benefit in that. It should do the best it can to reduce the false negative rates of pedestrian detection regardless, and hopefully do better than human drivers in the majority of scenarios. The error rates will be different due to the very nature of the task, and that’s ok.

This is what actually happens during research for the most part, but the media loves to stir up polarization and the public gives them clicks. Pushing for a "reduced bias model" is actually detrimental to overall performance, because it incentivizes developing models that perform worse in scenarios where they could have had an edge, just to serve an artificial demand for reduced bias.

I think you’re misunderstanding what the article is saying.

You’re correct that it isn’t the job of a system to detect someone’s skin color, and judge those people by it.

But the fact that AVs detect dark skinned people and short people at a lower effectiveness is a reflection of the lack of diversity in the tech staff designing and testing these systems as a whole.

The staff are designing the AVs to safely navigate a world of people like them, but when the staff are overwhelmingly male, light-skinned, young, single, urban, and in the United States, a lot of considerations don't even cross their minds.

Will the AVs recognize female pedestrians?

Do the sensors cover a wide enough range of the light spectrum to detect dark-skinned people?

Will the AVs recognize someone with a walker or in a wheelchair, or some other mobility device?

Toddlers are small and unpredictable.

Bicyclists can fall over at any moment.

Are all these AVs, tested in cities, being exposed to all the animals they might encounter in rural areas, like sheep, llamas, otters, alligators, and other animals that might be in the road?

How well will AVs tested in urban areas fare on twisty mountain roads that suddenly change from multi-lane asphalt to narrow dirt roads?

Will they recognize tractors and other farm or industrial vehicles on the road?

Will they recognize something you only encounter in a foreign country like an elephant or an orangutan or a rickshaw? Or what’s it going to do if it comes across that tomato festival in Spain?

Engineering isn’t magical: It’s the result of centuries of experimentation and recorded knowledge of what works and doesn’t work.

Releasing AVs on the entire world without testing them on every little thing they might encounter is just asking for trouble.

What's required for safe driving without human intelligence becomes more mind-boggling the more you think about it.

> But the fact that AVs detect dark skinned people and short people at a lower effectiveness is a reflection of the lack of diversity in the tech staff designing and testing these systems as a whole.

No, it isn't. It's a product of the fact that dark-skinned people are darker and children are smaller. Human drivers have a harder time seeing these individuals too. They literally send less data to the camera sensor. This is why people wear reflective vests for safety at night, and ninjas dress in black.

> They literally send less data to the camera sensor.

So maybe let’s not limit ourselves to using hardware which cannot easily differentiate when there is other hardware, or combinations of hardware, which can do a better job at it?

Humans can’t really get better eyes, but we can use more appropriate hardware in machines to accomplish the task.

This is true, but Tesla and others could compensate for this by spending more time and money training on those form factors, something humans can't really do. It's an opportunity for them to prove the superhuman capabilities of their systems.

That is true. I almost hit a dark guy, wearing black, who was crossing a street at night with no streetlight as I turned into it. Almost gave me a heart attack. It is bad enough almost getting hit, as a white guy, when I cross a street with a streetlight.

That doesn’t make it better.

It doesn’t matter why they are bad at detecting X, it should be improved regardless.

Also, maybe lidar would be a better idea.

These are important questions, but addressing them for each model built independently and optimizing for a low “racial bias” is the wrong approach.

In academia we have reference datasets that serve as standard benchmarks for data-driven prediction models like pedestrian detection. The numbers obtained on these datasets are usually the baselines used when comparing different models. By building comprehensive datasets, we get models that work well across a multitude of scenarios.

Those are all good questions, but they need to be addressed when building such datasets. And whether model M is X% better at detecting people of a given skin color is not relevant, as long as the error rate for every skin color stays within an acceptable range.
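A minimal sketch of the check described above, assuming each annotated pedestrian in a benchmark is tagged with a group label and whether the detector found it. The function names, group labels, data, and the 0.15 ceiling are all hypothetical, chosen only for illustration; real benchmarks use richer metrics than a single miss rate.

```python
from collections import defaultdict

def per_group_miss_rates(records):
    """Miss rate (false negatives / annotated pedestrians) for each group.

    records: iterable of (group_label, detected) pairs, one per annotated
    pedestrian. Labels here are hypothetical placeholders.
    """
    totals = defaultdict(int)
    misses = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        if not detected:
            misses[group] += 1
    return {group: misses[group] / totals[group] for group in totals}

def groups_above_ceiling(rates, max_miss_rate):
    """Groups whose miss rate exceeds the acceptable ceiling, sorted by name."""
    return sorted(group for group, rate in rates.items() if rate > max_miss_rate)

# Hypothetical data: group A misses 1 of 10, group B misses 2 of 10.
records = [("A", True)] * 9 + [("A", False)] + [("B", True)] * 8 + [("B", False)] * 2
rates = per_group_miss_rates(records)
print(rates)                              # {'A': 0.1, 'B': 0.2}
print(groups_above_ceiling(rates, 0.15))  # ['B']
```

The check passes or fails each group against an absolute ceiling rather than against the gap between groups, which matches the framing in the comment above.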

The media has become ridiculously racist; they go out of their way to make every incident appear to be racial now.

They need Google Pixel cameras.

Night Sight is a beast of a feature

I was thinking more along the lines of being better at reproducing black skin tones.

On that front, I think Google is the only company actively (or at least publicly) training their image processing feature to adequately handle darker skin

This has been the case with pretty much every single piece of computer-vision software to ever exist…

Darker individuals blend into dark backgrounds better than lighter-skinned individuals. Dark backgrounds are more common than light ones, i.e., the absence of sufficient light is more common than 24/7 well-lit environments.

Obviously computer vision will struggle more with darker individuals.

Visible light is racist.

deleted by creator

No it’s because they train AI with pictures of white adults.

It literally wouldn’t matter for lidar, but Tesla uses visual cameras to save money and that weighs down everyone else’s metrics.

Lumping lidar cars in with Tesla makes no sense.

If the computer vision model can’t detect edges around a human-shaped object, that’s usually a dataset issue or a sensor (data collection) issue… And it sure as hell isn’t a sensor issue because humans do the task just fine.

Which cars are equipped with human eyes for sensors?

> And it sure as hell isn't a sensor issue because humans do the task just fine.

Sounds like you have never reviewed dash camera video or low light photography.

Do they? People driving at night quite often have a hard time seeing pedestrians wearing dark colors.

Worse than humans?!

I find that very hard to believe.

We consider it the cost of doing business, but self-driving cars have an obscenely low bar to clear to surpass us in terms of safety. The biggest hurdle they have to overcome is accounting for irrational human drivers and other irrational humans diving into traffic, which even the rare decent human driver can't always account for.

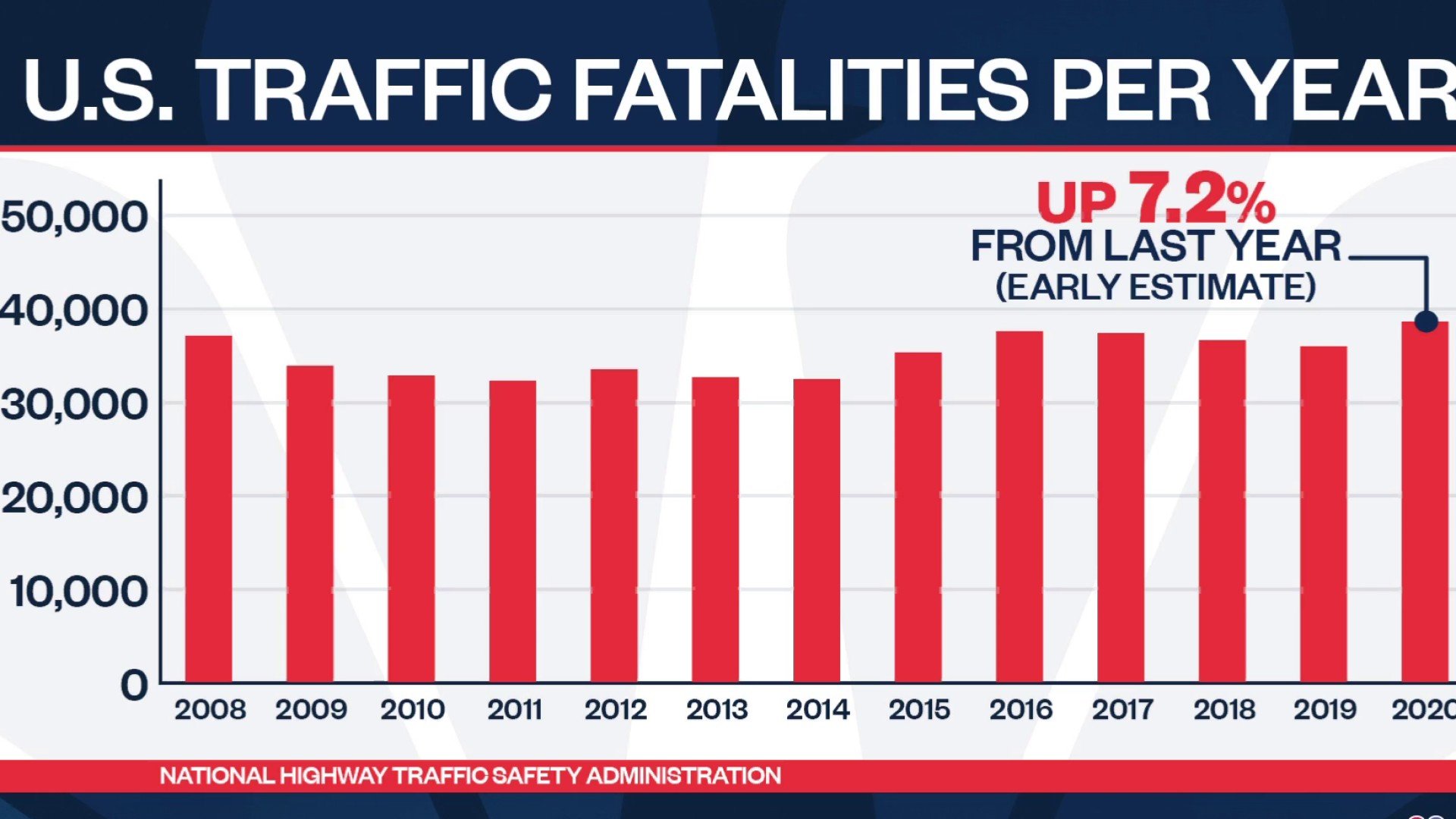

American human drivers kill more people every year than ten 9/11s' worth. I'd rather modernizing and automating our roadways were a moonshot national endeavor, but we don't do that here anymore, so we complain when the incompetent, narcissistic asshole who claimed the project for private profit turned out to be an incompetent, narcissistic asshole.

The tech is inevitable, there are no physics or computational power limitations standing in our way to achieve it, we just lack the will to be a society (that means funding stuff together through taxation) and do it.

Let's just trust another billionaire to do it for us and act in the best interests of society, though. That's been working just gangbusters, hasn't it?

Not necessarily worse than humans, no, just worse than it can detect light skinned and tall people.

You're proving their point. That's Tesla, the one run by an edgy, narcissistic billionaire asshole, not the companies with better tech under (and above, in this case) the hood.

All I am saying is that the article doesn't attempt to make any comparison between humans' and AI's ability to detect dark-skinned people or children. The "worse" mentioned in the poorly worded (misleading) headline was comparing the detection rates of AI only.

Any black people or children in your ‘study’?

2020 was the lockdown year; how on earth did accidents increase in the US?

Ah yes, because all humans are equally bad drivers.

A self-driving car shouldn’t compete with the average human because the average human is a fucking idiot. A self-driving car should drive better than a good driver, or else you’re just putting more idiots on the road.

Replacing bad drivers with OK drivers is a net win. Let's not let perfection be the enemy of progress.

Probably could have stopped that headline at the third word.

Isn’t that true for humans as well? I know I find it harder to see children due to the small size and dark skinned people at night due to, you know, low contrast (especially if they are wearing dark clothes).

Human vision be racist and ageist

P.S. But yes, please do improve the algorithms.

Part of the children problem is distinguishing between ‘small’ and ‘far away’. Humans seem reasonably good at it, but from what I’ve seen AIs aren’t there yet.

Yeah, this probably accounts for 90% of the issue.
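The small-versus-far ambiguity falls straight out of the pinhole camera model: an object of height H at distance Z projects to about f·H/Z pixels, where f is the focal length in pixels. A minimal sketch, assuming an ideal pinhole camera (no lens distortion) with a hypothetical 1000 px focal length and illustrative heights and distances:

```python
def projected_height_px(object_height_m, distance_m, focal_length_px):
    """Ideal pinhole projection: image height = f * H / Z."""
    return focal_length_px * object_height_m / distance_m

def height_from_image(image_height_px, distance_m, focal_length_px):
    """Invert the projection; only possible if the distance Z is known."""
    return image_height_px * distance_m / focal_length_px

F_PX = 1000.0  # hypothetical focal length in pixels

child_near = projected_height_px(1.0, 10.0, F_PX)  # 1.0 m tall, 10 m away
adult_far = projected_height_px(1.8, 18.0, F_PX)   # 1.8 m tall, 18 m away
print(child_near, adult_far)  # 100.0 100.0 -> identical in the image
```

From a single frame the two cases are indistinguishable; `height_from_image` only works once the distance is known, which is why depth cues (stereo, lidar, radar, or learned priors about scene layout) matter here.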

LiDAR doesn’t see skin color or age. Radar doesn’t either. Infra-red doesn’t either.

Do lidar and infrared work equally on white and black people? Both are still optical systems, and I don’t know how well black people reflect infrared light.

Seriously, no pun intended: infrared cameras see black-body radiation, which depends on the temperature of the object being imaged, not its surface chemistry. As long as a person has a live human body temperature, they're glowing with plenty of long-wave IR to see them, regardless of their skin's melanin content.

I thought we were talking about near-IR cameras using active illumination (so, an IR spotlight).

I didn't think long-wave IR cameras were fast enough or had enough resolution to be used on a car. Every one I have seen so far gives you a low-res, low-FPS image, because there just isn't enough long-wave IR falling into the lens.

You know, on any camera you have to balance exposure time and noise/resolution with the amount of light getting into the lens. If the amount of light decreases, you have to either take longer exposures (->lower FPS), decrease the resolution (so that more light falls onto one pixel), or increase the ISO, thereby increasing noise. Or increase the lens diameter, but that’s not always an option.

And since there's so little long-wave IR "light", I didn't think the result would work for cars, which need decent resolution, high FPS, and low noise so that their image recognition algos aren't confused.
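The exposure tradeoff above can be put in numbers with a shot-noise model: photon arrivals are Poisson-distributed, so N collected photons carry noise of about √N, giving a best-case SNR of √N. A minimal sketch, assuming a hypothetical photon flux and ignoring read noise and dark current:

```python
import math

def photons_per_output_pixel(flux_per_px_per_s, exposure_s, bin_factor=1):
    """Photons collected per output pixel; bin_factor x bin_factor sensor
    pixels are summed into one output pixel (trading resolution for light)."""
    return flux_per_px_per_s * exposure_s * bin_factor ** 2

def shot_noise_snr(photons):
    """Poisson noise has std dev sqrt(N), so SNR = N / sqrt(N) = sqrt(N)."""
    return math.sqrt(photons)

BRIGHT_FLUX = 10_000.0       # hypothetical photons / pixel / second
DIM_FLUX = BRIGHT_FLUX / 4   # the scene gets 4x darker

baseline = shot_noise_snr(photons_per_output_pixel(BRIGHT_FLUX, 1 / 100))
dim = shot_noise_snr(photons_per_output_pixel(DIM_FLUX, 1 / 100))
# Two ways to win the SNR back in the dim scene:
longer_exposure = shot_noise_snr(photons_per_output_pixel(DIM_FLUX, 4 / 100))  # max FPS drops 100 -> 25
binned = shot_noise_snr(photons_per_output_pixel(DIM_FLUX, 1 / 100, bin_factor=2))  # half the resolution per axis

print(round(baseline, 2), round(dim, 2), round(longer_exposure, 2), round(binned, 2))
# 10.0 5.0 10.0 10.0
```

A 4x drop in light halves the SNR, and getting it back costs either 4x the exposure time (fewer frames per second) or 2x2 binning (a quarter of the pixels), the same tradeoff described in the comment.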

LiDAR, radar and infra-red may still perform worse on children due to children being smaller and therefore there would be fewer contact points from the LiDAR reflection.
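A rough way to see the contact-point argument: on a scanning lidar, the number of returns from a target scales with the angle it subtends, so a smaller target at the same range gets fewer points. A minimal sketch, assuming a uniform scan grid with a hypothetical 0.2° horizontal and 0.4° vertical spacing and illustrative body dimensions; it ignores beam divergence, occlusion, and reflectivity:

```python
import math

def subtended_deg(size_m, range_m):
    """Full angle subtended by a flat target of the given size, facing the sensor."""
    return math.degrees(2 * math.atan(size_m / (2 * range_m)))

def expected_lidar_hits(width_m, height_m, range_m, h_res_deg, v_res_deg):
    """Approximate count of scan-grid points landing on the target."""
    cols = math.floor(subtended_deg(width_m, range_m) / h_res_deg)
    rows = math.floor(subtended_deg(height_m, range_m) / v_res_deg)
    return cols * rows

H_RES, V_RES = 0.2, 0.4  # hypothetical angular spacing in degrees

adult = expected_lidar_hits(0.5, 1.8, 20.0, H_RES, V_RES)  # 0.5 m x 1.8 m at 20 m
child = expected_lidar_hits(0.3, 1.0, 20.0, H_RES, V_RES)  # 0.3 m x 1.0 m at 20 m
print(adult, child)  # 84 28
```

Because both the column and row counts shrink roughly in proportion to 1/range, the total falls off roughly with the square of the range, so a child-sized target drops to a handful of points sooner than an adult-sized one, with skin color playing no part.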

I work in a self driving R&D lab.

Would you be willing to share some neato stuff about your job with us?

How about skin color? Does darker skin reflect LiDAR/infrared the same way as light skin?

Infrared cameras don’t depend on you reflecting infrared. You’re emitting it.

All matter emits light; the frequencies that it’s brightest in depend on the matter’s temperature. Objects around human body temperature mostly glow in the long-wave infrared. It doesn’t matter what your skin color is; “color” is a different chunk of spectrum.
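The "brightest frequency depends on temperature" point is Wien's displacement law, λ_peak = b / T, with b ≈ 2.898 × 10⁻³ m·K. A quick check, using roughly 310 K for body temperature and 5772 K for the Sun's surface as illustrative inputs:

```python
WIEN_B_M_K = 2.897771955e-3  # Wien's displacement constant, metre-kelvin

def peak_wavelength_um(temperature_k):
    """Peak wavelength of black-body emission in micrometres (Wien's law)."""
    return WIEN_B_M_K / temperature_k * 1e6

human = peak_wavelength_um(310.0)  # roughly body temperature
sun = peak_wavelength_um(5772.0)   # approximate solar surface temperature
print(f"{human:.2f} um, {sun:.2f} um")  # 9.35 um, 0.50 um
```

The body-temperature peak lands around 9 to 10 µm, inside the long-wave IR band that thermal cameras image, while the solar peak sits in visible light, which is the reflected light an ordinary camera (and skin color) depends on.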

Sorry, I misunderstood. I know about all that; I just thought it meant actively IR-illuminated cameras. So basically, an IR light on the car illuminating the road ahead, and then a near-IR camera used like a regular optical camera.

I didn't think it meant a far-IR camera passively imaging black-body radiation, because I thought the resolution (both spatial and temporal) of these cameras is usually really low. I didn't think they were fast and high-res enough to be used on cars.

That’s a fair observation! LiDAR, radar, and infra-red systems might not directly detect skin color or age, but the point being made in the article is that there are challenges when it comes to accurately detecting darker-skinned pedestrians and children. It seems that the bias could stem from the data used to train these AI systems, which may not have enough diverse representation.

The main issue, as someone else pointed out as well, is in image detection systems only, which is what this article is primarily discussing. Lidar does have its own drawbacks, however. I wouldn't be surprised if those systems still didn't detect children as reliably. Skin color definitely wouldn't be a consideration for it, though, as that's not really how that tech works.

Ya hear that Elno?

DRIVERLESS CARS: We killed them. We killed them all. They’re dead, every single one of them. And not just the pedestmen, but the pedestwomen and the pedestchildren, too. We slaughtered them like animals. We hate them!

This is kinda why I dislike cars and self-driving cars. Self-driving cars are made more and more cost-effective through compromises on safety. I feel like the US needs to mandate lidar on anything that has driver-assist features. Self-driving cars have been in a grey area for too long.

You're just describing Tesla. It's not even most self-driving car companies, it's just Tesla. If there are others cutting corners, I've never heard of them.

Damn ageist AI!

Racist lighting!

Self driving cars are republicans?

Built by them, inherited their biases.

They just want to do abortions on the road. Just a few years after birth.

What? No. They’d need to recognise them better - otherwise how can they swerve to make sure they hit them?

deleted by creator

I'm not an expert, but perhaps a thermal camera plus a lidar sensor could help.

It’s amazing Elon hasn’t figured this out. Then again, Steve Jobs said no iPhone would ever have an OLED screen.

We should just assume CEOs are stupid at this point. Seriously. It's a very common trend we all keep seeing. If they prove otherwise, then that's great! But let's start them at "dumbass" and move forward from there.