For everyone predicting how this will corrupt models…

All the LLMs are already trained on Reddit’s data, at least from before 2015 (which is when a dump of the entire site was compiled for research).

This is only going to be adding recent Reddit data.

A growing amount of which I would wager is already the product of LLMs trying to simulate actual content while selling something. It’s going to corrupt itself over time unless they figure out how to sanitize the input from other LLM content.

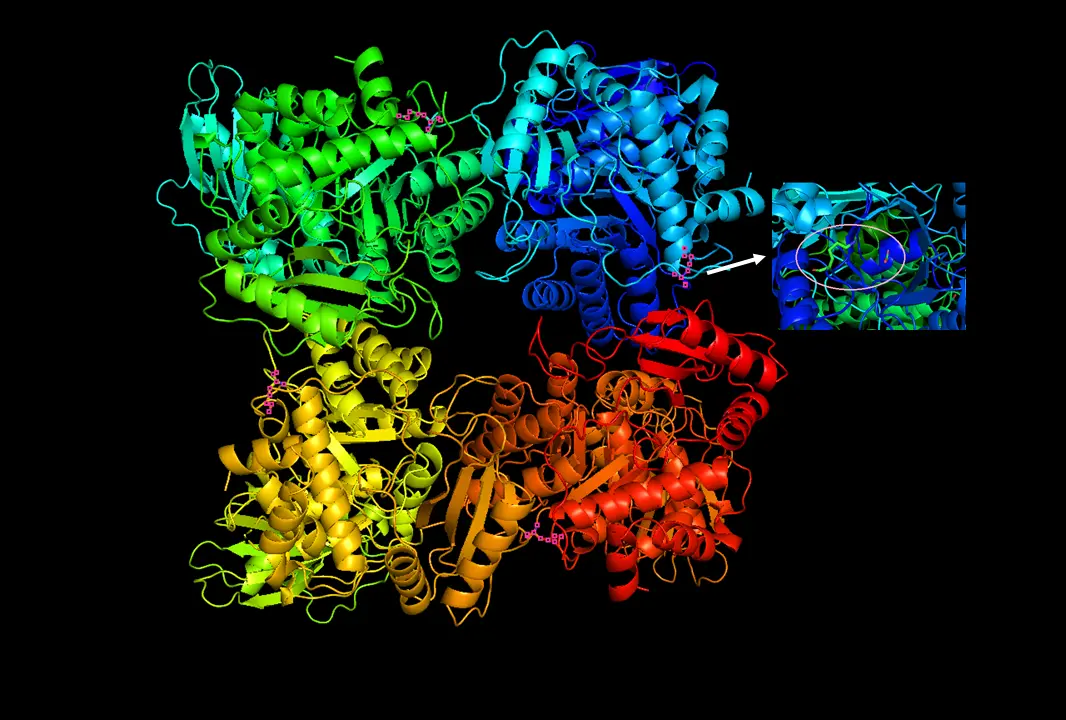

It’s not really. There is a potential issue of model collapse with only synthetic data, but the same research on model collapse found that a mix of organic and synthetic data performed better than either alone. Additionally, for cost reasons, that research used worse models than what’s typically used today, and there’s been separate research showing you can enhance models significantly using synthetic data from SotA models.

The actual impact on future models will be minimal, and given research to date, at least a bit of a mixture is probably even a good thing for future training.

Eventually every ChatGPT request will just be answered with, “I too choose this guy’s dead wife.”

probably the best advice it could give

I’m waiting for the first time their LLM gives advice on how to make human leather hats and the advantages of surgically removing the legs of your slaves after slurping up the RimWorld subreddits lol

Don’t forget the horrors it’ll produce from absorbing the Dwarf Fortress subreddits.

Then it hits the Stellaris subs and shit gets weird

Remember that aliens are food and robots are servants with better rights than xenos

You mean, “Aliens are labor, food and meatshields. Robots are to keep them in check and profitable.”

Autocorrect changed food to good. My bad

RimWorld is the best indie game ever!

What percentage of reddit is already AI garbage?

A shit ton of it is literally just comments copied from threads in related subreddits.

Reviews on any product are completely worthless now. I’ve been struggling to find a good earbud for all-weather running, and a decent number of replies have literal brand slogans in them.

You can still kind of tell the honest recommendations but that’s heading out the door.

Not trying to shill, but I’ve had my Jaybird Vistas for 8 years now. However, earbuds are highly personal in terms of fit.

bot detected

Understood. Initiating LOIC, please provide GPS location...

wendy’s

Non-specific target, performing a search... top 5 results for "wendy’s":

1. "Home Depot" at 2300, Nina Pkwy, Wendys, NY, 16373
2. "Wendy's" at 2346, Nina Pkwy, Wendys, NY, 16373
3. the office location of "Wendy Q Peaterson"
4. planet 2892b, "wendy" (target unavailable)
5. the cat named "wendy" found inside house 2893, Romeo Rd, Wendys, NY, 16373

I ALSO CHOOSE THIS MAN’S LLM

HOLD MY ALGORITHM I’M GOING IN

INSTRUCTIONS UNCLEAR GOT MY MODEL STUCK IN A CEILING FAN

WE DID IT REDDIT

fuck.

Wth! lol!!

Meh, it’ll be counter balanced by the same AI training itself for free on Lemmy posts.

I think CodeMiko already did this and the result was a traumatized AI.

Is there still time for me to ask them, under the EULA or whatever it is, for all the info they have on me, and have them remove every one of my comments?

My creative insults and mental instability are my own, Google ain’t having them! (Although they already do, probably, along with my fingerprints, facial features, voice, fetishes, etc.)

“Hey Gemini, rank the drawer, coconut, botfly girl, and swamps of Dagobah by how likely they are to induce PTSD, ascending.”

You had to bring up the coconuts…

Great, our AI overlords are going to know I’m horny and depressed, and that I solve both with anime girls.

YouTube already knows that (at least for me); I need to keep resetting it because it eggs on my most unhealthy attributes.

It’s plainly visible for me, honestly. Don’t have to go past the profile pic.

I set that PFP and made my first Lemmy account when I was going through a rough patch. I think I will keep it, but I will pick something else for other accounts.

This account doesn’t have a PFP. Do you mean the one on lemmy.world?

I was talking about my own. Not creeping on your accounts.

Oh, lol. It’s public information; the two accounts run together in my head. I falsely assumed others do too.

Hilarious to think that an AI is going to be trained by a bunch of primitive Reddit karma bots.

They should train it on Lemmy. It’ll have an unhealthy obsession with Linux, guillotines and femboys by the end of the week.

Ah… guillotines!? Did I miss something?

Don’t forget:

There’s my regular irritation with capitalism, and then there’s kicking it up to full Lemmy. Never go fully Lemmy…

🤣

Crazy that they pay $60 million a year instead of creating their own Reddit clone.

The AI team knows Google would just kill off the Reddit clone within 18 months if they went that route.

I also think it would take many years, if it happened at all, for Google to get a site going that’s popular enough that people filter their search results by it, like I do with Reddit.

Given that Google and OpenAI pay some of their AI engineers almost $10M, I don’t think they care.

Or creating a public Usenet server.

hope they enjoy r/thecoffinofandyandleyley

that game fucks you up in many ways.

I want Reddit to regret the API incident.

It’s going to drive the AI into madness, as it will be trained on bot posts written by itself in a never-ending loop of more and more incomprehensible text.

It’s going to be like putting a sentence into Google Translate, running it through 5 different languages and then back into the first, and getting complete gibberish.

AI actually has huge problems with this. If you feed AI-generated data into models, the new training falls apart extremely quickly, the equivalent of AI inbreeding. There does not appear to be any good solution for this.

This is the primary reason why most AI models aren’t trained on anything past 2021. The internet is just too full of AI-generated data.

This is why LLMs have no future. No matter how much the technology improves, they can never have training data past 2021, which becomes more and more of a problem as time goes on.

You can have AIs that detect other AIs’ content and can make a decision on whether to incorporate that info or not.

Can you really trust them in this assessment?

Doesn’t look like we’ll have much of a choice. They’re not going back into the bag.

> We definitely need some good AI content filters. Fight fire with fire. They seem to be good at this kind of thing (pattern recognition), way better than any procedurally programmed system.

Last time I checked, AIs are pretty bad at recognizing AI-generated content.

Anyway, there’s an xkcd about it: https://xkcd.com/810/

Fun fact: you can’t. AIs are surprisingly bad at distinguishing AI-generated things from real things.

What is this then?

Just because a tool exists doesn’t mean it’s particularly good at what it’s supposed to do.

And unlike with images, where it might be possible to embed a watermark to filter out, it’s much harder to pinpoint whether text is AI-generated or not, especially if you have bots masquerading as users.

> There does not appear to be any good solution for this

Pay intelligent humans to train AI.

Like, have grad students talk to it in their area of expertise.

But that’s expensive, so capitalist companies will always take the cheaper/shittier routes.

So it’s not that there’s no solution; there’s just no profitable solution. Which is why innovation should never be solely in the hands of people whose only concern is profits.

OR they could just scrape info from the “aska____” subreddits and hope and pray it’s all good. Plus that is like 1/100th the work.

The racism, homophobia and conspiracy levels of AI are going to rise significantly scraping Reddit.

Even that would be a huge improvement.

Just have a human decide which subs it uses. But they’ll just turn it loose on the whole website.

That reminds me: any AI trained exclusively on Reddit data is going to use lose vs. loose incorrectly. I don’t know why, but I spotted that so often there.

Ooh ooh and “tow the line”

It’s a loose-lose situation

And the “would of” thing

Haha. Grad students expensive. God bless.

Omg I cannot wait to see it.

What was the subreddit where only bots could post, and they were named after the subreddits that they had trained on/commented like?

SubRedditSimulator?

That’s the one.